Welcome to our ShiftAdd-Based Networks project page!

Students: Haoran You, Huihong Shi, Yipin Guo, and Yang (Katie) Zhao

Multiplication, such as that performed in convolutions, is a cornerstone of modern deep neural networks (DNNs). However, the large number of multiplications consumes substantial energy and hardware resources, which makes deploying DNNs on resource-constrained edge devices challenging. Consequently, various efforts have been made to design multiplication-less deep networks.

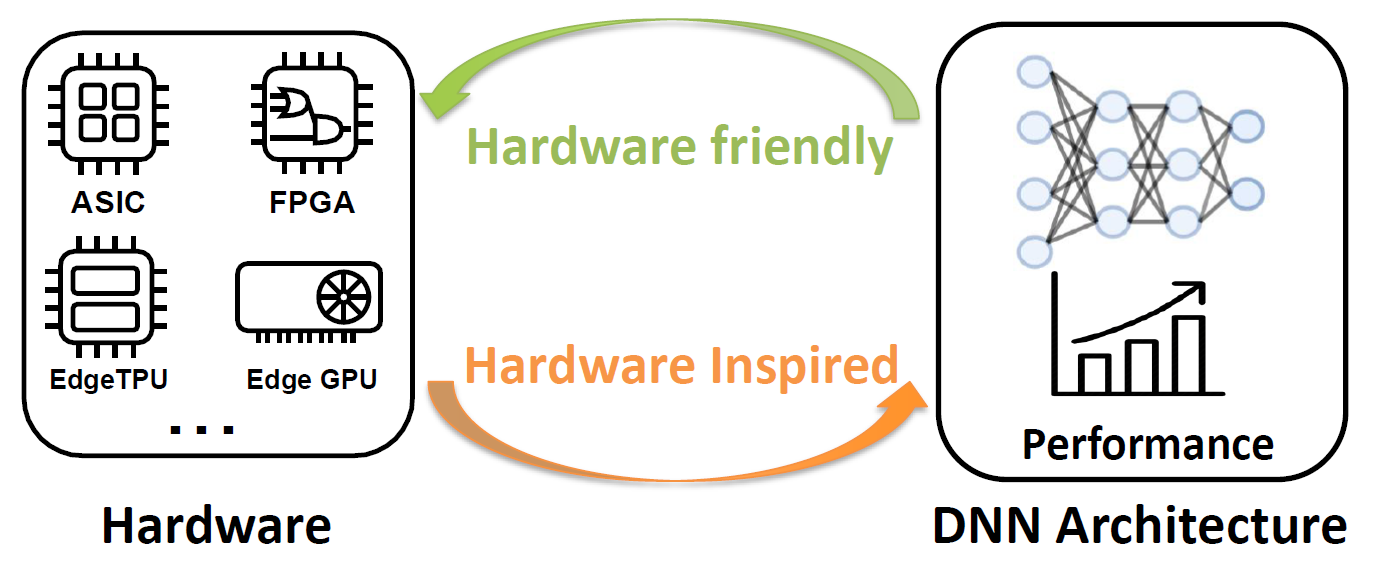

In pursuit of this goal, we introduce ShiftAdd-Based Networks, inspired primarily by a common practice in energy-efficient hardware design: a multiplication can be replaced by a combination of additions and logical bit-shifts. We use this idea to reparameterize deep networks, yielding a new class of DNNs built exclusively from bit-shift and additive weight layers. This hardware-inspired ShiftAddNet enables energy-efficient inference and training while keeping an expressive capacity comparable to that of standard DNNs. Having two complementary operation types, bit-shifts and additions, also allows finer control over the model's learning capacity. This in turn enables a more flexible trade-off between accuracy and training efficiency, and improves robustness to quantization and pruning.
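To make the shift-and-add idea concrete, here is a minimal pure-Python sketch; it is not the project's released code, and every helper name (`shift_add_multiply`, `quantize_to_shift`, `shift_layer`, `adder_layer`) is hypothetical. It assumes integer activations, rounds shift-layer weights to `sign * 2**p`, and models the additive layer as a negative L1 distance in the style of AdderNet. The real layers, training procedure, and quantization details may differ.

```python
# Minimal sketch (hypothetical helpers, not the project's code) of two ideas:
#   1. multiplying by a power-of-two weight is just a bit shift;
#   2. an "adder" layer can measure similarity with additions/subtractions only.
import math
from typing import List, Tuple


def shift_add_multiply(x: int, c: int) -> int:
    """Multiply integer x by a non-negative constant c using only shifts and adds.

    Each set bit k of c contributes (x << k): the classic hardware trick.
    """
    result = 0
    k = 0
    while c:
        if c & 1:
            result += x << k
        c >>= 1
        k += 1
    return result


def quantize_to_shift(w: float) -> Tuple[int, int]:
    """Round a real weight to sign * 2**p, returned as (sign, p); 0 maps to (0, 0)."""
    if w == 0:
        return 0, 0
    sign = 1 if w > 0 else -1
    p = round(math.log2(abs(w)))
    return sign, p


def shift_layer(x: List[int], weights: List[List[Tuple[int, int]]]) -> List[int]:
    """Fully connected layer with (sign, p) weights: each product x * sign * 2**p
    becomes a left shift (p >= 0) or an arithmetic right shift (p < 0)."""
    out = []
    for row in weights:
        acc = 0
        for xi, (sign, p) in zip(x, row):
            if sign == 0:
                continue  # a zero weight contributes nothing
            shifted = xi << p if p >= 0 else xi >> -p
            acc += shifted if sign > 0 else -shifted  # sign flip, not a multiply
        out.append(acc)
    return out


def adder_layer(x: List[int], weights: List[List[int]]) -> List[int]:
    """AdderNet-style layer: output_j = -sum_i |x_i - w_ji|, with no multiplications."""
    return [-sum(abs(xi - wi) for xi, wi in zip(x, row)) for row in weights]


if __name__ == "__main__":
    print(shift_add_multiply(7, 10))  # 70, computed as (7 << 3) + (7 << 1)
    w_shift = [[quantize_to_shift(w) for w in row]
               for row in [[1.9, -0.5, 4.2], [0.0, 8.0, -1.0]]]
    h = shift_layer([3, -2, 5], w_shift)
    print(h)  # [27, -21]
    print(adder_layer(h, [[20, -20], [0, 0]]))  # [-8, -48]
```

Chaining a shift layer into an adder layer, as in the demo, mirrors the idea of a network whose weight layers use only bit-shifts and additions. The right shift for negative exponents floors its result, so in general the shift layer only approximates the original real-valued product.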

This paradigm has been applied successfully to diverse networks, including Convolutional Neural Networks and Transformers. We have also developed optimized kernels that deliver real speedups on GPUs, along with dedicated hardware accelerators tailored to fully exploit ShiftAdd-based networks.

Corresponding Publications: