All Publications

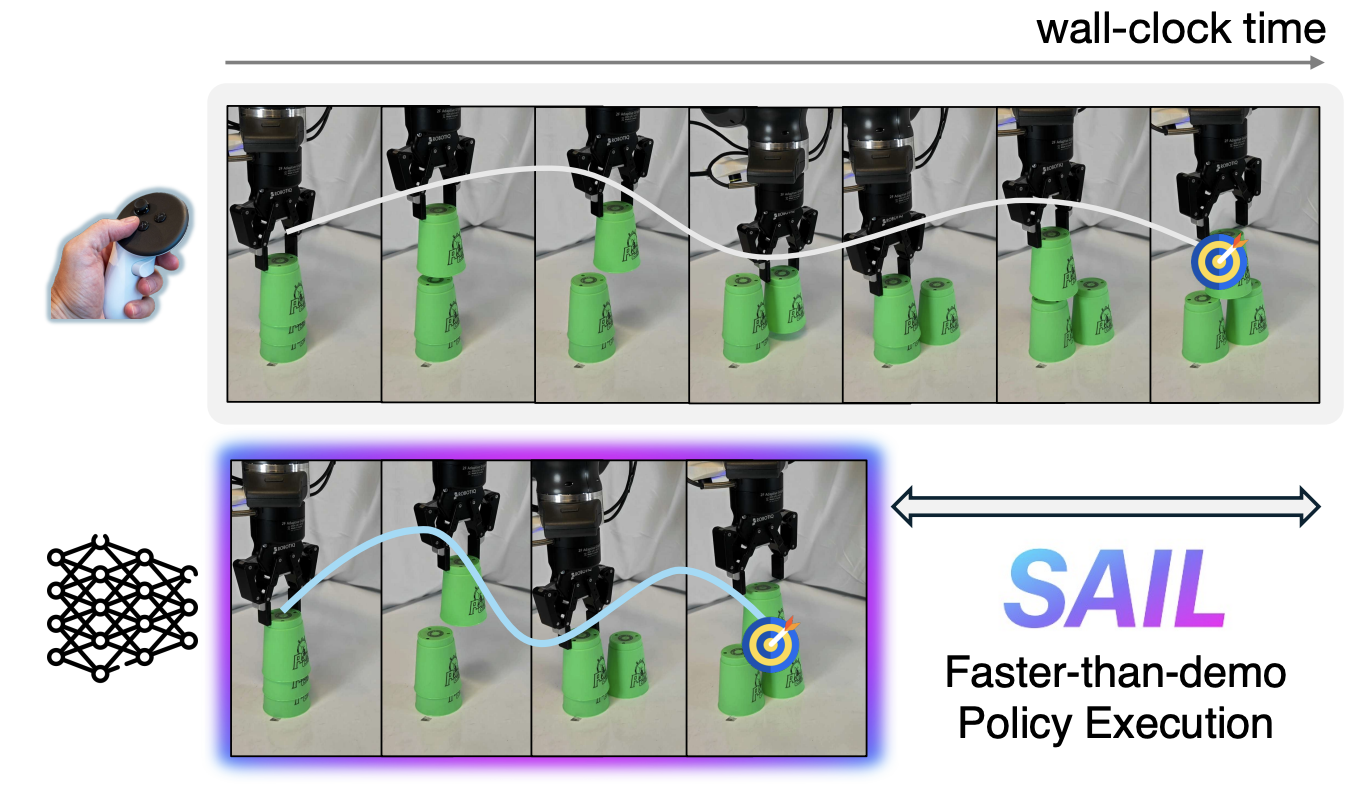

[CoRL 2025] SAIL: Faster-than-Demonstration Execution of Imitation Learning Policies

Nadun Ranawaka Arachchige, Zhenyang Chen, Wonsuhk Jung, Woo Chul Shin, Rohan Bansal, Pierre Barroso, Yu Hang He, Yingyan (Celine) Lin, Benjamin Joffe, Shreyas Kousik, Danfei Xu

[Abstract] [Paper]

Offline Imitation Learning (IL) methods such as Behavior Cloning are effective at acquiring complex robotic manipulation skills. However, existing IL-trained policies are confined to executing the task at the same speed as shown in demonstration data. This limits the task throughput of a robotic system, a critical requirement for applications such as industrial automation. In this paper, we introduce and formalize the novel problem of enabling faster-than-demonstration execution of visuomotor policies and identify fundamental challenges in robot dynamics and state-action distribution shifts. We instantiate the key insights as SAIL (Speed Adaptation for Imitation Learning), a full-stack system integrating four tightly-connected components: (1) a consistency-preserving action inference algorithm for smooth motion at high speed, (2) high-fidelity tracking of controller-invariant motion targets, (3) adaptive speed modulation that dynamically adjusts execution speed based on motion complexity, and (4) action scheduling to handle real-world system latencies. Experiments on 12 tasks across simulation and two real, distinct robot platforms show that SAIL achieves up to a 4x speedup over demonstration speed in simulation and up to 3.2x speedup in the real world.

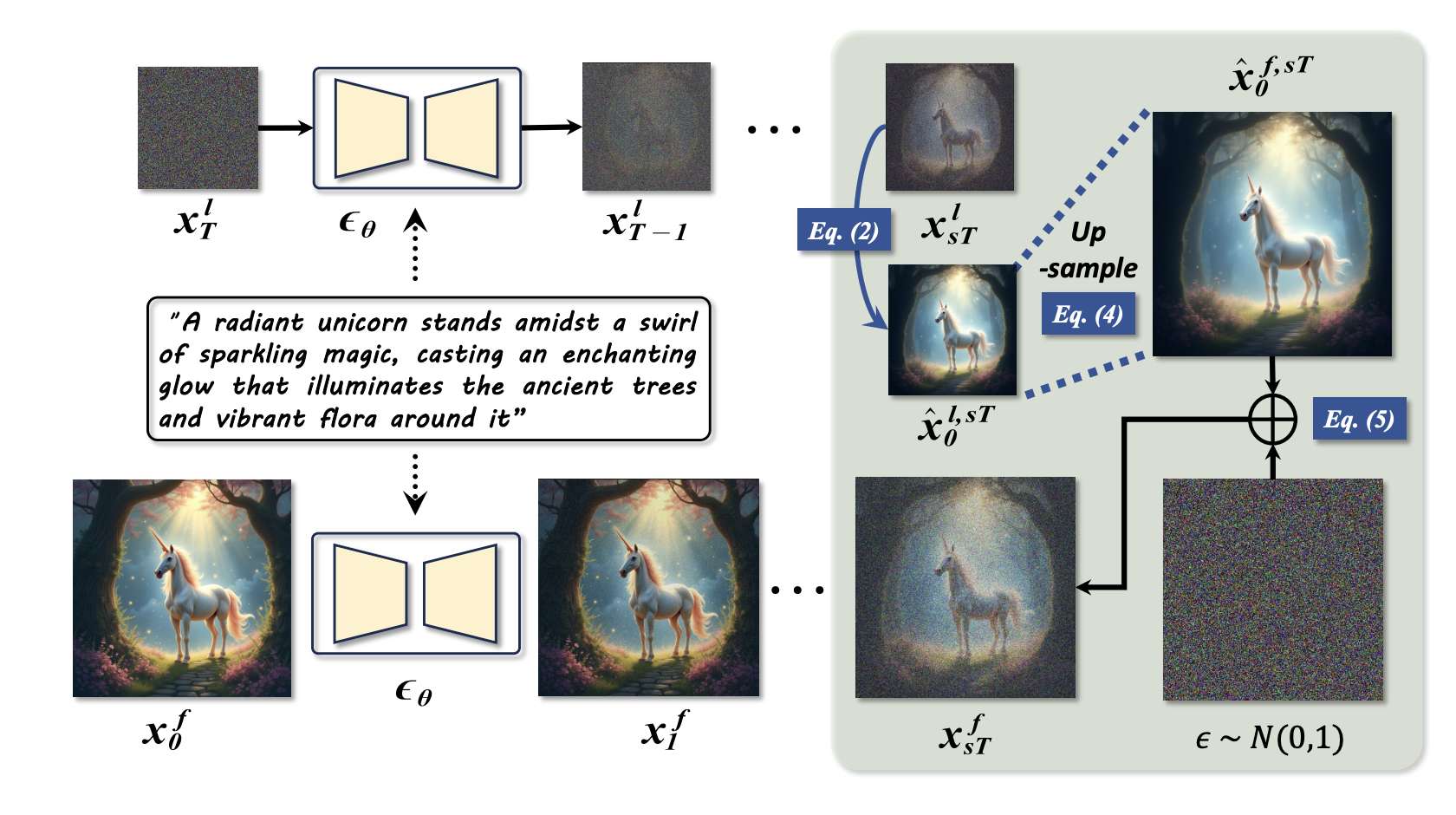

[ICCV 2025] Fewer Denoising Steps or Cheaper Per-Step Inference: Towards Compute-Optimal Diffusion Model Deployment

Zhenbang Du, Yonggan Fu, Lifu Wang, Jiayi Qian, Xiao Luo, Yingyan (Celine) Lin

[Abstract] [Paper]

Diffusion models have shown remarkable success across generative tasks, yet their high computational demands challenge deployment on resource-limited platforms. This paper investigates a critical question for compute-optimal diffusion model deployment: Under a post-training setting without fine-tuning, is it more effective to reduce the number of denoising steps or to use a cheaper per-step inference? Intuitively, reducing the number of denoising steps increases the variability of the distributions across steps, making the model more sensitive to compression. In contrast, keeping more denoising steps makes the differences smaller, preserving redundancy, and making post-training compression more feasible. To systematically examine this, we propose PostDiff, a training-free framework for accelerating pre-trained diffusion models by reducing redundancy at both the input level and module level in a post-training manner. At the input level, we propose a mixed-resolution denoising scheme based on the insight that reducing generation resolution in early denoising steps can enhance low-frequency components and improve final generation fidelity. At the module level, we employ a hybrid module caching strategy to reuse computations across denoising steps. Extensive experiments and ablation studies demonstrate that (1) PostDiff can significantly improve the fidelity-efficiency trade-off of state-of-the-art diffusion models, and (2) to boost efficiency while maintaining decent generation fidelity, reducing per-step inference cost is often more effective than reducing the number of denoising steps.

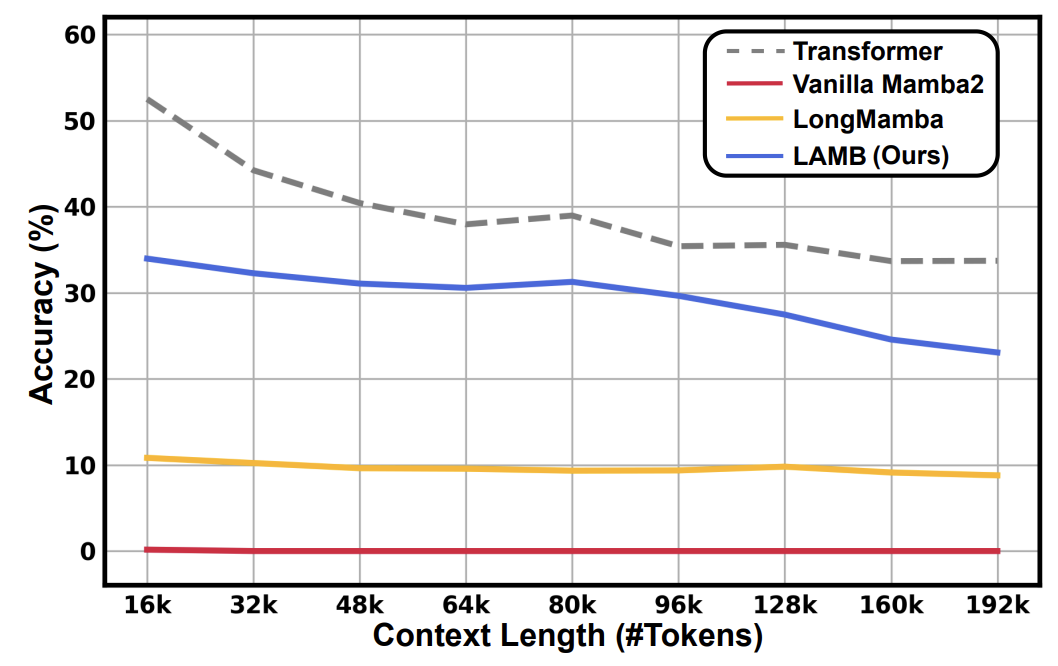

[ACL 2025] LAMB: A Training-Free Method to Enhance the Long-Context Understanding of SSMs via Attention-Guided Token Filtering

Zhifan Ye, Zheng Wang, Kejing Xia, Jihoon Hong, Leshu Li, Lexington Whalen, Cheng Wan, Yonggan Fu, Yingyan (Celine) Lin, Souvik Kundu

[Abstract] [Paper]

State space models (SSMs) achieve efficient sub-quadratic compute complexity but often exhibit significant performance drops as context length increases. Recent work attributes this deterioration to an exponential decay in hidden state memory. While token filtering has emerged as a promising remedy, its underlying rationale and limitations remain poorly understood. In this paper, we first investigate the attention patterns of Mamba to shed light on why token filtering alleviates long-context degradation. Motivated by these findings, we propose LAMB, a training-free, attention-guided token filtering strategy designed to preserve critical tokens during inference. LAMB can boost long-context performance for both pure SSMs and hybrid models, achieving up to an average improvement of 30.35% over state-of-the-art techniques on standard long-context understanding benchmarks. Our analysis and experiments reveal new insights into the interplay between attention, token selection, and memory retention, and are thus expected to inspire broader applications of token filtering in long-sequence modeling.

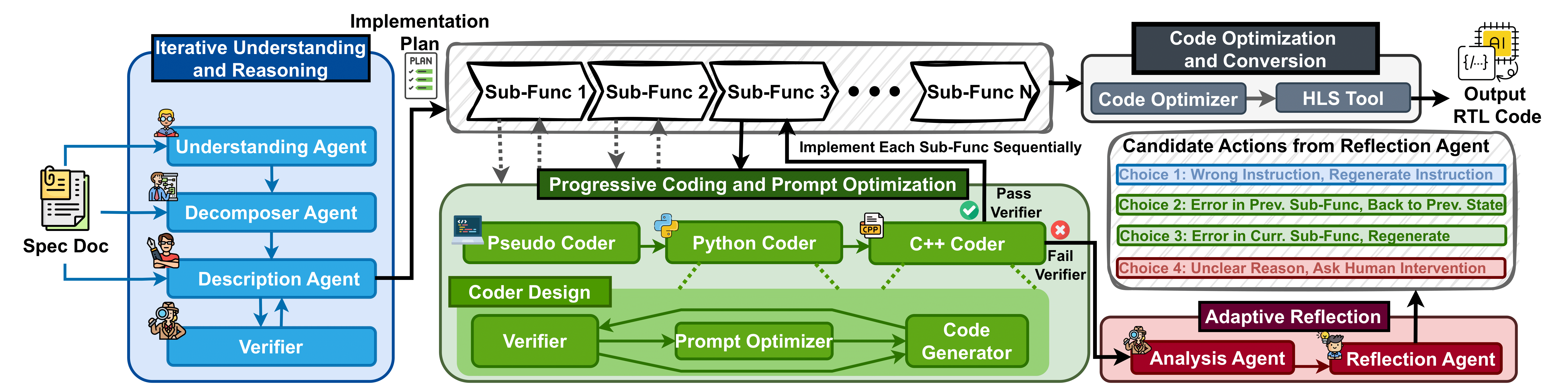

[LAD 2025] Spec2RTL-Agent: Automated Hardware Code Generation from Complex Specifications Using LLM Agent Systems

Zhongzhi Yu, Mingjie Liu, Michael Zimmer, Yingyan (Celine) Lin, Yong Liu, Haoxing Ren

[Abstract] [Paper]

Despite recent progress in generating hardware RTL code with LLMs, existing solutions still suffer from a substantial gap between practical application scenarios and the requirements of real-world RTL code development. Prior approaches either focus on overly simplified hardware descriptions or depend on extensive human guidance to process complex specifications, limiting their scalability and automation potential. In this paper, we address this gap by proposing an LLM agent system, termed Spec2RTL-Agent, designed to directly process complex specification documentation and generate corresponding RTL code implementations, advancing LLM-based RTL code generation toward more realistic application settings. To achieve this goal, Spec2RTL-Agent introduces a novel multi-agent collaboration framework that integrates three key enablers: (1) a reasoning and understanding module that translates specifications into structured, step-by-step implementation plans; (2) a progressive coding and prompt optimization module that iteratively refines the code across multiple representations to enhance correctness and synthesisability for RTL conversion; and (3) an adaptive reflection module that identifies and traces the source of errors during generation, ensuring a more robust code generation flow. Instead of directly generating RTL from natural language, our system strategically generates synthesizable C++ code, which is then optimized for HLS. This agent-driven refinement ensures greater correctness and compatibility compared to naive direct RTL generation approaches. We evaluate Spec2RTL-Agent on three specification documents, showing it generates accurate RTL code with up to 75% fewer human interventions than existing methods. This highlights its role as the first fully automated multi-agent system for RTL generation from unstructured specs, reducing reliance on human effort in hardware design.

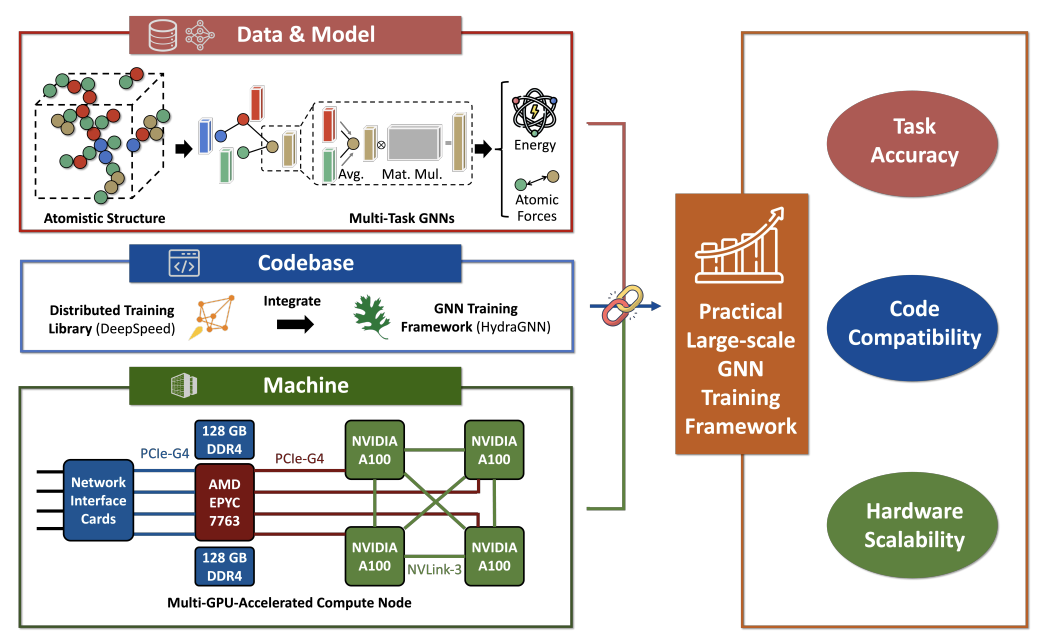

[DAC 2025] Scaling Laws of Graph Neural Networks for Atomistic Materials Modeling

Chaojian Li, Zhifan Ye, Massimiliano Lupo Pasini, Jong Youl Choi, Cheng Wan, Yingyan (Celine) Lin, Prasanna Balaprakash

[Abstract] [Paper]

Atomistic materials modeling is a critical task with wide-ranging applications, from drug discovery to materials science, where accurate predictions of the target material property can lead to significant advancements in scientific discovery. Graph Neural Networks (GNNs) represent the state-of-the-art approach for modeling atomistic material data thanks to their capacity to capture complex relational structures. While machine learning performance has historically improved with larger models and datasets, GNNs for atomistic materials modeling remain relatively small compared to large language models (LLMs), which leverage billions of parameters and terabyte-scale datasets to achieve remarkable performance in their respective domains. To address this gap, we explore the scaling limits of GNNs for atomistic materials modeling by developing a foundational model with billions of parameters, trained on extensive datasets in terabyte-scale. Our approach incorporates techniques from LLM libraries to efficiently manage large-scale data and models, enabling both effective training and deployment of these large-scale GNN models. This work addresses three fundamental questions in scaling GNNs: the potential for scaling GNN model architectures, the effect of dataset size on model accuracy, and the applicability of LLM-inspired techniques to GNN architectures. Specifically, the outcomes of this study include (1) insights into the scaling laws for GNNs, highlighting the relationship between model size, dataset volume, and accuracy, (2) a foundational GNN model optimized for atomistic materials modeling, and (3) a GNN codebase enhanced with advanced LLM-based training techniques. Our findings lay the groundwork for large-scale GNNs with billions of parameters and terabyte-scale datasets, establishing a scalable pathway for future advancements in atomistic materials modeling.

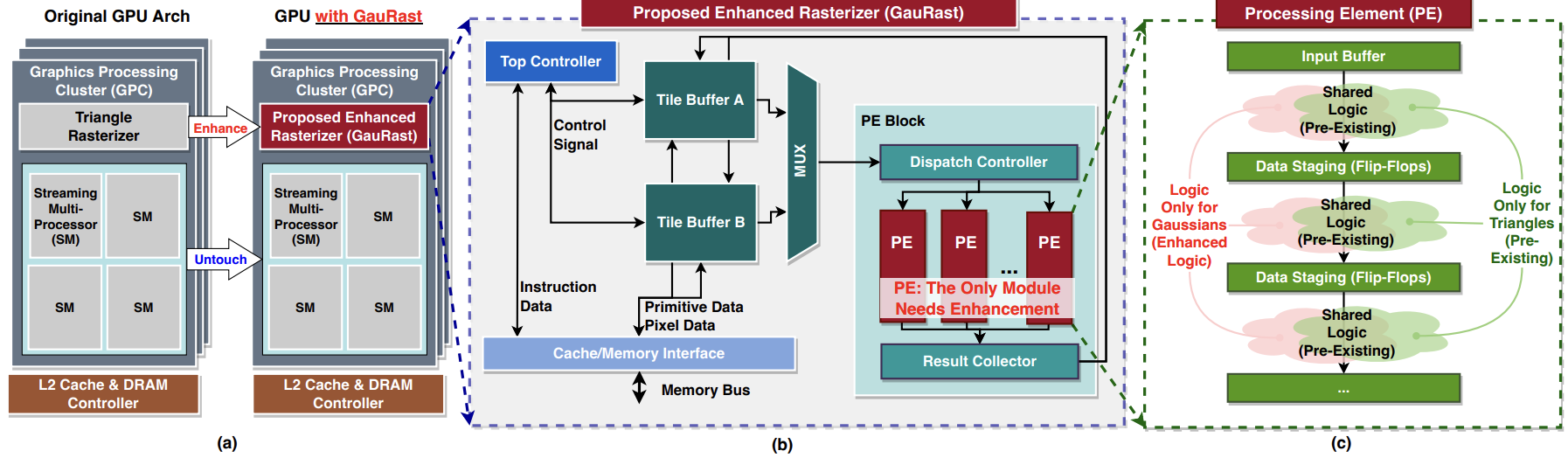

[DAC 2025] GauRast: Enhancing GPU Triangle Rasterizers to Accelerate 3D Gaussian Splatting

Sixu Li, Ben Keller, Yingyan (Celine) Lin, Brucek Khailany

[Abstract] [Paper]

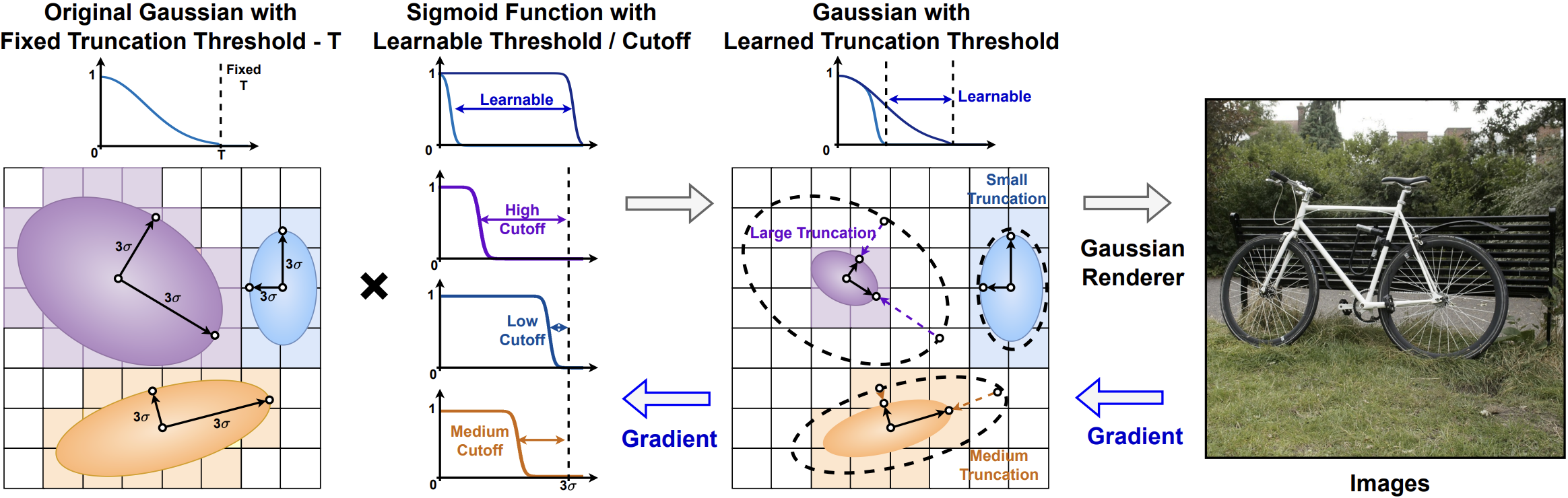

3D intelligence leverages rich 3D features and stands as a promising frontier in AI, with 3D rendering fundamental to many downstream applications. 3D Gaussian Splatting (3DGS), an emerging high-quality 3D rendering method, requires significant computation, making real-time execution on existing GPU-equipped edge devices infeasible. Previous efforts to accelerate 3DGS rely on dedicated accelerators that require substantial integration overhead and hardware costs. This work proposes an acceleration strategy that leverages the similarities between the 3DGS pipeline and the highly optimized conventional graphics pipeline in modern GPUs. Instead of developing a dedicated accelerator, we enhance existing GPU rasterizer hardware to efficiently support 3DGS operations. Our results demonstrate a 23× increase in processing speed and a 24× reduction in energy consumption, with improvements yielding 6× faster end-to-end runtime for the original 3DGS algorithm and 4× for the latest efficiency-improved pipeline, achieving 24 FPS and 46 FPS respectively. These enhancements incur only a minimal area overhead of 0.2% relative to the entire SoC chip area, underscoring the practicality and efficiency of our approach for enabling 3DGS rendering on resource-constrained platforms.

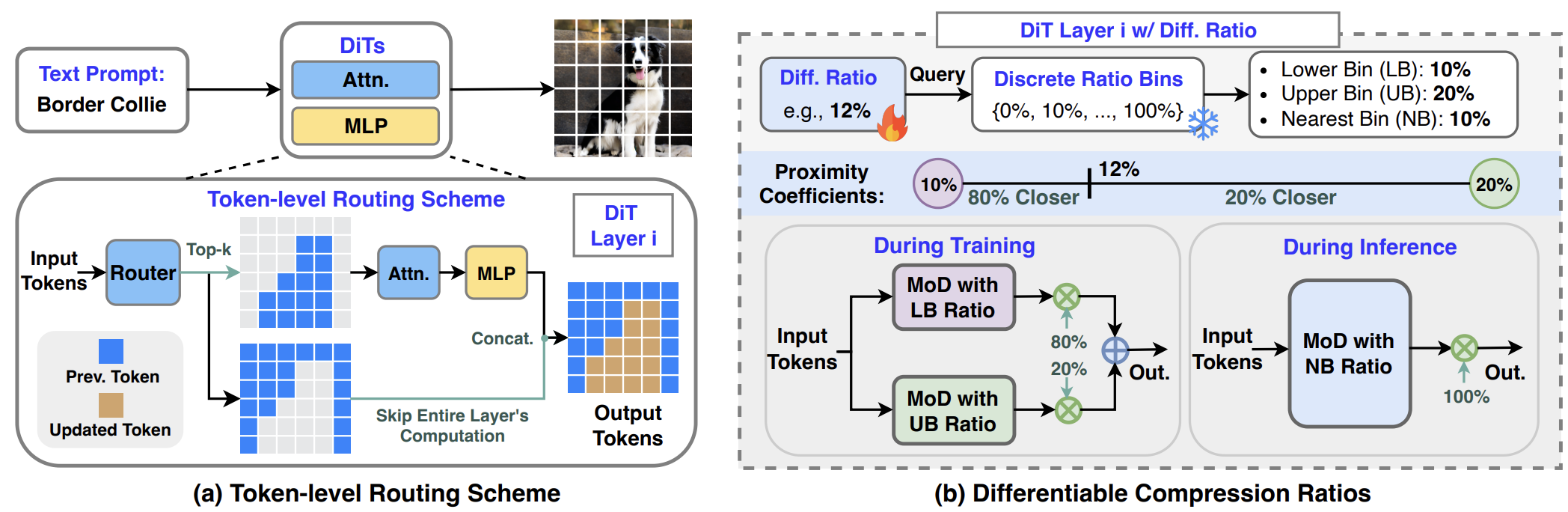

[CVPR 2025] Layer- and Timestep-Adaptive Differentiable Token Compression Ratios for Efficient Diffusion Transformers

Haoran You, Connelly Barnes, Yuqian Zhou, Yan Kang, Zhenbang Du, Wei Zhou, Lingzhi Zhang, Yotam Nitzan, Xiaoyang Liu, Zhe Lin, Eli Shechtman, Sohrab Amirghodsi, Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Project Page]

Diffusion Transformers (DiTs) have achieved state-of-the-art (SOTA) image generation quality but suffer from high latency and memory inefficiency, making them difficult to deploy on resource-constrained devices. One major efficiency bottleneck is that existing DiTs apply equal computation across all regions of an image. However, not all image tokens are equally important, and certain localized areas, such as objects, require more computation. To address this, we propose DiffCR, a dynamic DiT inference framework with differentiable compression ratios, which automatically learns to dynamically route computation across layers and timesteps for each image token, resulting in efficient DiTs. Specifically, DiffCR integrates three features: (1) A token-level routing scheme where each DiT layer includes a router that is fine-tuned jointly with model weights to predict token importance scores. In this way, unimportant tokens bypass the entire layer's computation; (2) A layer-wise differentiable ratio mechanism where different DiT layers automatically learn varying compression ratios from a zero initialization, resulting in large compression ratios in redundant layers while others remain less compressed or even uncompressed; (3) A timestep-wise differentiable ratio mechanism where each denoising timestep learns its own compression ratio. The resulting pattern shows higher ratios for noisier timesteps and lower ratios as the image becomes clearer. Extensive experiments on text-to-image and inpainting tasks show that DiffCR effectively captures dynamism across token, layer, and timestep axes, achieving superior trade-offs between generation quality and efficiency compared to prior works.

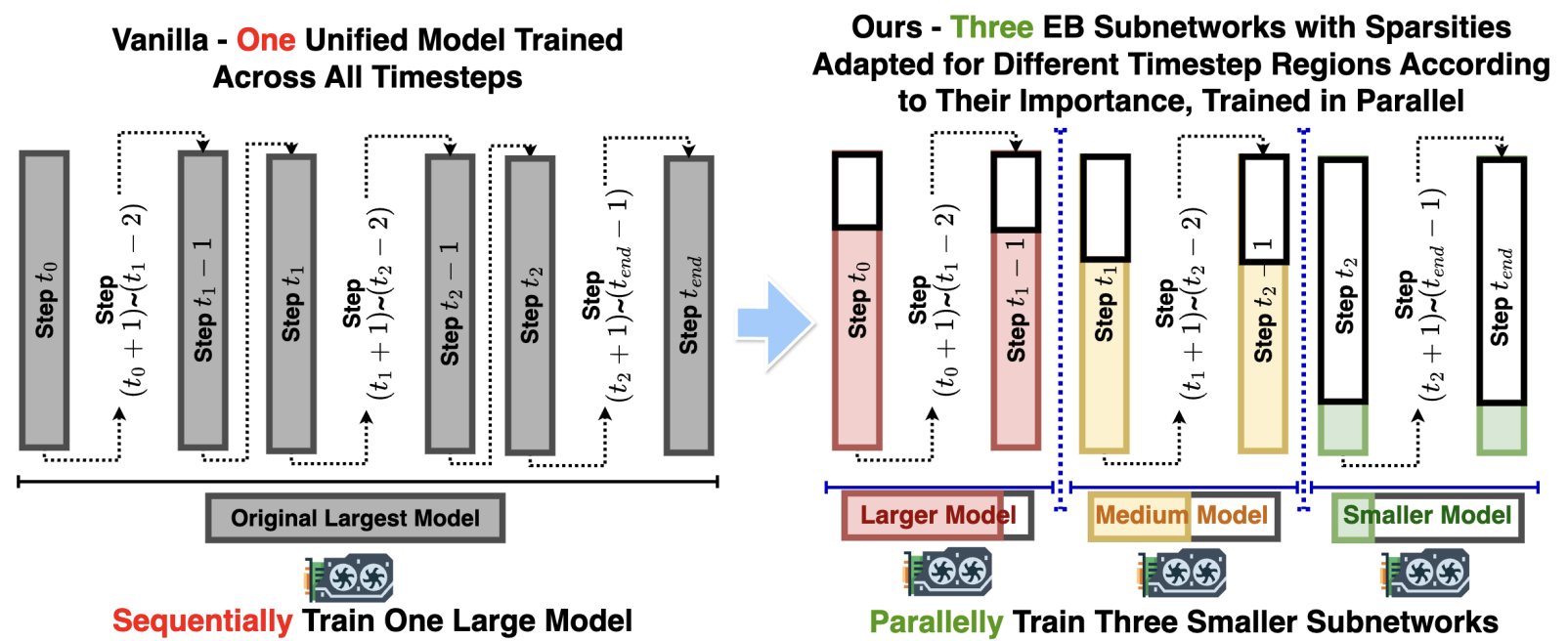

[CVPR 2025] Early-Bird Diffusion: Investigating and Leveraging Timestep-Aware Early-Bird Tickets in Diffusion Models for Efficient Training

Lexington Whalen, Zhenbang Du, Haoran You, Chaojian Li, Sixu Li, Yingyan (Celine) Lin

[Abstract] [Paper] [Code]

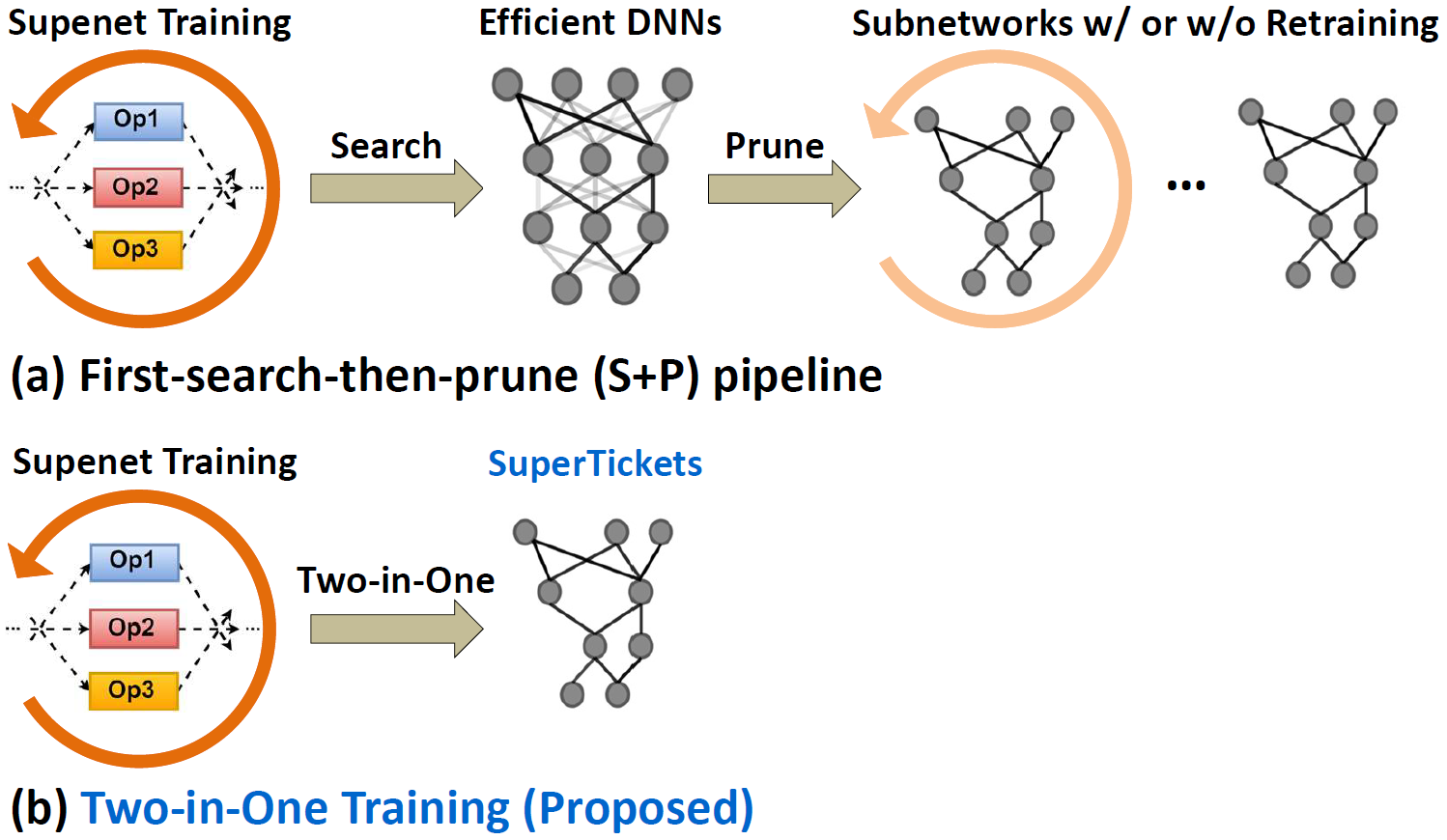

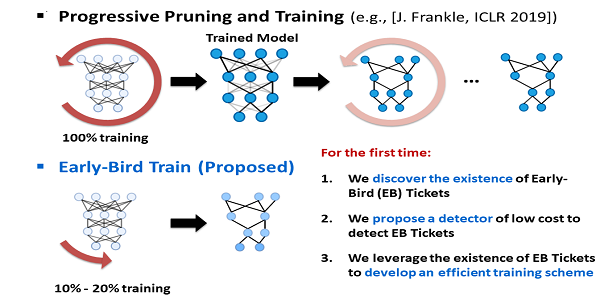

Training diffusion models (DMs) requires substantial computational resources due to multiple forward and backward passes across numerous timesteps, motivating research into efficient training techniques. In this paper, we propose EB-Diff-Train, a new efficient DM training approach that is orthogonal to other methods of accelerating DM training, by investigating and leveraging Early-Bird (EB) tickets -- sparse subnetworks that manifest early in the training process and maintain high generation quality. We first investigate the existence of traditional EB tickets in DMs, enabling competitive generation quality without fully training a dense model. Then, we delve into the concept of diffusion-dedicated EB tickets, drawing on insights from varying importance of different timestep regions. These tickets adapt their sparsity levels according to the importance of corresponding timestep regions, allowing for aggressive sparsity during non-critical regions while conserving computational resources for crucial timestep regions. Building on this, we develop an efficient DM training technique that derives timestep-aware EB tickets, trains them in parallel, and combines them during inference for image generation. Extensive experiments validate the existence of both traditional and timestep-aware EB tickets, as well as the effectiveness of our proposed EB-Diff-Train method. This approach can significantly reduce training time both spatially and temporally -- achieving 2.9× to 5.8× speedups over training unpruned dense models, and up to 10.3× faster training compared to standard train-prune-finetune pipelines -- without compromising generative quality.

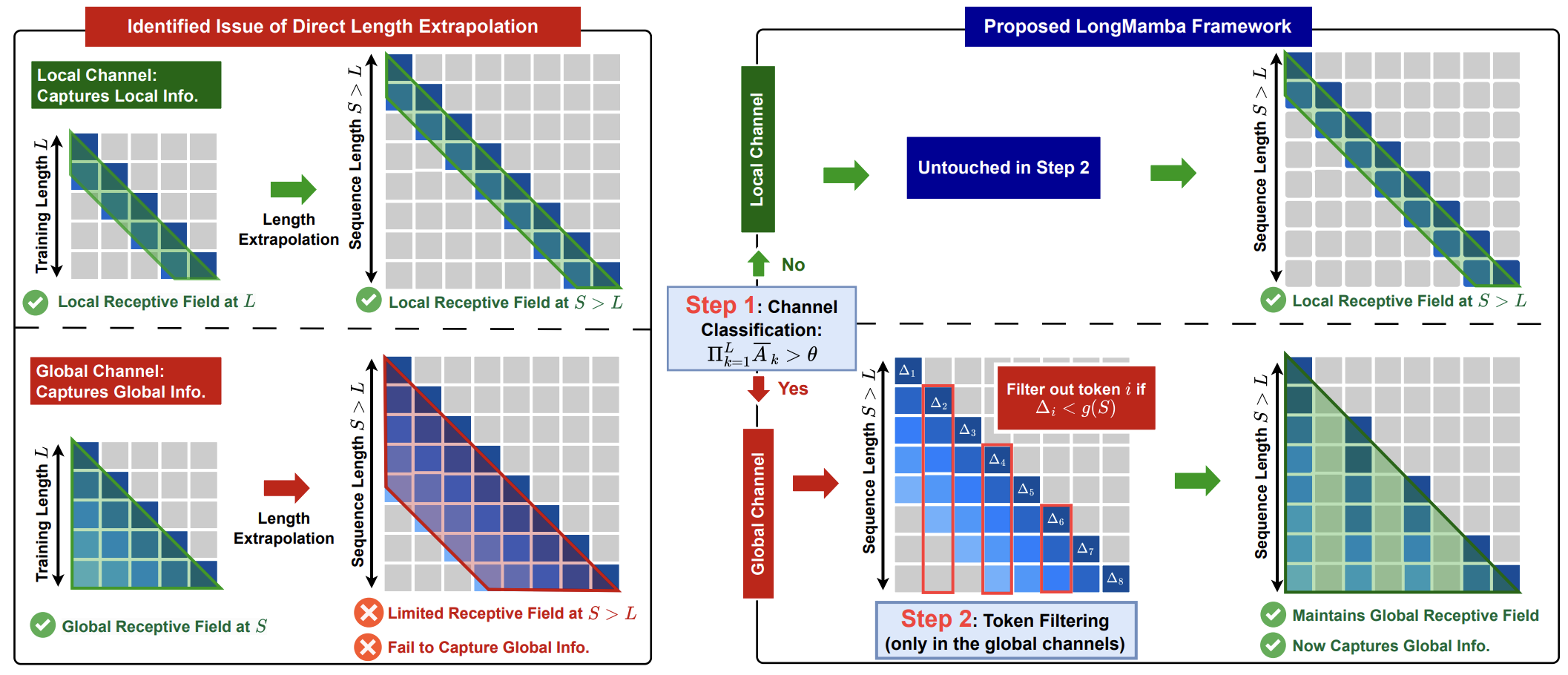

[ICLR 2025] LongMamba: Enhancing Mamba's Long-Context Capabilities via Training-Free Receptive Field Enlargement

Zhifan Ye, Kejing Xia, Yonggan Fu, Xin Dong, Jihoon Hong, Xiangchi Yuan, Shizhe Diao, Jan Kautz, Pavlo Molchanov, Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Project Page]

State space models (SSMs) have emerged as an efficient alternative to Transformer models for language modeling, offering linear computational complexity and constant memory usage as context length increases. However, despite their efficiency in handling long contexts, recent studies have shown that SSMs, such as Mamba models, generally underperform compared to Transformers in long-context understanding tasks. To address this significant shortfall and achieve both efficient and accurate long-context understanding, we propose LongMamba, a training-free technique that significantly enhances the long-context capabilities of Mamba models. LongMamba builds on our discovery that the hidden channels in Mamba can be categorized into local and global channels based on their receptive field lengths, with global channels primarily responsible for long-context capability. These global channels can become the key bottleneck as the input context lengthens. Specifically, when input lengths far exceed the training sequence length, global channels exhibit limitations in adaptively extending their receptive fields, leading to Mamba's poor long-context performance. The key idea of LongMamba is to mitigate the hidden state memory decay in these global channels by preventing the accumulation of unimportant tokens in their memory. This is achieved by first identifying critical tokens in the global channels and then applying token filtering to accumulate only those critical tokens. Through extensive benchmarking across synthetic and real-world long-context scenarios, LongMamba sets a new standard for Mamba's long-context performance, significantly extending its operational range without requiring additional training.
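The memory-decay argument in this abstract can be illustrated with a toy scalar recurrence. This is only a sketch under stated assumptions, not the LongMamba implementation: the function `scan_channel`, the decay constant `a`, and the use of the step size as the importance score (`min_step`) are all hypothetical choices made for illustration.

```python
import math

def scan_channel(step_sizes, inputs, a=-1.0, min_step=None):
    """Toy recurrence h_t = exp(step_t * a) * h_{t-1} + step_t * x_t.

    If min_step is set, tokens with step_t < min_step are filtered out:
    the state is left untouched, so it neither decays nor absorbs them.
    (Using the step size as the importance score is an assumption.)
    """
    h = 0.0
    for step, x in zip(step_sizes, inputs):
        if min_step is not None and step < min_step:
            continue  # filtered token: no decay, no update
        h = math.exp(step * a) * h + step * x
    return h

# One important token followed by 200 unimportant ones.
steps = [1.0] + [0.05] * 200
values = [1.0] + [0.0] * 200

unfiltered = scan_channel(steps, values)             # early token decays away
filtered = scan_channel(steps, values, min_step=0.1)  # early token is retained
```

Without filtering, each unimportant token multiplies the state by a factor below one, so the contribution of the first token shrinks exponentially with sequence length. With filtering, the state skips those updates and the first token survives intact.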

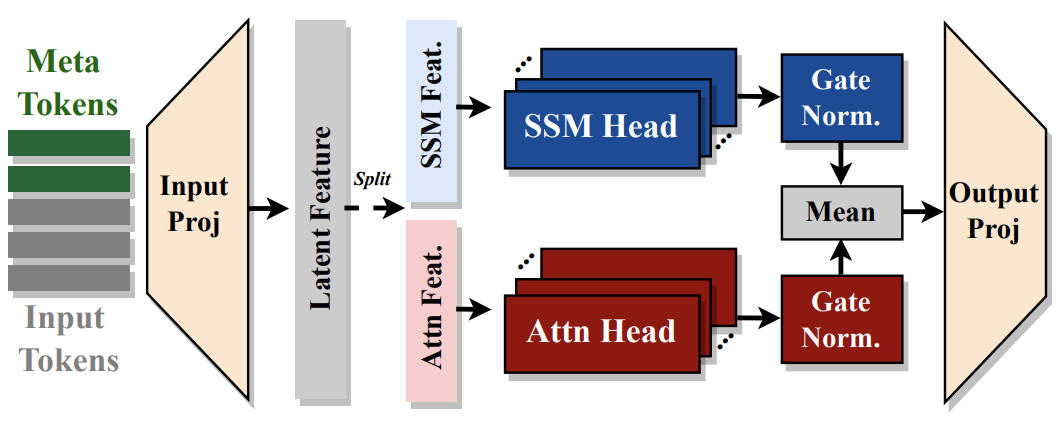

[ICLR 2025] Hymba: A Hybrid-head Architecture for Small Language Models

Xin Dong, Yonggan Fu, Shizhe Diao, Wonmin Byeon, Zijia Chen, Ameya Sunil Mahabaleshwarkar, Shih-Yang Liu, Matthijs Van Keirsbilck, Min-Hung Chen, Yoshi Suhara, Yingyan (Celine) Lin, Jan Kautz, Pavlo Molchanov

[Abstract] [Paper] [Code]

We propose Hymba, a family of small language models featuring a hybrid-head parallel architecture that integrates transformer attention mechanisms with state space models (SSMs) for enhanced efficiency. Attention heads provide high-resolution recall, while SSM heads enable efficient context summarization. Additionally, we introduce learnable meta tokens that are prepended to prompts, storing critical information and alleviating the “forced-to-attend” burden associated with attention mechanisms. This model is further optimized by incorporating cross-layer key-value (KV) sharing and partial sliding window attention, resulting in a compact cache size. During development, we conducted a controlled study comparing various architectures under identical settings and observed significant advantages of our proposed architecture. Notably, Hymba achieves state-of-the-art results for small LMs: Our Hymba-1.5B-Base model surpasses all sub-2B public models in performance and even outperforms Llama-3.2-3B with 1.32% higher average accuracy, an 11.67× cache size reduction, and 3.49× throughput.

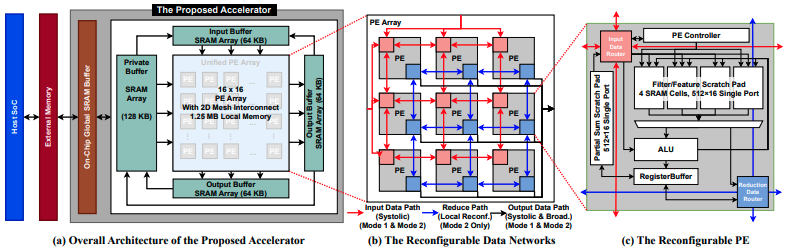

Recent advancements in neural rendering technologies and their supporting devices have paved the way for immersive 3D experiences, significantly transforming human interaction with intelligent devices across diverse applications. However, achieving the desired real-time rendering speeds for immersive interactions is still hindered by (1) the lack of a universal algorithmic solution for different application scenarios and (2) the dedication of existing devices or accelerators to merely specific rendering pipelines. To overcome this challenge, we have developed a unified neural rendering accelerator that caters to a wide array of typical neural rendering pipelines, enabling real-time and on-device rendering across different applications while maintaining both efficiency and compatibility. Our accelerator design is based on the insight that, although neural rendering pipelines vary and their algorithm designs are continually evolving, they typically share common operators, predominantly executing similar workloads. Building on this insight, we propose a reconfigurable hardware architecture that can dynamically adjust dataflow to align with specific rendering metric requirements for diverse applications, effectively supporting both typical and the latest hybrid rendering pipelines. Benchmarking experiments and ablation studies on both synthetic and real-world scenes demonstrate the effectiveness of the proposed accelerator. The proposed unified accelerator stands out as the first solution capable of achieving real-time neural rendering across varied representative pipelines on edge devices, potentially paving the way for the next generation of neural graphics applications.

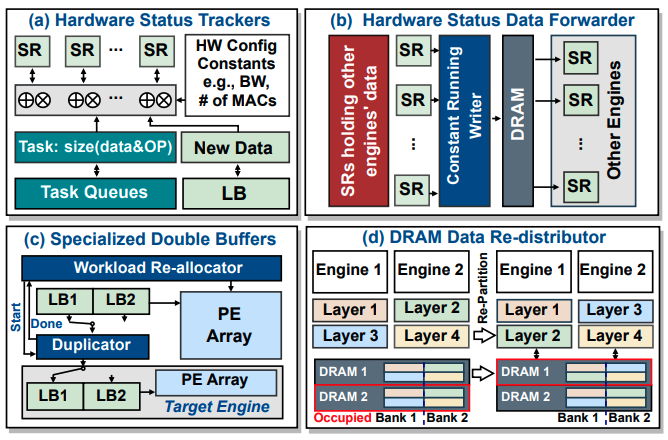

Real-time Codec Avatars, which employ deep generative models for 3D reconstruction of human features, are crucial for immersive telepresence in AR/VR environments. However, deploying these avatars in real-time on AR/VR headsets is challenging due to the inability of existing devices to achieve satisfying performance within stringent hardware resource constraints. To address these challenges, we introduce Re-CATA, an innovative full-stack and flexible Codec Avatar accelerator design framework. Re-CATA is designed to deliver real-time throughput (greater than 120 FPS) for the complete Codec Avatar processing pipeline within an edge-level power budget of 5 W under FPGA prototyping. Our approach begins by abstracting the operation mapping and scheduling challenges inherent in Codec Avatars, which require both centralized and distributed processing to handle dynamically changing workloads. We propose a novel hardware resource and workload partitioning scheme optimized for these fluctuating demands. To complement this, we introduce an agile runtime scheduling system for efficient workload reallocation among computing units as needed, recognizing the limitations of static partitioning in rapidly evolving workload scenarios. Furthermore, our micro-architecture design incorporates unified computing modules and efficient hardware peripherals, enabling seamless workload balancing across the Codec Avatar processing pipeline. We evaluate the Re-CATA accelerators via on-board FPGA prototyping, comparing them to various baselines, including commercial AR/VR SoCs and academic accelerators. This evaluation demonstrates a maximum speedup of up to 5.95× under similar settings.

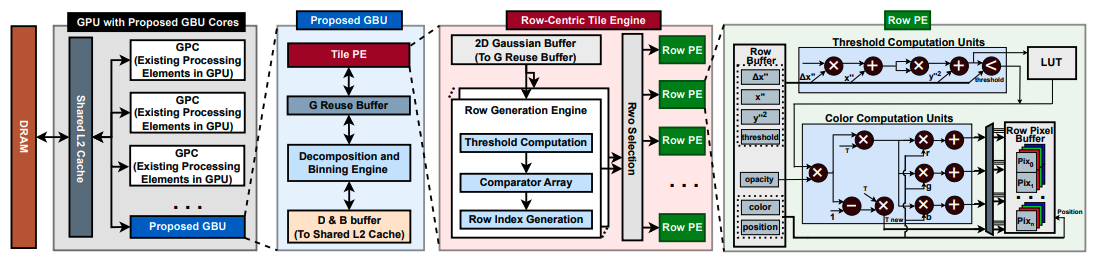

The rapidly advancing field of Augmented and Virtual Reality (AR/VR) demands real-time, photorealistic rendering on resource-constrained platforms. 3D Gaussian Splatting, delivering state-of-the-art (SOTA) performance in rendering efficiency and quality, has emerged as a promising solution across a broad spectrum of AR/VR applications. However, despite its effectiveness on high-end GPUs, it struggles on edge systems like the Jetson Orin NX Edge GPU, achieving only 7-17 FPS -- well below the over 60 FPS standard required for truly immersive AR/VR experiences. Addressing this challenge, we perform a comprehensive analysis of Gaussian-based AR/VR applications and identify the Gaussian Blending Stage, which intensively calculates each Gaussian's contribution at every pixel, as the primary bottleneck. In response, we propose a Gaussian Blending Unit (GBU), an edge GPU plug-in module for real-time rendering in AR/VR applications. Notably, our GBU can be seamlessly integrated into conventional edge GPUs and collaboratively supports a wide range of AR/VR applications. Specifically, GBU incorporates an intra-row sequential shading (IRSS) dataflow that shades each row of pixels sequentially from left to right, utilizing a two-step coordinate transformation. When directly deployed on a GPU, the proposed dataflow achieves a non-trivial 1.72x speedup on real-world static scenes, though it still falls short of real-time rendering performance. Recognizing the limited compute utilization in the GPU-based implementation, GBU enhances rendering speed with a dedicated rendering engine that balances the workload across rows by aggregating computations from multiple Gaussians. Experiments across representative AR/VR applications demonstrate that our GBU provides a unified solution for on-device real-time rendering while maintaining SOTA rendering quality.

[NeurIPS 2024] 3D Gaussian Rendering Can Be Sparser: Efficient Rendering via Learned Fragment Pruning

Zhifan Ye, Chenxi Wan, Chaojian Li, Jihoon Hong, Sixu Li, Leshu Li, Yongan Zhang, Yingyan (Celine) Lin

[Abstract] [Paper] [Code]

3D Gaussian splatting has recently emerged as a promising technique for novel view synthesis from sparse image sets, yet comes at the cost of requiring millions of 3D Gaussian primitives to reconstruct each 3D scene. This largely limits its application to resource-constrained devices and applications. Despite advances in Gaussian pruning techniques that aim to remove individual 3D Gaussian primitives, the significant reduction in primitives often fails to translate into commensurate increases in rendering speed, impeding efficiency and practical deployment. We identify that this discrepancy arises due to the overlooked impact of fragment count per Gaussian (i.e., the number of pixels each Gaussian is projected onto). To bridge this gap and meet the growing demands for efficient on-device 3D Gaussian rendering, we propose fragment pruning, an orthogonal enhancement to existing pruning methods that can significantly accelerate rendering by selectively pruning fragments within each Gaussian. Our pruning framework dynamically optimizes the pruning threshold for each Gaussian, markedly improving rendering speed and quality. Extensive experiments in both static and dynamic scenes validate the effectiveness of our approach. For instance, by integrating our fragment pruning technique with state-of-the-art Gaussian pruning methods, we achieve up to a 1.71× speedup on an edge GPU device, the Jetson Orin NX, and enhance rendering quality by an average of 0.16 PSNR on the Tanks&Temples dataset. Our code is available at https://github.com/GATECH-EIC/Fragment-Pruning.
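To make the notion of fragment count concrete, the following toy sketch counts the pixels a single projected Gaussian contributes to under different opacity thresholds. It is an illustration only, not the released code: the function `count_fragments`, the isotropic 2D footprint, the 32×32 grid, and the hand-picked threshold of 0.1 are assumptions; the paper learns a threshold per Gaussian.

```python
import math

def count_fragments(center, sigma, opacity, threshold, width=32, height=32):
    """Count pixels where an isotropic 2D Gaussian's alpha reaches the threshold.

    alpha(x, y) = opacity * exp(-0.5 * d^2 / sigma^2); pixels below the
    threshold are treated as pruned fragments.
    """
    cx, cy = center
    kept = 0
    for y in range(height):
        for x in range(width):
            d2 = (x - cx) ** 2 + (y - cy) ** 2
            alpha = opacity * math.exp(-0.5 * d2 / sigma ** 2)
            if alpha >= threshold:
                kept += 1
    return kept

# A loose global cutoff versus a stricter (hypothetical) per-Gaussian cutoff.
baseline = count_fragments((16, 16), 4.0, 0.8, threshold=1 / 255)
pruned = count_fragments((16, 16), 4.0, 0.8, threshold=0.1)
```

Raising the threshold shrinks the footprint of the Gaussian, so fewer fragments are blended even though the number of primitives is unchanged, which is why fragment pruning is orthogonal to removing whole Gaussians.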

[NeurIPS 2024] AmoebaLLM: Constructing Any-Shape Large Language Models for Efficient and Instant Deployment

Yonggan Fu, Zhongzhi Yu, Junwei Li, Jiayi Qian, Yongan Zhang, Xiangchi Yuan, Dachuan Shi, Roman Yakunin, Yingyan (Celine) Lin

[Abstract] [Paper]

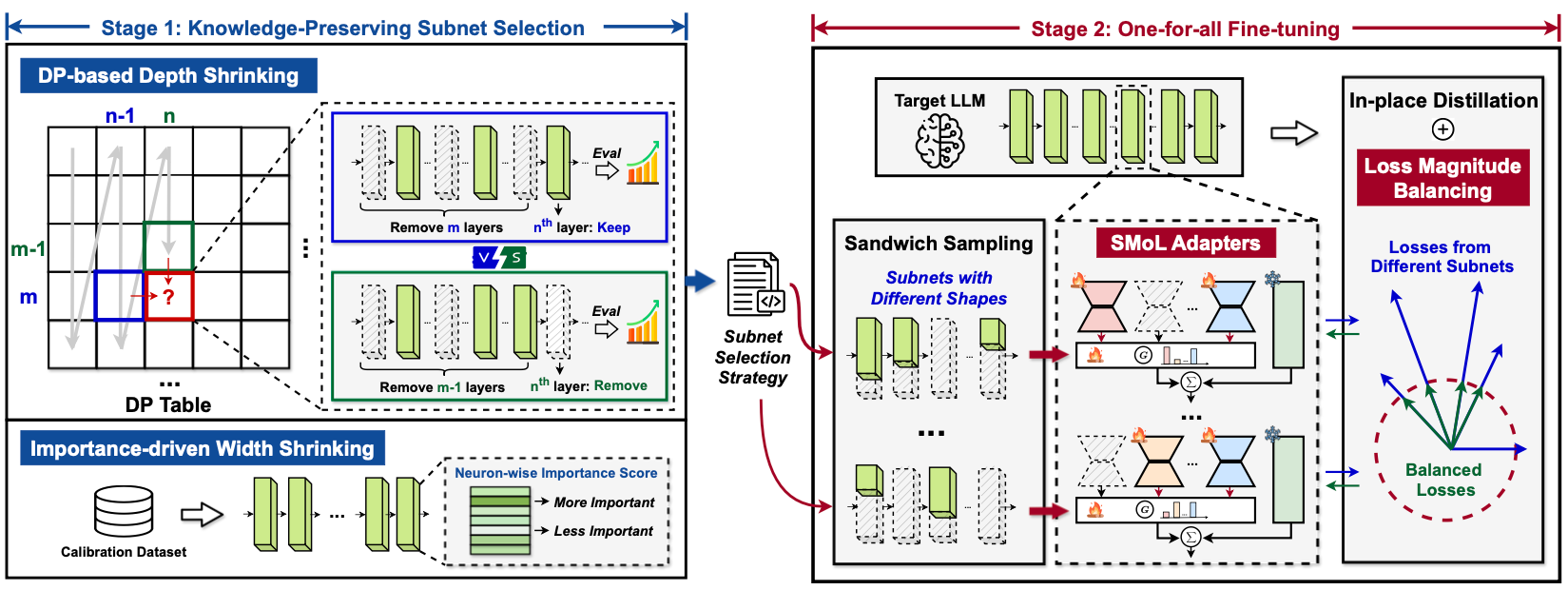

Motivated by the transformative capabilities of large language models (LLMs) across various natural language tasks, there has been a growing demand to deploy these models effectively across diverse real-world applications and platforms. However, the challenge of efficiently deploying LLMs has become increasingly pronounced due to the varying application-specific performance requirements and the rapid evolution of computational platforms, which feature diverse resource constraints and deployment flows. These varying requirements necessitate LLMs that can adapt their structures (depth and width) for optimal efficiency across different platforms and application specifications. To address this critical gap, we propose AmoebaLLM, a novel framework designed to enable the instant derivation of LLM subnets of arbitrary shapes, which achieve the accuracy-efficiency frontier and can be extracted immediately after a one-time fine-tuning. In this way, AmoebaLLM significantly facilitates rapid deployment tailored to various platforms and applications. Specifically, AmoebaLLM integrates three innovative components: (1) a knowledge-preserving subnet selection strategy that features a dynamic-programming approach for depth shrinking and an importance-driven method for width shrinking; (2) a shape-aware mixture of LoRAs to mitigate gradient conflicts among subnets during fine-tuning; and (3) an in-place distillation scheme with loss-magnitude balancing as the fine-tuning objective. Extensive experiments validate that AmoebaLLM not only sets new standards in LLM adaptability but also successfully delivers subnets that achieve state-of-the-art trade-offs between accuracy and efficiency.

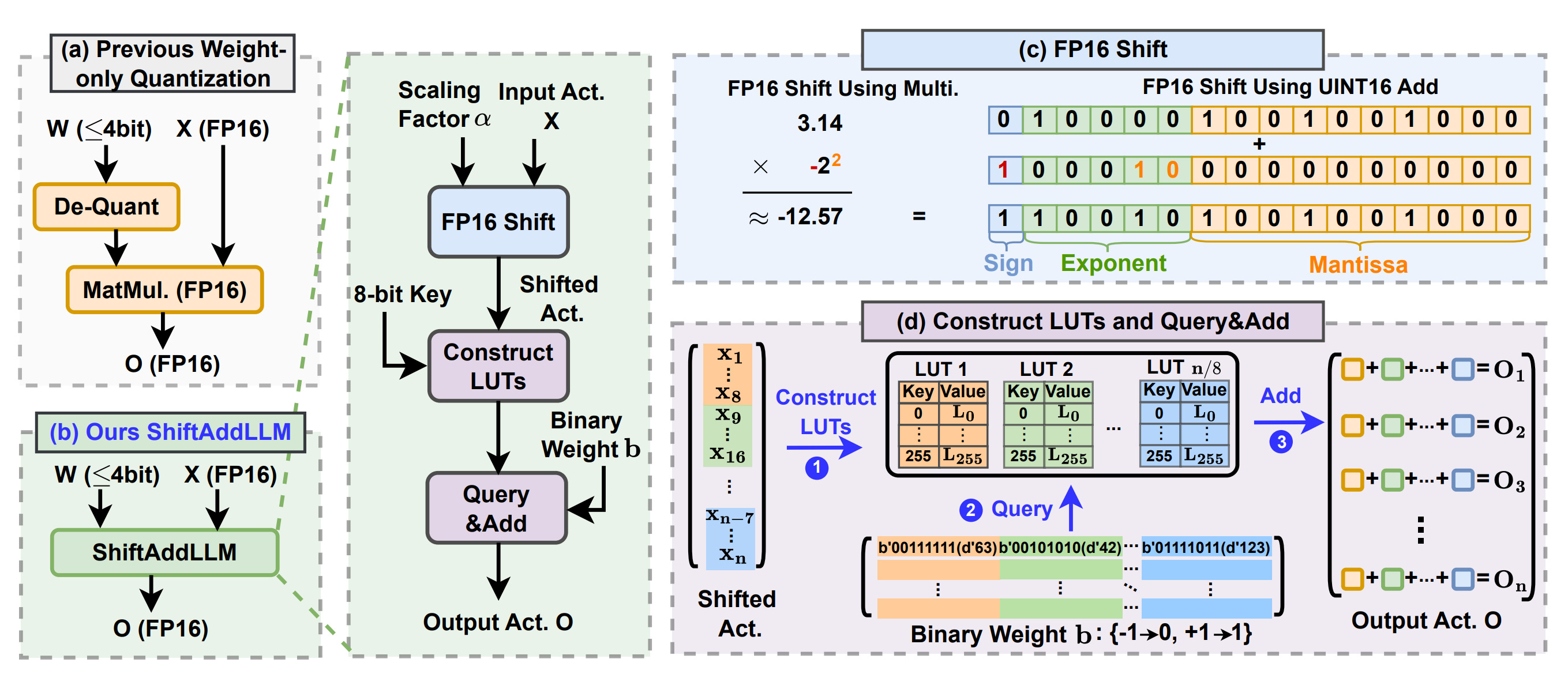

[NeurIPS 2024] ShiftAddLLM: Accelerating Pretrained LLMs via Post-Training Multiplication-Less Reparameterization

Haoran You, Yipin Guo, Yichao Fu, Wei Zhou, Huihong Shi, Xiaofan Zhang, Souvik Kundu, Amir Yazdanbakhsh, Yingyan (Celine) Lin

[Abstract] [Paper]

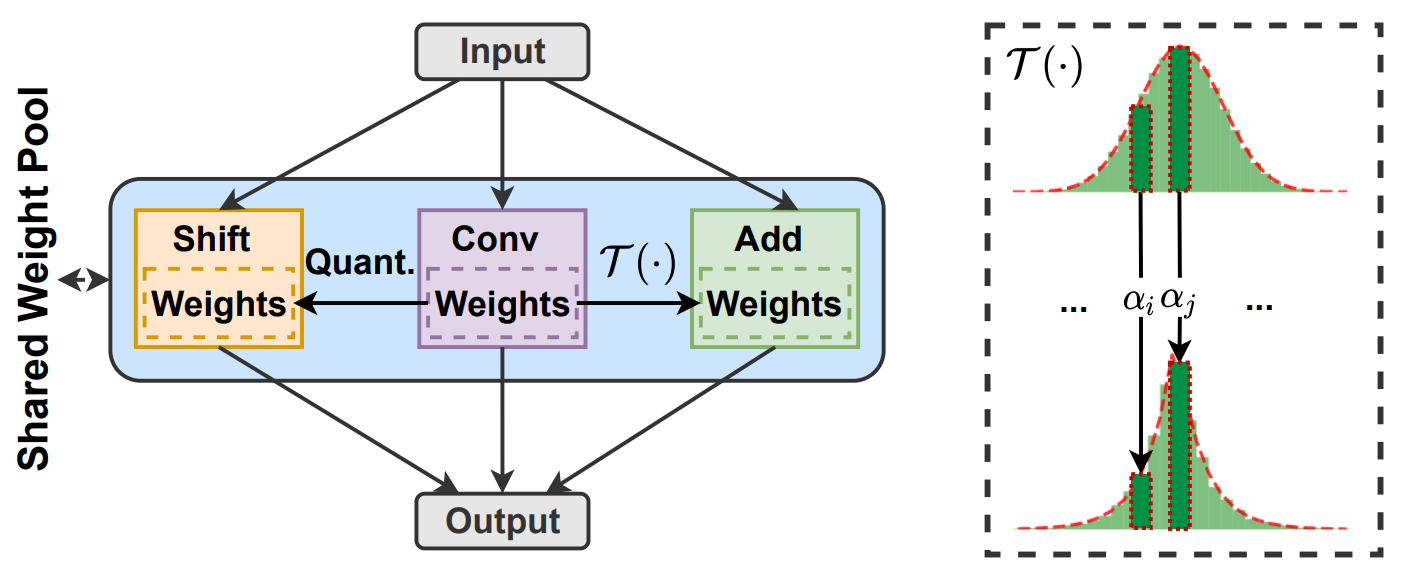

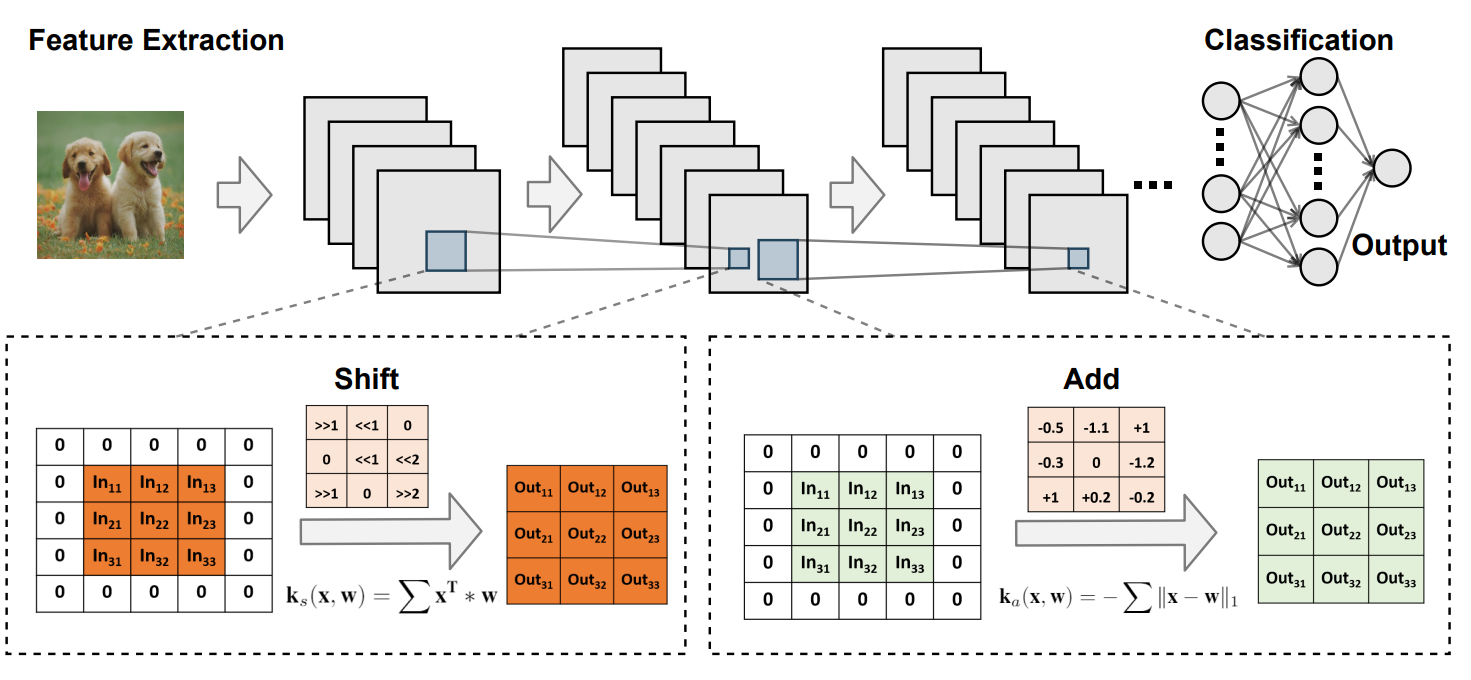

Large language models (LLMs) have shown impressive performance on language tasks but face challenges when deployed on resource-constrained devices due to their extensive parameters and reliance on dense multiplications, resulting in high memory demands and significant latency bottlenecks. Shift and add reparameterization offers a promising solution by replacing costly multiplications with hardware-friendly primitives in both the attention and multi-layer perceptron (MLP) layers of an LLM. However, current reparameterization techniques necessitate training from scratch or full parameter fine-tuning to restore accuracy, which is often resource-intensive for LLMs. To this end, we propose accelerating pretrained LLMs through a post-training shift and add reparameterization to yield efficient multiplication-less LLMs, dubbed ShiftAddLLM. Specifically, we quantize and reparameterize each weight matrix in LLMs into a series of binary matrices and associated scaling factor matrices. Each scaling factor, corresponding to a group of weights, is quantized to powers of two. This reparameterization transforms the original multiplications between weights and activations into two steps: (1) bitwise shifts between activations and scaling factors, and (2) queries and additions of these results according to the binary matrices. Further, to reduce the accuracy drop, we present a multi-objective optimization method to minimize both weight and output activation reparameterization errors. Finally, based on the observation of varying sensitivity across layers to reparameterization, we develop an automated bit allocation strategy to further reduce memory usage and latency. Experiments on five LLM families and eight tasks consistently validate the effectiveness of ShiftAddLLM, achieving average perplexity improvements of 5.6 and 22.7 at comparable or even lower latency compared to the most competitive quantized LLMs at 3 and 2 bits, respectively, and more than 80% memory and energy reductions over the original LLMs.
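The reparameterization described above can be sketched for a single weight row. This is a simplified illustration, not the ShiftAddLLM implementation: the greedy residual binarization, the helper names `binarize_row` and `shift_add_dot`, one scale per row (rather than per group), and applying the shift after the adds are all assumptions made to keep the example short. `math.ldexp` stands in for a hardware bit shift.

```python
import math

def binarize_row(weights, num_bits=3):
    """Greedy residual binarization: w ~ sum_i 2**e_i * b_i with b_i in {-1, +1}^n.

    Each scale is the mean absolute residual rounded to a power of two,
    so only its integer exponent e_i needs to be stored.
    """
    residual = list(weights)
    exps, signs = [], []
    for _ in range(num_bits):
        mean_abs = sum(abs(r) for r in residual) / len(residual)
        if mean_abs == 0:
            break  # row is already represented exactly
        e = round(math.log2(mean_abs))
        b = [1 if r >= 0 else -1 for r in residual]
        exps.append(e)
        signs.append(b)
        residual = [r - math.ldexp(1.0, e) * s for r, s in zip(residual, b)]
    return exps, signs

def shift_add_dot(activations, exps, signs):
    """Dot product with the binarized row using only adds and shifts."""
    total = 0.0
    for e, b in zip(exps, signs):
        acc = 0.0
        for a, s in zip(activations, b):
            acc += a if s > 0 else -a  # signed add, no multiplication
        total += math.ldexp(acc, e)    # scale by 2**e, i.e. a shift
    return total

exps, signs = binarize_row([0.5, -0.5, 0.5, -0.5])
result = shift_add_dot([1.0, 2.0, 3.0, 4.0], exps, signs)  # -1.0
```

Because every scaling factor is a power of two, multiplying by it reduces to adjusting an exponent, and the binary matrices turn the remaining work into signed additions. More binary matrices per row lower the approximation error at the cost of more adds.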

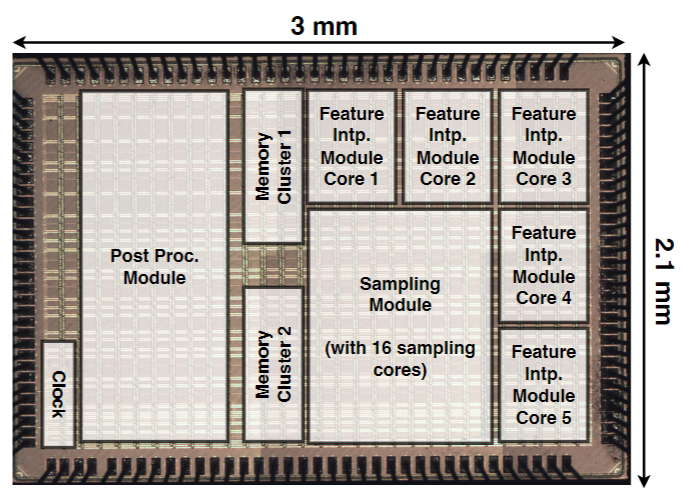

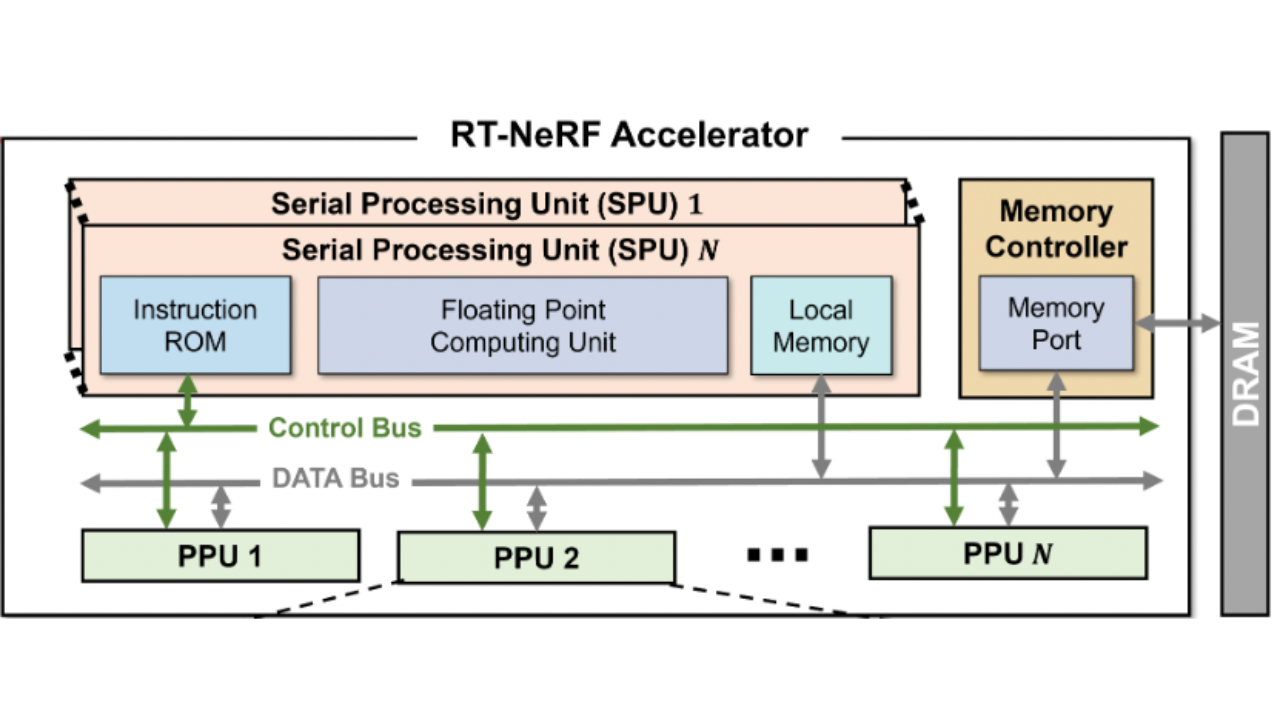

Recent breakthroughs in Neural Radiance Field (NeRF) based 3D reconstruction and rendering have spurred the possibility of immersive experiences in augmented and virtual reality (AR/VR). However, current NeRF acceleration techniques are still inadequate for real-world AR/VR applications due to: 1) the lack of end-to-end pipeline acceleration support, which causes impractical off-chip bandwidth demands for edge devices, and 2) limited scalability in handling large-scale scenes. To tackle these limitations, we have developed an end-to-end, scalable 3D acceleration framework called Fusion-3D, capable of instant scene reconstruction and real-time rendering. Fusion-3D achieves these goals through two key innovations: 1) an optimized end-to-end processor for all three stages of the NeRF pipeline, featuring dynamic scheduling and hardware-aware sampling in the first stage, and a shared, reconfigurable pipeline with mixed-precision arithmetic in the second and third stages; 2) a multi-chip architecture for handling large-scale scenes, integrating a three-level hierarchical tiling scheme that minimizes inter-chip communication and balances workloads across chips. Extensive experiments validate the effectiveness of Fusion-3D in facilitating real-time, energy-efficient 3D reconstruction and rendering. Specifically, we tape out a prototype chip in 28nm CMOS to evaluate the effectiveness of the proposed end-to-end processor. Extensive simulation based on the on-silicon measurements demonstrates a 2.5× and 6× throughput improvement in training and inference, respectively, compared to state-of-the-art accelerators. Furthermore, to assess the multi-chip architecture, we integrate four chips into a single PCB as a prototype. Further simulation results show that the multi-chip system achieves a 7.3× and 6.5× throughput improvement in training and inference, respectively, over the Nvidia 2080Ti GPU. To the best of our knowledge, Fusion-3D is the first to achieve both instant (≤ 2 seconds) 3D reconstruction and real-time (≥ 30 FPS) rendering, while only requiring the bandwidth of the most commonly used USB port (0.625 GB/s, 5 Gbps) in edge devices for off-chip communication.
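The two bandwidth figures quoted in the abstract above are the same quantity in different units: 5 Gbps divided by 8 bits per byte gives 0.625 GB/s. A minimal check of that conversion follows; the function name is illustrative, and the calculation uses the raw link rate only, ignoring any protocol or encoding overhead.

```python
def gbps_to_gigabytes_per_second(gigabits_per_second: float) -> float:
    """Convert a raw link rate in Gbit/s to GByte/s (8 bits per byte)."""
    return gigabits_per_second / 8.0


# Raw rate of a 5 Gbps USB link, expressed in GB/s.
usb_rate = gbps_to_gigabytes_per_second(5.0)
print(usb_rate)  # 0.625
```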

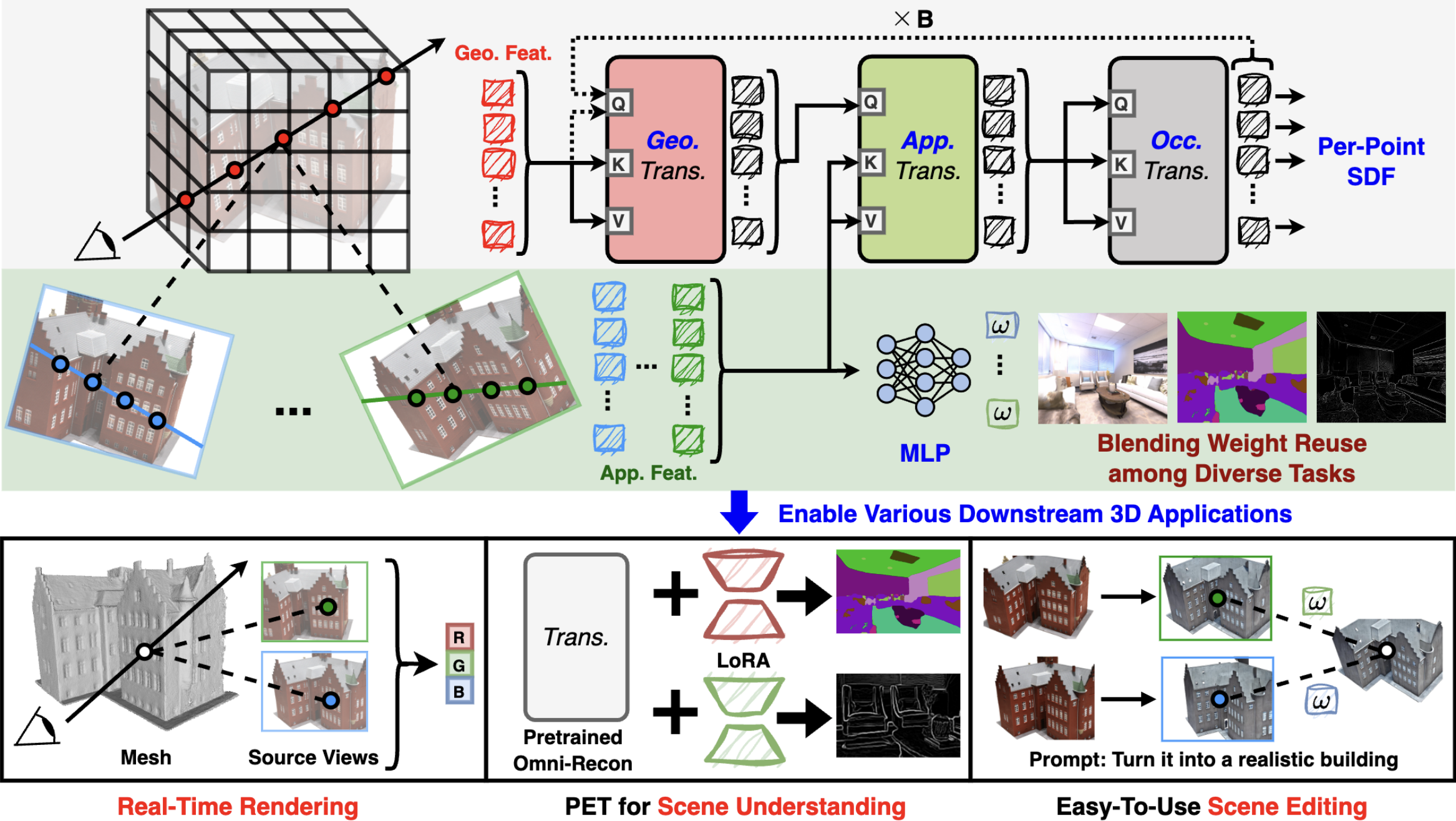

Recent breakthroughs in Neural Radiance Fields (NeRFs) have sparked significant demand for their integration into real-world 3D applications. However, the varied functionalities required by different 3D applications often necessitate diverse NeRF models with various pipelines, leading to tedious NeRF training for each target task and cumbersome trial-and-error experiments. Drawing inspiration from the generalization capability and adaptability of emerging foundation models, our work aims to develop one general-purpose NeRF for handling diverse 3D tasks. We achieve this by proposing a framework called Omni-Recon, which is capable of (1) generalizable 3D reconstruction and zero-shot multitask scene understanding, and (2) adaptability to diverse downstream 3D applications such as real-time rendering and scene editing. Our key insight is that an image-based rendering pipeline, with accurate geometry and appearance estimation, can lift 2D image features into their 3D counterparts, thus extending widely explored 2D tasks to the 3D world in a generalizable manner. Specifically, our Omni-Recon features a general-purpose NeRF model using image-based rendering with two decoupled branches: one complex transformer-based branch that progressively fuses geometry and appearance features for accurate geometry estimation, and one lightweight branch for predicting blending weights of source views. This design achieves state-of-the-art (SOTA) generalizable 3D surface reconstruction quality with blending weights reusable across diverse tasks for zero-shot multitask scene understanding. In addition, it can enable real-time rendering after baking the complex geometry branch into meshes, swift adaptation to achieve SOTA generalizable 3D understanding performance, and seamless integration with 2D diffusion models for text-guided 3D editing.

[TCASAI 2024]

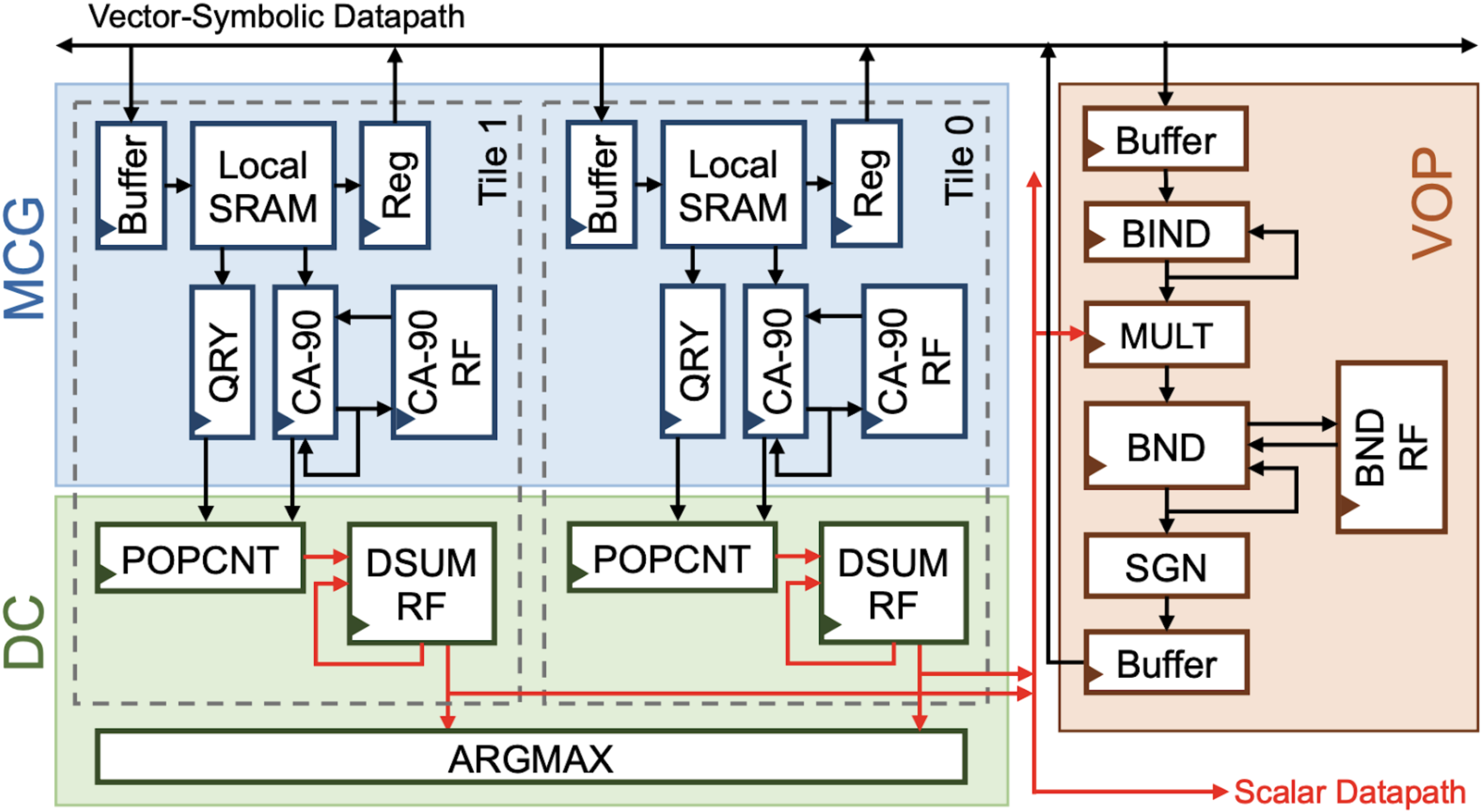

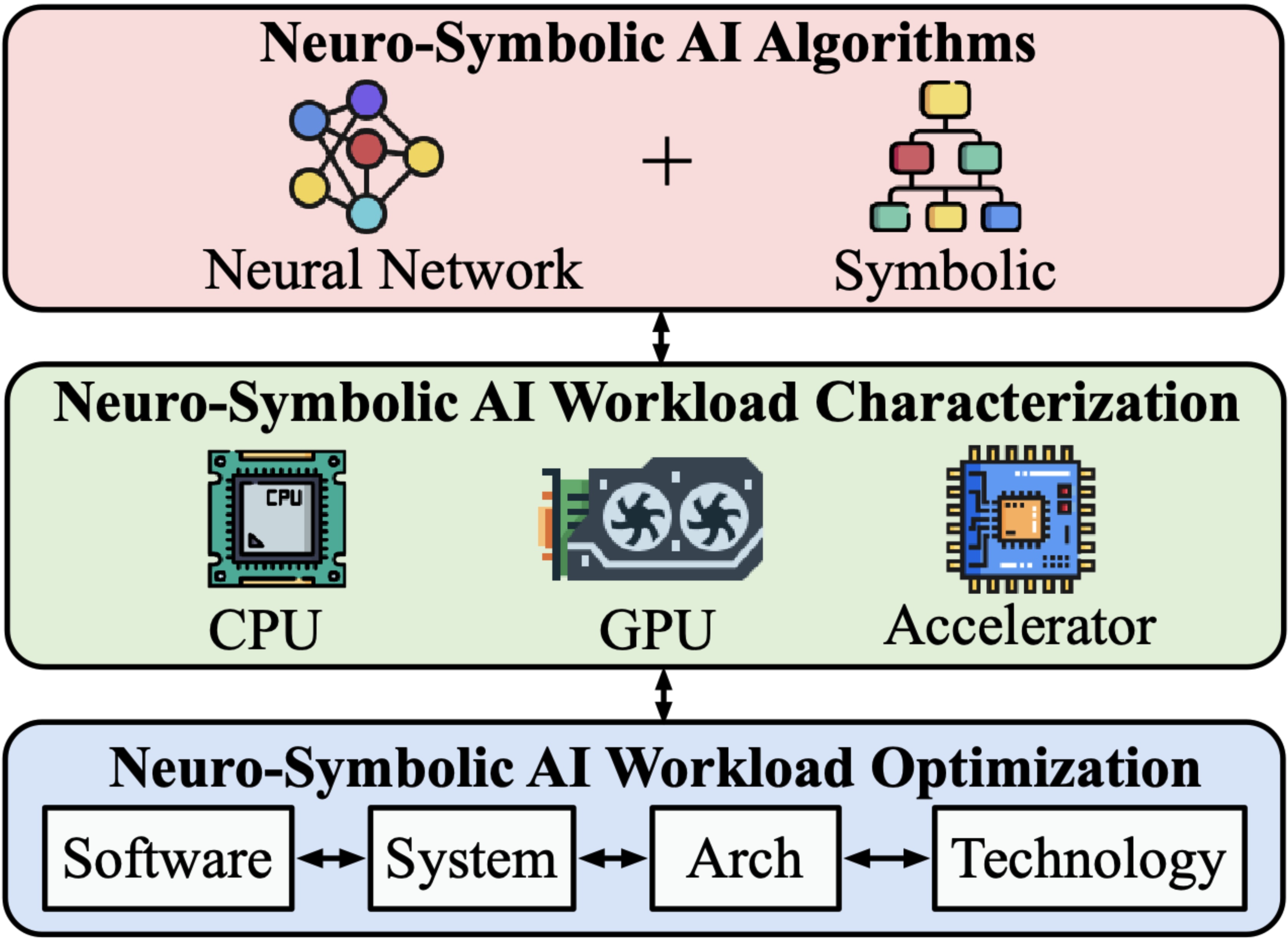

Towards Efficient Neuro-Symbolic AI: From Workload Characterization to Hardware Architecture

Zishen Wan, Che-Kai Liu, Hanchen Yang, Ritik Raj, Chaojian Li, Haoran You, Yonggan Fu, Cheng Wan, Sixu Li, Youbin Kim, Ananda Samajdar, Yingyan (Celine) Lin, Mohamed Ibrahim, Jan M. Rabaey, Tushar Krishna, and Arijit Raychowdhury

[Abstract]

The remarkable advancements in artificial intelligence (AI), primarily driven by deep neural networks, are facing challenges surrounding unsustainable computational trajectories, limited robustness, and a lack of explainability. To develop next-generation cognitive AI systems, neuro-symbolic AI emerges as a promising paradigm, fusing neural and symbolic approaches to enhance interpretability, robustness, and trustworthiness, while facilitating learning from much less data. Recent neuro-symbolic systems have demonstrated great potential in collaborative human-AI scenarios with reasoning and cognitive capabilities. In this paper, we aim to understand the workload characteristics and potential architectures for neuro-symbolic AI. We first systematically categorize neuro-symbolic AI algorithms, and then experimentally evaluate and analyze them in terms of runtime, memory, computational operators, sparsity, and system characteristics on CPUs, GPUs, and edge SoCs. Our studies reveal that neuro-symbolic models suffer from inefficiencies on off-the-shelf hardware, due to the memory-bound nature of vector-symbolic and logical operations, complex flow control, data dependencies, sparsity variations, and limited scalability. Based on profiling insights, we suggest cross-layer optimization solutions and present a hardware acceleration case study for vector-symbolic architecture to improve the performance, efficiency, and scalability of neuro-symbolic computing. Finally, we discuss the challenges and potential future directions of neuro-symbolic AI from both system and architectural perspectives.

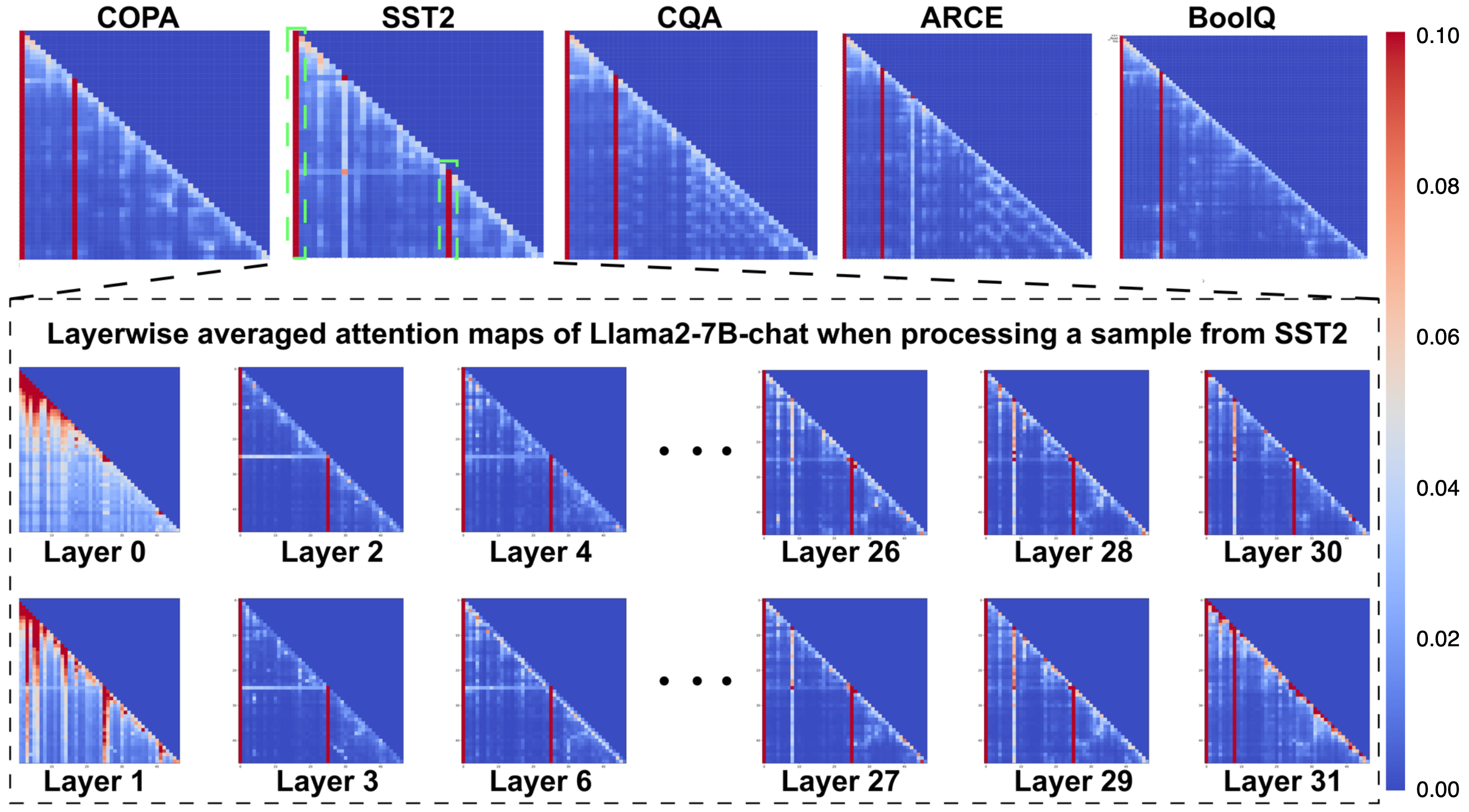

[ICML 2024] Unveiling and Harnessing Hidden Attention Sinks: Enhancing Large Language Models without Training through Attention Calibration

Zhongzhi Yu, Zheng Wang, Yonggan Fu, Shi Huihong, Khalid Shaikh, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Video] [Project Page]

Attention is a fundamental component behind the remarkable achievements of large language models (LLMs). However, our current understanding of the attention mechanism, especially in terms of how attention distributions are established, remains limited. Inspired by recent studies that explore the presence of attention sinks in the initial tokens, which receive disproportionately large attention scores despite their lack of semantic importance, this work delves deeper into this phenomenon. We aim to provide a more profound understanding of the existence of attention sinks within LLMs and to uncover ways to enhance LLMs' achievable accuracy by directly optimizing the attention distributions, without the need for weight finetuning. Specifically, this work begins with comprehensive visualizations of the attention distributions in LLMs during inference across various inputs and tasks. Based on these visualizations, for the first time, we discover that (1) attention sinks occur not only at the start of sequences but also within later tokens of the input, and (2) not all attention sinks have a positive impact on the achievable accuracy of LLMs. Building upon our findings, we propose a training-free Attention Calibration (ACT) technique that automatically optimizes the attention distributions on the fly during inference in an input-adaptive manner. Through extensive experiments, we demonstrate that our proposed ACT technique can enhance the accuracy of the pretrained Llama2-7B-chat by up to 3.16% across various tasks. The source code will be released upon acceptance.
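To make the notion of an attention sink concrete, here is a minimal sketch, not the authors' ACT procedure. It flags key tokens whose average received attention far exceeds the uniform share, then dampens them and renormalizes each row. The threshold `ratio`, the damping factor `scale`, and both function names are assumptions chosen for illustration. Because the abstract notes that not every sink hurts accuracy, the sinks to dampen are passed in explicitly rather than suppressed wholesale.

```python
def find_attention_sinks(attn, ratio=3.0):
    """Return indices of key tokens whose mean received attention
    exceeds `ratio` times the uniform share 1 / n_keys."""
    n_keys = len(attn[0])
    mean_received = [sum(row[j] for row in attn) / len(attn) for j in range(n_keys)]
    return [j for j, a in enumerate(mean_received) if a > ratio / n_keys]


def calibrate(attn, sinks, scale=0.5):
    """Scale down attention paid to `sinks`, then renormalize every
    row so that it sums to 1 again."""
    calibrated = []
    for row in attn:
        damped = [a * scale if j in sinks else a for j, a in enumerate(row)]
        total = sum(damped)
        calibrated.append([a / total for a in damped])
    return calibrated


# Toy 4x4 attention map (rows = queries, columns = keys); token 0 is a sink.
attn = [[0.7, 0.1, 0.1, 0.1] for _ in range(4)]
sinks = find_attention_sinks(attn, ratio=2.0)
new_attn = calibrate(attn, sinks)
print(sinks, new_attn[0])
```

Renormalizing after damping moves the removed attention mass onto the remaining tokens in proportion to their original scores.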

[ICML 2024] When Linear Attention Meets Autoregressive Decoding: Towards More Effective and Efficient Linearized Large Language Models

Haoran You, Yichao Fu, Zheng Wang, Amir Yazdanbakhsh, and Yingyan (Celine) Lin

[Abstract]

Autoregressive Large Language Models (LLMs) have achieved impressive performance in language tasks but face significant bottlenecks: (1) quadratic complexity bottleneck in the attention module with increasing token numbers, and (2) efficiency bottleneck due to the sequential processing nature of autoregressive LLMs during generation. Linear attention and speculative decoding emerge as solutions for these challenges, yet their applicability and combinatory potential for autoregressive LLMs remain uncertain. To this end, we embark on the first comprehensive empirical investigation into the efficacy of existing linear attention methods for autoregressive LLMs and their integration with speculative decoding. We introduce an augmentation technique for linear attention and ensure the compatibility between linear attention and speculative decoding for efficient LLM training and serving. Extensive experiments and ablation studies on seven existing linear attention works and five encoder/decoder-based LLMs consistently validate the effectiveness of our augmented linearized LLMs, e.g., achieving up to a 6.67 perplexity reduction on LLaMA and 2× speedups during generation as compared to prior linear attention methods.
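The appeal of linear attention for autoregressive decoding is that each new token only updates a fixed-size running state, instead of attending over all previous tokens. The sketch below shows generic causal linear attention with the common `elu(x) + 1` feature map; it is not the augmentation technique proposed in the paper. A quadratic reference implementation is included to confirm that both forms give the same output. All function names are illustrative.

```python
import math


def phi(v):
    """Positive feature map elu(x) + 1, a common choice in linear attention."""
    return [x + 1.0 if x > 0 else math.exp(x) for x in v]


def causal_linear_attention(Q, K, V):
    """O(n) causal attention: keep running sums S = sum phi(k) v^T and z = sum phi(k)."""
    d, dv = len(Q[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]
    z = [0.0] * d
    out = []
    for q, k, v in zip(Q, K, V):
        fk = phi(k)
        for i in range(d):
            z[i] += fk[i]
            for j in range(dv):
                S[i][j] += fk[i] * v[j]
        fq = phi(q)
        denom = sum(fq[i] * z[i] for i in range(d))
        out.append([sum(fq[i] * S[i][j] for i in range(d)) / denom for j in range(dv)])
    return out


def causal_quadratic_reference(Q, K, V):
    """O(n^2) reference: explicit kernel weights over all previous tokens."""
    out = []
    for t, q in enumerate(Q):
        fq = phi(q)
        w = [sum(a * b for a, b in zip(fq, phi(K[s]))) for s in range(t + 1)]
        total = sum(w)
        out.append([sum(w[s] * V[s][j] for s in range(t + 1)) / total
                    for j in range(len(V[0]))])
    return out


Q = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.6]]
K = [[0.2, 0.1], [-0.3, 0.5], [0.7, -0.1]]
V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fast = causal_linear_attention(Q, K, V)
slow = causal_quadratic_reference(Q, K, V)
```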

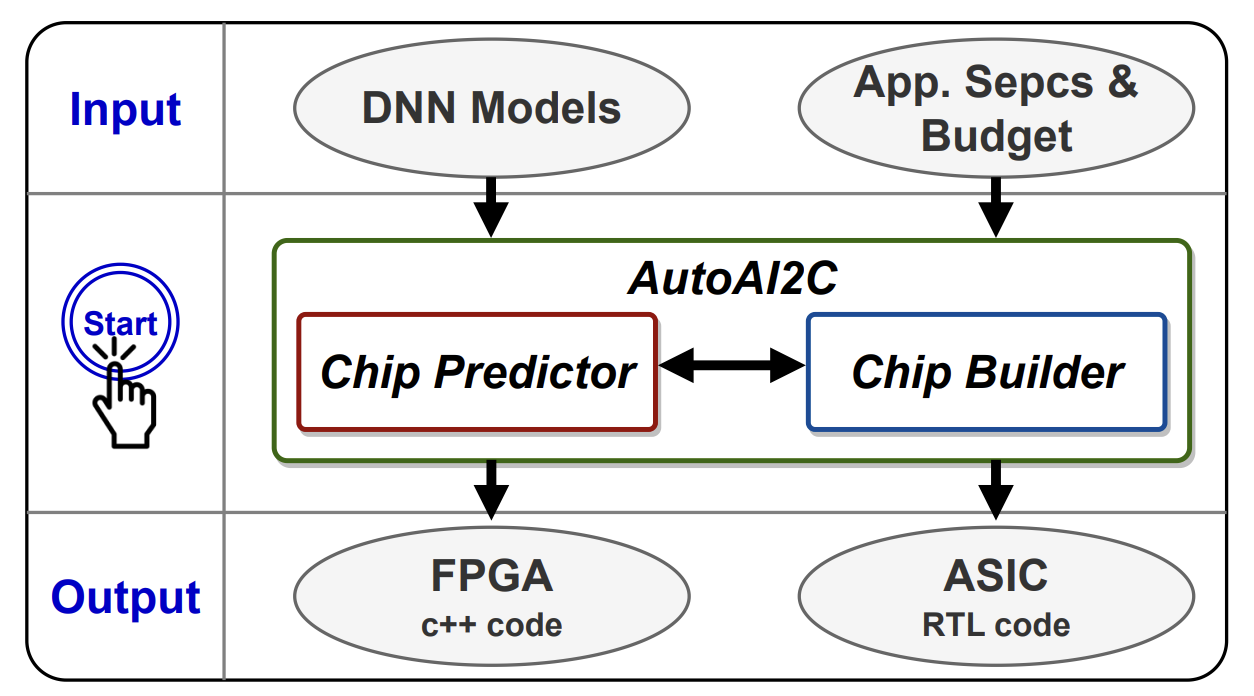

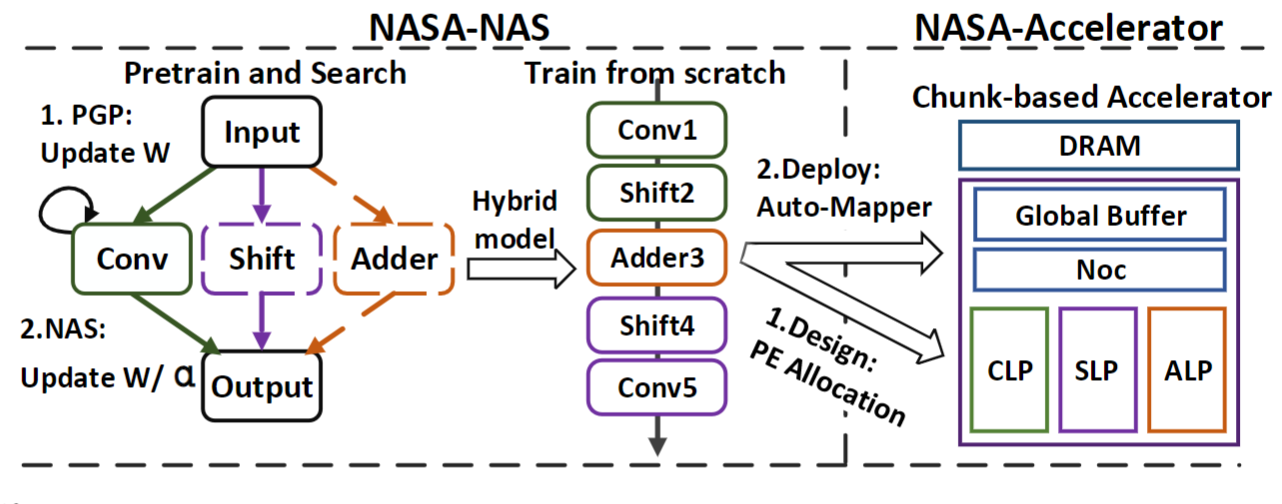

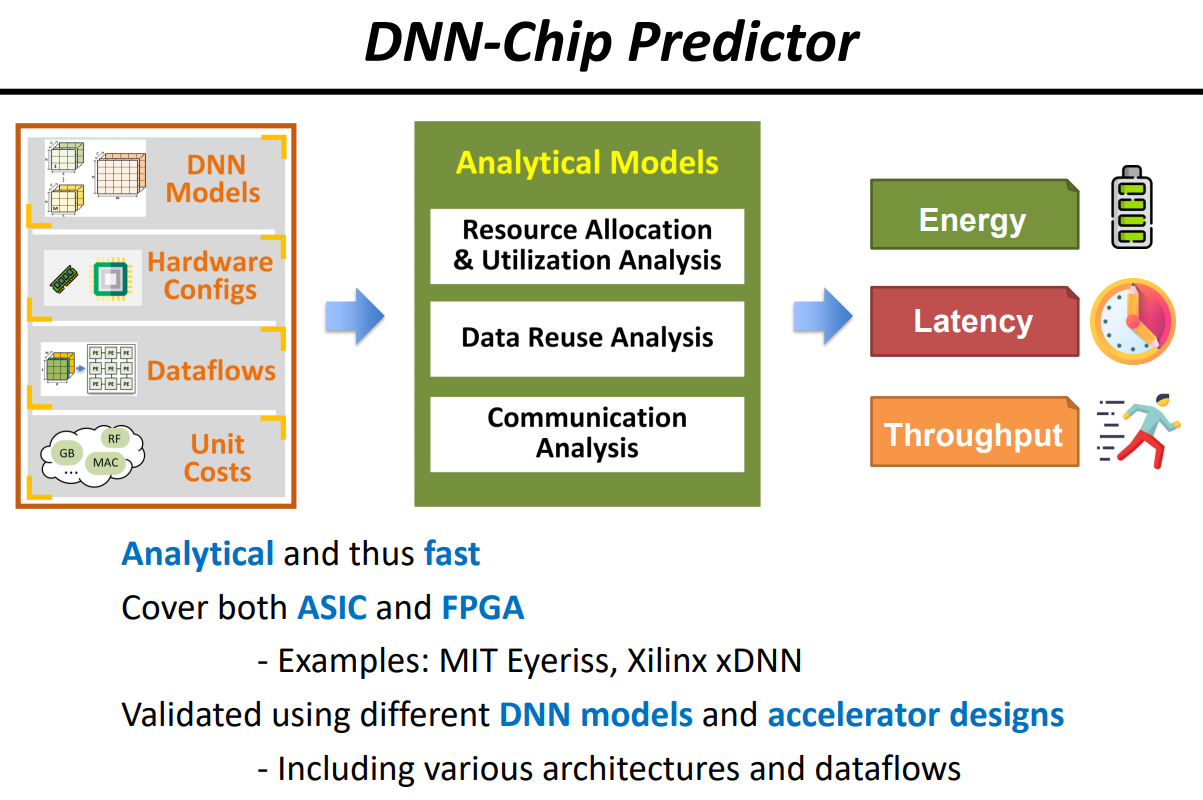

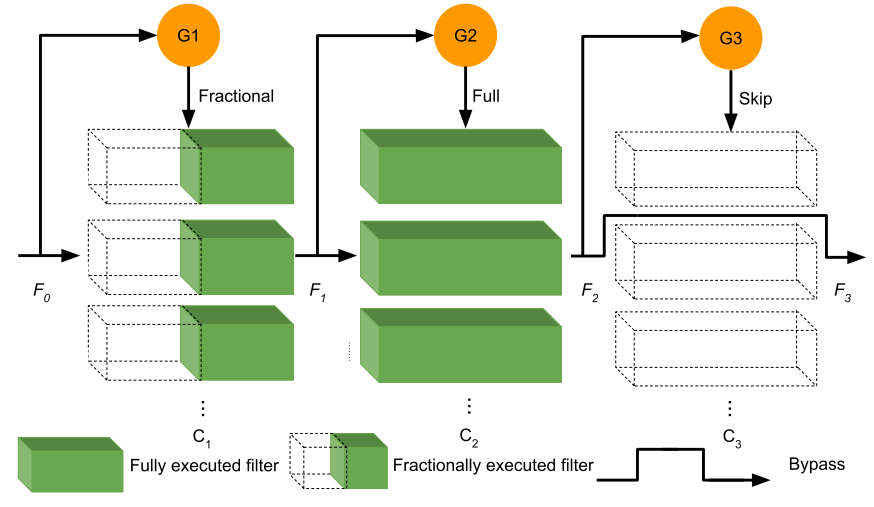

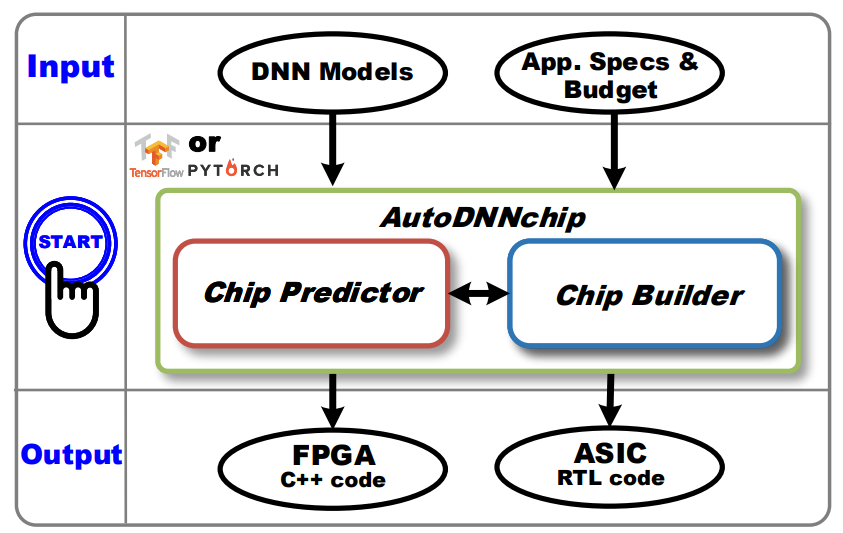

Recent advancements in Deep Neural Networks (DNNs) and the slowing of Moore’s law have made domain-specific hardware accelerators for DNNs (i.e., DNN chips) a promising means for enabling more extensive DNN applications. However, designing DNN chips is challenging due to (1) the vast and non-standardized design space and (2) different DNN models’ varying performance preferences regarding hardware micro-architecture and dataflows. Therefore, designing a DNN chip often takes a large team of interdisciplinary experts months to years. To enable flexible and efficient DNN chip design, we propose AutoAI2C: a DNN chip generator that can automatically generate both FPGA- and ASIC-based DNN accelerator implementations (i.e., synthesizable hardware and deployment code) with optimized algorithm-to-hardware mapping, given the DNN model specification from mainstream machine learning frameworks (e.g., PyTorch). Specifically, AutoAI2C consists of two major components: (1) a Chip Predictor, which can efficiently and reliably predict a DNN accelerator’s energy, latency, and resource consumption using the proposed graph-based intermediate accelerator representation, and (2) a Chip Builder, which can generate and optimize DNN accelerator designs by automatically exploring the design space based on target metrics and the Chip Predictor’s performance feedback. Extensive experiments show that our Chip Predictor’s predictions differ by 10% from real-measured ones. Furthermore, AutoAI2C-generated accelerators can achieve performance comparable to or better than state-of-the-art accelerators, achieving up to a 2.12× throughput improvement or 2.4× latency reduction with the same level of hardware resource usage, or reducing energy consumption by up to 1.6×, when running the same DNN workloads.

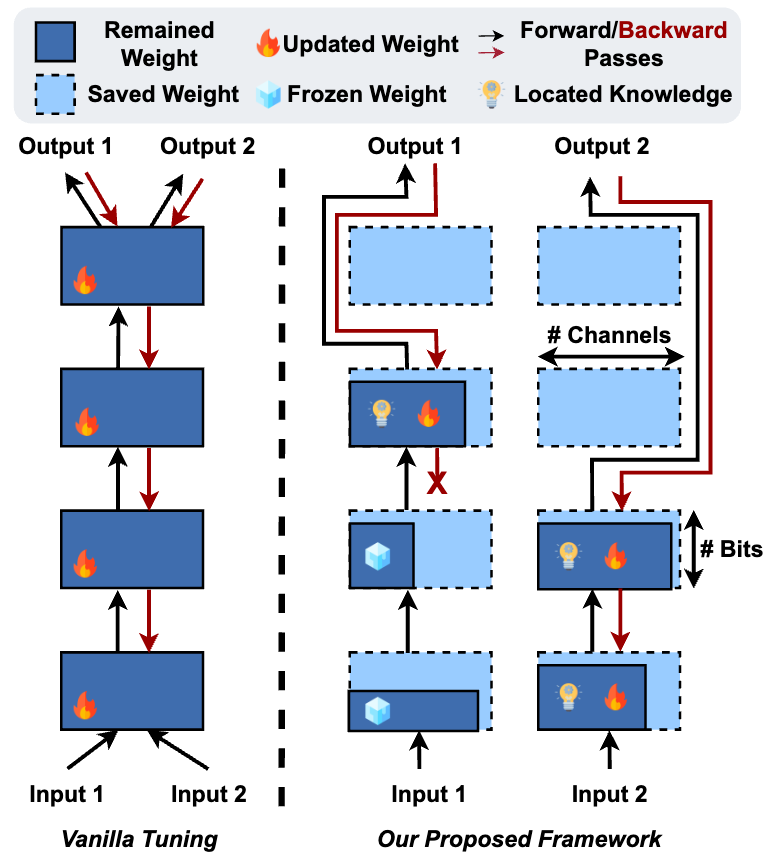

[DAC 2024] EDGE-LLM: Enabling Efficient Large Language Model Adaptation on Edge Devices via Unified Compression and Adaptive Layer Voting

Zhongzhi Yu, Zheng Wang, Yuhan Li, Ruijie Gao, Xiaoya Zhou, Sreenidhi Reddy Bommu, Yang (Katie) Zhao, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Video] [Project Page]

Efficiently adapting Large Language Models (LLMs) on resource-constrained devices, such as edge GPUs, is vital for applications requiring continuous and privacy-preserving adaptation. However, existing solutions fall short due to the high memory and computational overhead associated with LLMs. To address this, we introduce an LLM tuning framework, Edge-LLM, that features three core components: (1) a layerwise unified compression (LUC) method to reduce computation, offering cost-effective layer-wise pruning ratios and quantization bit-precision policies, (2) an adaptive layer tuning & voting scheme to reduce memory consumption, which selectively adjusts a subset of layers during each iteration and then adaptively combines their outputs for the final inference, thus reducing backpropagation depth and memory overhead during adaptation, and (3) a complementary hardware scheduling search space that optimizes hardware workload and utilization. Extensive experiments demonstrate that Edge-LLM achieves efficient on-device adaptation with task accuracy comparable to vanilla tuning methods and a 2.92x speedup in each training iteration.

[DAC 2024] 3D-Carbon: An Analytical Carbon Modeling Tool for 3D and 2.5D Integrated Circuits

Yujie Zhao, Yang (Katie) Zhao, Cheng Wan, and Yingyan (Celine) Lin

[Abstract]

Environmental sustainability is crucial for Integrated Circuits (ICs) across their lifecycle, particularly in manufacturing and use. Meanwhile, ICs using 3D/2.5D integration technologies have emerged as promising solutions to meet the growing demands for computational power. However, there is a distinct lack of carbon modeling tools for 3D/2.5D ICs. Addressing this, we propose 3D-Carbon, an analytical carbon modeling tool designed to quantify the carbon emissions of 3D/2.5D ICs throughout their life cycle. 3D-Carbon factors in both potential savings and overheads from advanced integration technologies, considering practical deployment constraints like bandwidth. We validate 3D-Carbon’s accuracy against established baselines and illustrate its utility through case studies in autonomous vehicles. We believe that 3D-Carbon lays the initial foundation for future innovations in developing environmentally sustainable 3D/2.5D ICs.

[3DV 2024] MixRT: Mixed Neural Representations For Real-Time NeRF Rendering

Chaojian Li, Bichen Wu, Peter Vajda, and Yingyan (Celine) Lin

[Abstract] [Paper] [Demo] [Project Page]

Neural Radiance Field (NeRF) has emerged as a leading technique for novel view synthesis, owing to its impressive photorealistic reconstruction and rendering capability. Nevertheless, achieving real-time NeRF rendering in large-scale scenes has presented challenges, often leading to the adoption of either intricate baked mesh representations with a substantial number of triangles or resource-intensive ray marching in baked representations. We challenge these conventions, observing that high-quality geometry, represented by meshes with a substantial number of triangles, is not necessary for achieving photorealistic rendering quality. Consequently, we propose MixRT, a novel NeRF representation that includes a low-quality mesh, a view-dependent displacement map, and a compressed NeRF model. This design effectively harnesses the capabilities of existing graphics hardware, thus enabling real-time NeRF rendering on edge devices. Leveraging a highly optimized WebGL-based rendering framework, our proposed MixRT attains real-time rendering speeds on edge devices (>30 FPS at a resolution of 1280 x 720 on a MacBook M1 Pro laptop), better rendering quality (0.2 PSNR higher on indoor scenes of the Unbounded-360 datasets), and smaller storage (80%) compared to SotA methods.

[NeurIPS 2023] ShiftAddViT: Mixture of Multiplication Primitives Towards Efficient Vision Transformer

Haoran You, Huihong Shi, Yipin Guo, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code]

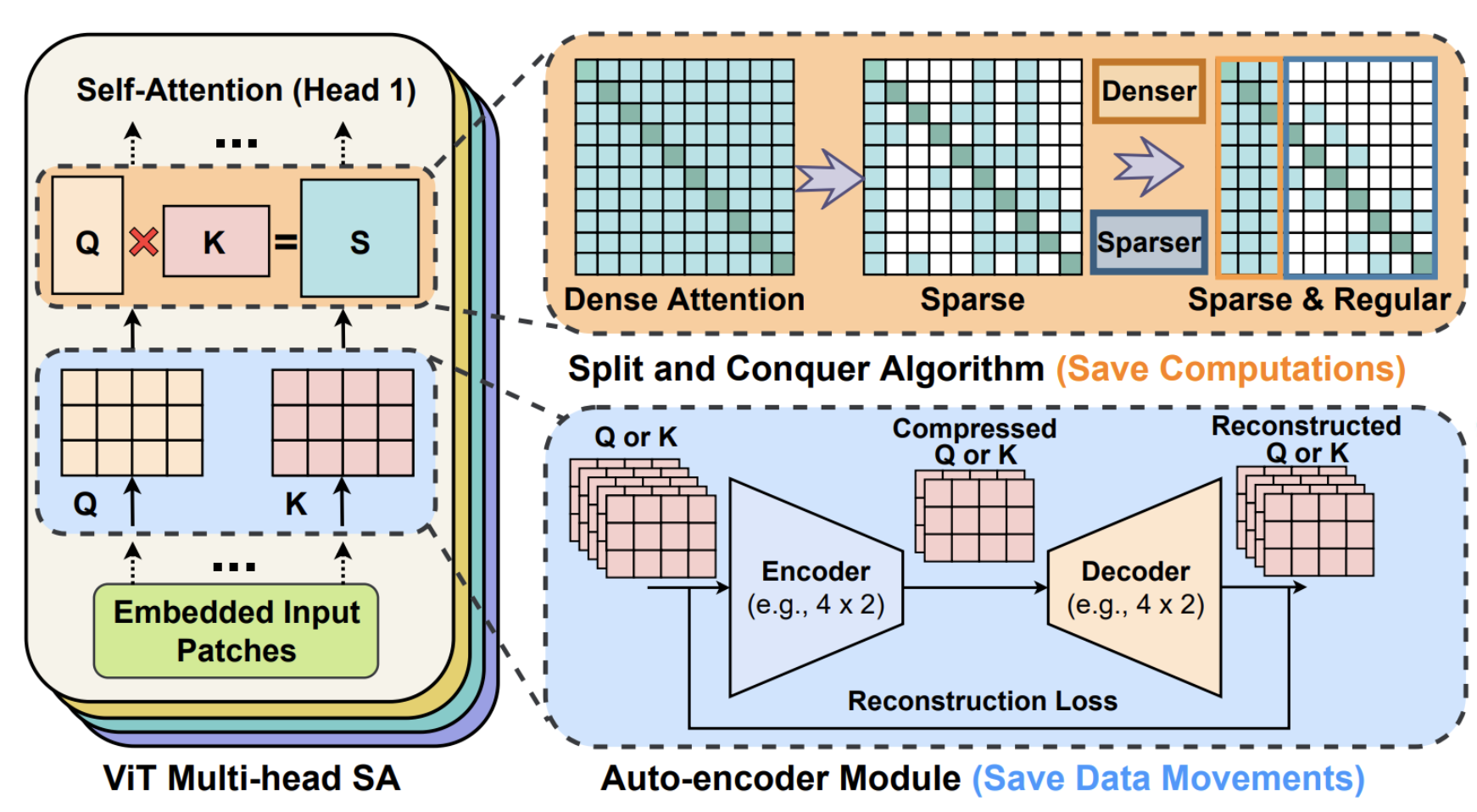

Vision Transformers (ViTs) have shown impressive performance and have become a unified backbone for multiple vision tasks. However, both attention and multi-layer perceptrons (MLPs) in ViTs are not efficient enough due to dense multiplications, resulting in costly training and inference. To this end, we propose to reparameterize the pre-trained ViT with a mixture of multiplication primitives, e.g., bitwise shifts and additions, towards a new type of multiplication-reduced model, dubbed ShiftAddViT, which aims for end-to-end inference speedups on GPUs without the need for training from scratch. Specifically, all MatMuls among queries, keys, and values are reparameterized by additive kernels, after mapping queries and keys to binary codes in Hamming space. The remaining MLPs or linear layers are then reparameterized by shift kernels. We utilize TVM to implement and optimize those customized kernels for practical hardware deployment on GPUs. We find that such a reparameterization on (quadratic or linear) attention maintains model accuracy, while inevitably leading to accuracy drops when being applied to MLPs. To marry the best of both worlds, we further propose a new mixture of experts (MoE) framework to reparameterize MLPs by taking multiplication or its primitives as experts, e.g., multiplication and shift, and designing a new latency-aware load-balancing loss. Such a loss helps to train a generic router for assigning a dynamic amount of input tokens to different experts according to their latency. In principle, the faster an expert runs, the more input tokens it is assigned. Extensive experiments consistently validate the effectiveness of our proposed ShiftAddViT, achieving up to 5.18× latency reductions on GPUs and 42.9% energy savings, while maintaining accuracy comparable to the original or efficient ViTs.
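The two multiplication primitives named in the abstract can be illustrated on scalars. This is a minimal sketch under stated assumptions, not the paper's TVM kernels: a weight is rounded to a signed power of two so that multiplying by it becomes a bit shift, and a dot product against a binary code in {-1, +1} reduces to additions and subtractions. All function names and the rounding rule (nearest power in the log2 domain) are illustrative choices.

```python
import math


def to_power_of_two(w):
    """Round a nonzero weight to sign * 2**p (nearest exponent in the log2 domain)."""
    sign = -1 if w < 0 else 1
    p = round(math.log2(abs(w)))
    return sign, p


def shift_multiply(x, sign, p):
    """Multiply a non-negative integer x by sign * 2**p using shifts only."""
    shifted = x << p if p >= 0 else x >> -p
    return sign * shifted


def additive_dot(code, values):
    """Dot product with a {-1, +1} binary code: only adds and subtracts."""
    total = 0.0
    for bit, v in zip(code, values):
        total = total + v if bit > 0 else total - v
    return total


# 3.7 is rounded to 2**2, so 5 * 3.7 is approximated by 5 << 2.
approx = shift_multiply(5, *to_power_of_two(3.7))
print(approx)  # 20
print(additive_dot([1, -1, 1], [2.0, 3.0, 4.0]))  # 3.0
```

The rounding error (20 versus the exact 18.5 here) shows the kind of approximation a power-of-two weight introduces.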

[IEEE Micro 2023] NetDistiller: Empowering Tiny Deep Learning via In-Situ Distillation

Shunyao Zhang, Yonggan Fu, Shang Wu, Jyotikrishna Dass, Haoran You, and Yingyan (Celine) Lin

[Abstract]

Boosting the task accuracy of tiny neural networks (TNNs) has become a fundamental challenge for enabling the deployments of TNNs on edge devices which are constrained by strict limitations in terms of memory, computation, bandwidth, and power supply. To this end, we propose a framework called NetDistiller to boost the achievable accuracy of TNNs by treating them as sub-networks of a weight-sharing teacher constructed by expanding the number of channels of the TNN. Specifically, the target TNN model is jointly trained with the weight-sharing teacher model via (1) gradient surgery to tackle the gradient conflicts between them and (2) uncertainty-aware distillation to mitigate the overfitting of the teacher model. Extensive experiments across diverse tasks validate NetDistiller’s effectiveness in boosting TNNs’ achievable accuracy over state-of-the-art methods.
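The abstract does not say which form of gradient surgery NetDistiller uses. As an illustration only, the sketch below shows one widely used variant, a PCGrad-style projection: when two gradients conflict (negative inner product), the component of one along the other is removed. The function names and the toy gradients are assumptions.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def project_conflict(g, other):
    """If g conflicts with `other` (negative inner product), remove the
    component of g along `other`; otherwise return g unchanged."""
    inner = dot(g, other)
    if inner >= 0:
        return list(g)
    coef = inner / dot(other, other)
    return [x - coef * y for x, y in zip(g, other)]


# Toy gradients for the shared weights (values are hypothetical).
g_student = [1.0, 0.0]
g_teacher = [-1.0, 1.0]
g_fixed = project_conflict(g_student, g_teacher)
print(g_fixed)  # [0.5, 0.5]
```

After projection, the student gradient is orthogonal to the teacher gradient, so a step along it no longer works against the teacher's objective to first order.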

[ICCAD 2023] GPT4AIGChip: Towards Next-Generation AI Accelerator Design Automation via Large Language Models

Yonggan Fu*, Yongan Zhang*, Zhongzhi Yu*, Sixu Li, Zhifan Ye, Chaojian Li, Cheng Wan, and Yingyan (Celine) Lin

[Abstract] [Paper]

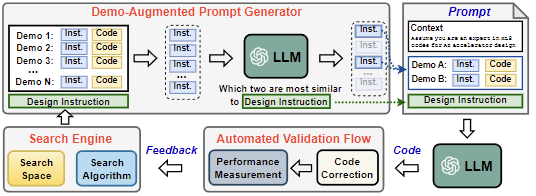

The remarkable capabilities and intricate nature of Artificial Intelligence (AI) have dramatically escalated the imperative for specialized AI accelerators. Nonetheless, designing these accelerators for various AI workloads remains both labor- and time-intensive. While existing design exploration and automation tools can partially alleviate the need for extensive human involvement, they still demand substantial hardware expertise, posing a barrier to non-experts and stifling AI accelerator development. Motivated by the astonishing potential of large language models (LLMs) for generating high-quality content in response to human language instructions, we embark on this work to examine the possibility of harnessing LLMs to automate AI accelerator design. Through this endeavor, we develop GPT4AIGChip, a framework intended to democratize AI accelerator design by leveraging human natural languages instead of domain-specific languages. Specifically, we first perform an in-depth investigation into LLMs’ limitations and capabilities for AI accelerator design, thus aiding our understanding of our current position and garnering insights into LLM-powered automated AI accelerator design. Furthermore, drawing inspiration from the above insights, we develop the GPT4AIGChip framework, which features an automated demo-augmented prompt-generation pipeline utilizing in-context learning to guide LLMs towards creating high-quality AI accelerator design. To our knowledge, this work is the first to demonstrate an effective pipeline for LLM-powered automated AI accelerator generation. Accordingly, we anticipate that our insights and framework can serve as a catalyst for innovations in next-generation LLM-powered design automation tools.

[TECS 2023] An Investigation on Hardware-Aware Vision Transformer Scaling

Chaojian Li, Kyungmin Kim, Bichen Wu, Peizhao Zhang, Hang Zhang, Xiaoliang Dai, Peter Vajda, and Yingyan (Celine) Lin

[Abstract] [Paper]

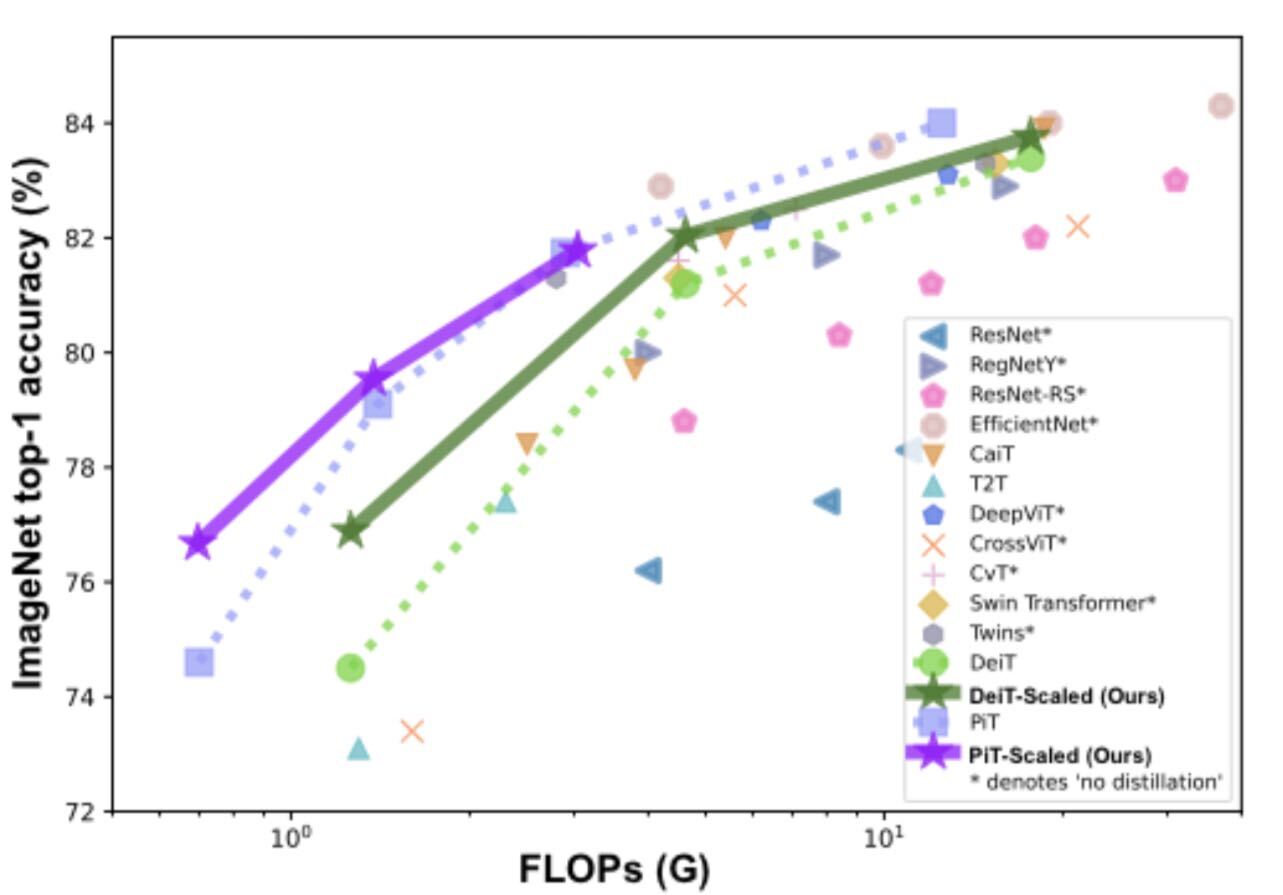

Vision Transformer (ViT) has demonstrated promising performance in various computer vision tasks and has recently attracted a lot of research attention. Many recent works have focused on proposing new architectures to improve ViT and deploying it in real-world applications. However, little effort has been made to analyze and understand ViT’s architecture design space and its implications for hardware cost on different devices. In this work, by simply scaling ViT's depth, width, input size, and other basic configurations, we show that a scaled vanilla ViT model without bells and whistles can achieve an accuracy-efficiency trade-off comparable or superior to most of the latest ViT variants. Specifically, compared to DeiT-Tiny, our scaled model achieves a 1.9% higher ImageNet top-1 accuracy under the same FLOPs and a 3.7% higher ImageNet top-1 accuracy under the same latency on an NVIDIA Edge GPU TX2. Motivated by this, we further investigate the extracted scaling strategies from the following two aspects: (1) can these scaling strategies be transferred across different real hardware devices; and (2) can these scaling strategies be transferred to different ViT variants and tasks? For (1), our exploration, based on various devices with different resource budgets, indicates that the transferability effectiveness depends on the underlying device together with its corresponding deployment tool; for (2), we validate the effective transferability of the aforementioned scaling strategies obtained from a vanilla ViT model on top of an image classification task to the PiT model, a strong ViT variant targeting efficiency, as well as object detection and video classification tasks. In particular, when transferred to PiT, our scaling strategies boost ImageNet top-1 accuracy from 74.6% to 76.7% (+2.1%) under the same 0.7G FLOPs; and when transferred to the COCO object detection task, the average precision is boosted by +0.7% under a similar throughput on a V100 GPU.

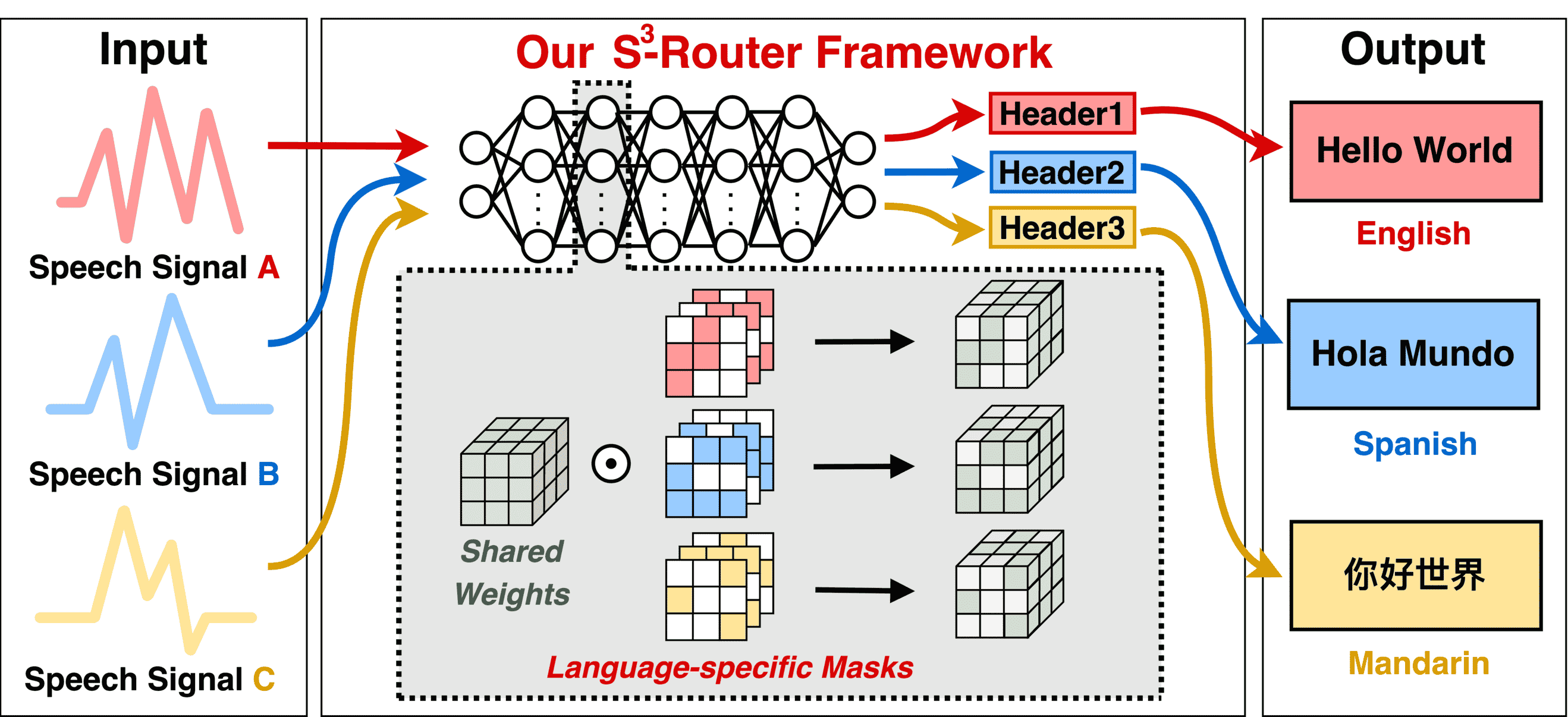

[ICML 2023] Master-ASR: Achieving Multilingual Scalability and Low-Resource Adaptation in ASR with Modularized Learning

Zhongzhi Yu, Yang Zhang, Kaizhi Qian, Cheng Wan, Yonggan Fu, Yongan Zhang, and Yingyan (Celine) Lin

[Abstract] [Paper] [Video] [Project Page]

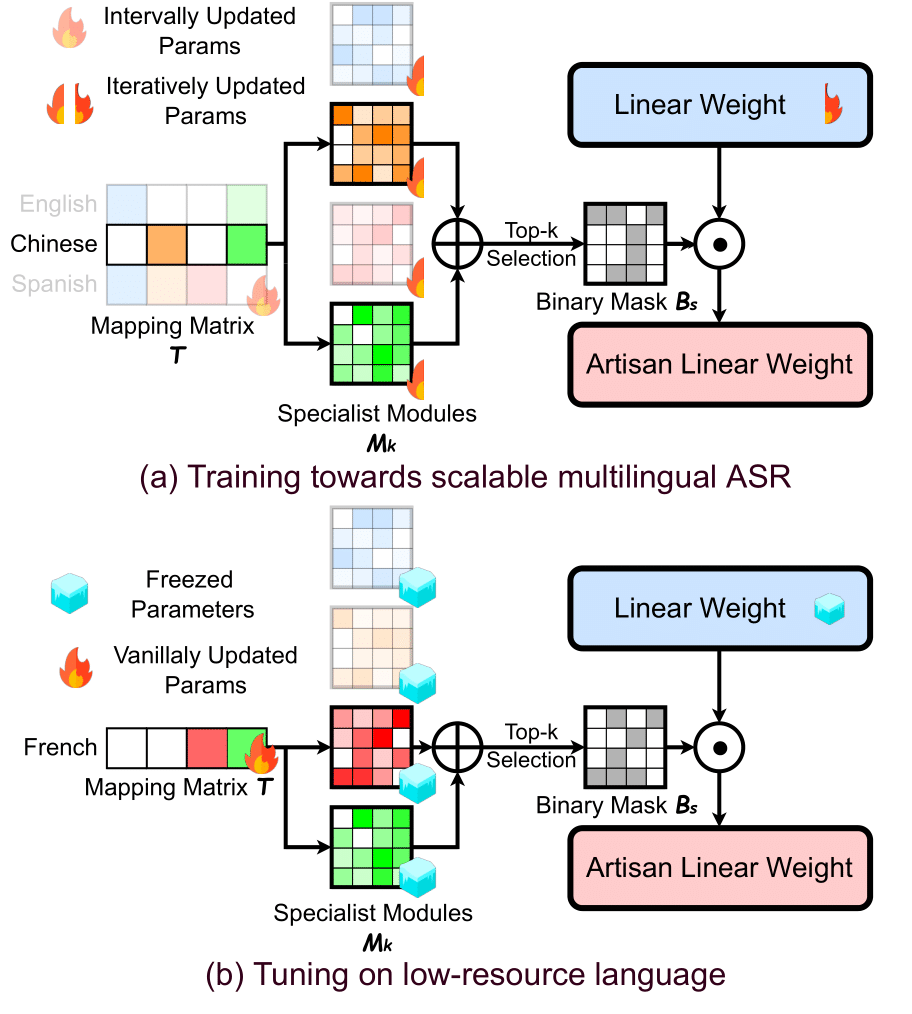

Despite the impressive performance achieved by automatic speech recognition (ASR) recently, we observe that there are still two challenges in ASR that hinder its wider application: (1) the difficulty of introducing scalability into the model for supporting more languages with limited training, inference, and storage overhead, and (2) the difficulty of adapting effectively to low-resource languages while avoiding over-fitting and catastrophic forgetting. Inspired by recent findings, we hypothesize that we can tackle the above challenges with modules widely shared across languages. To this end, we propose an ASR framework, dubbed Master-ASR, that, for the first time, simultaneously achieves strong multilingual scalability and low-resource adaptation ability in a modularize-then-assemble manner. Specifically, Master-ASR learns a small set of generalized sub-modules and adaptively assembles them for different languages to reduce the multilingual overhead and enable effective knowledge transfer for low-resource adaptation. Extensive experiments and visualizations show that Master-ASR can effectively discover language similarity and improve multilingual and low-resource ASR performance over state-of-the-art (SOTA) methods (e.g., a 0.13 to 2.41 lower CER with 30% less inference overhead over SOTA solutions on multilingual ASR, and a comparable CER with nearly 500 times fewer trainable parameters over SOTA solutions on low-resource tuning).

[ICML 2023] NeRFool: Uncovering the Vulnerability of Generalizable Neural Radiance Fields against Adversarial Perturbations

Yonggan Fu, Ye Yuan, Souvik Kundu, Shang Wu, Shunyao Zhang, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Video]

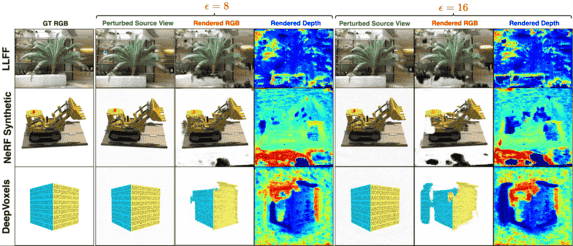

Generalizable Neural Radiance Fields (GNeRF) are one of the most promising real-world solutions for novel view synthesis, thanks to their cross-scene generalization capability and thus the possibility of instant rendering on new scenes. While adversarial robustness is essential for real-world applications, little study has been devoted to understanding its implications for GNeRF. We hypothesize that because GNeRF is implemented by conditioning on the source views from new scenes, which are often acquired from the Internet or third-party providers, there are potential new security concerns regarding its real-world applications. Meanwhile, existing understanding and solutions for neural networks' adversarial robustness may not be applicable to GNeRF, due to its 3D nature and uniquely diverse operations. To this end, we present NeRFool/NeRFool$^+$, which to the best of our knowledge are the first works that set out to understand the adversarial robustness of GNeRF. Specifically, NeRFool unveils the vulnerability patterns and important insights regarding GNeRF's adversarial robustness; built upon the insights gained from NeRFool, we further develop NeRFool$^+$, which integrates three techniques that can effectively attack GNeRF across a wide range of target views, and we provide guidelines for defending against our proposed NeRFool$^+$ attacks. We believe that NeRFool/NeRFool$^+$ lay the initial foundation for future innovations in developing robust real-world GNeRF solutions.

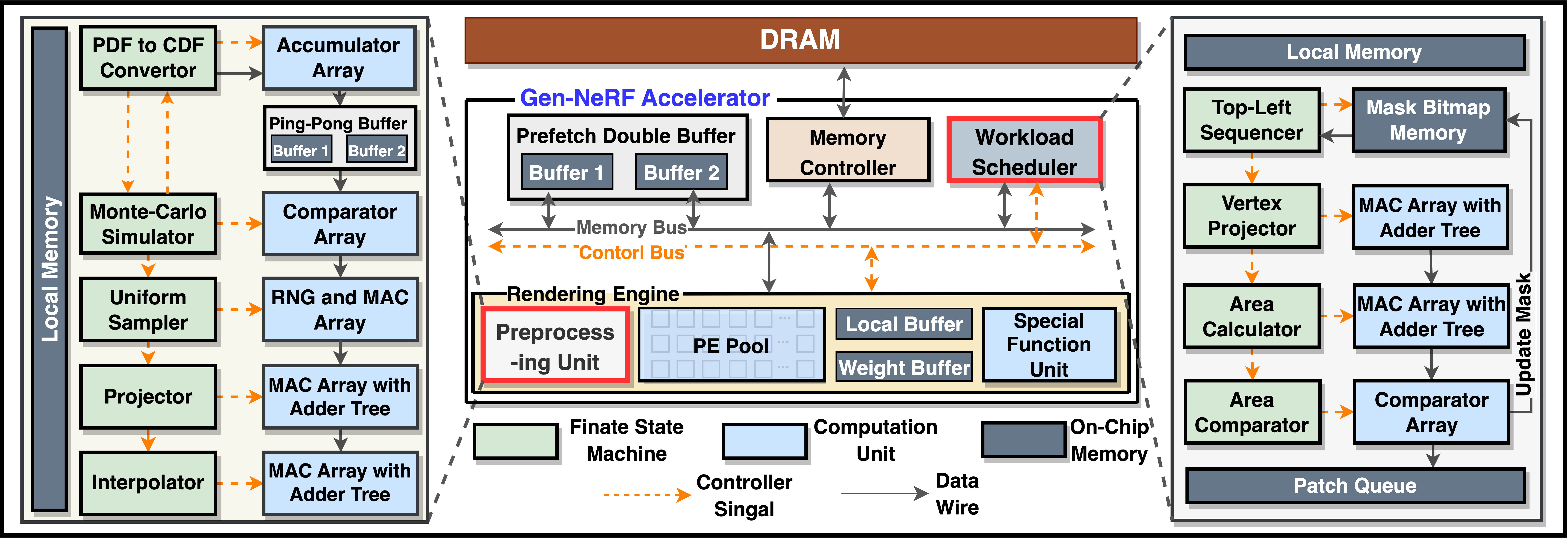

[ISCA 2023] Gen-NeRF: Efficient and Generalizable Neural Radiance Fields via Algorithm-Hardware Co-Design

Yonggan Fu, Zhifan Ye, Jiayi Yuan, Shunyao Zhang, Sixu Li, Haoran You, and Yingyan (Celine) Lin

[Abstract] [Paper] [Video]

Novel view synthesis is an essential functionality for enabling immersive experiences in various Augmented- and Virtual-Reality (AR/VR) applications like the Metaverse, for which Neural Radiance Field (NeRF) has emerged as the state-of-the-art technique. In particular, generalizable NeRFs have gained increasing popularity thanks to their cross-scene generalizable capability, which enables NeRFs to be instantly serviceable for new scenes without per-scene training. Despite their promise, generalizable NeRFs aggravate the prohibitive complexity of NeRFs due to extra memory accesses needed to acquire scene features, causing NeRFs’ ray marching process to be memory-bounded. To this end, we propose Gen-NeRF, an algorithm-hardware co-design framework dedicated to generalizable NeRF acceleration, aiming to win both rendering efficiency and generalization capability in NeRFs. To the best of our knowledge, Gen-NeRF is the first to enable real-time generalizable NeRFs, demonstrating a promising NeRF solution for next-generation AR/VR devices. On the algorithm side, Gen-NeRF integrates a coarse-then-focus volume sampling strategy, leveraging the fact that different regions of a 3D scene can feature diverse sparsity ratios depending on where the objects are located in the scene to enable sparse yet effective sampling. On the hardware side, Gen-NeRF highlights an accelerator micro-architecture to maximize the data reuse opportunities among different rays by making use of their epipolar geometric relationship. Furthermore, our Gen-NeRF accelerator features a customized dataflow to enhance data locality during point-to-hardware mapping and an optimized scene feature storage strategy to minimize memory bank conflicts across camera rays. Extensive experiments validate Gen-NeRF's effectiveness in enabling real-time and generalizable novel view synthesis.
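The abstract gives only the intuition behind coarse-then-focus sampling: most of a ray passes through empty space, so dense samples are worth placing only near occupied regions. The sketch below is one way to express that idea on a single ray and is not Gen-NeRF's actual algorithm. The `density` callback, the threshold, the sample counts, and the one-step padding around the occupied interval are all assumptions.

```python
def linspace(a, b, n):
    """Return n evenly spaced values from a to b inclusive."""
    if n == 1:
        return [a]
    step = (b - a) / (n - 1)
    return [a + i * step for i in range(n)]


def coarse_then_focus(density, near, far, n_coarse=8, n_focus=16, threshold=0.01):
    """Probe the ray coarsely, then place dense samples only around the
    interval where the coarse probes found non-negligible density."""
    coarse = linspace(near, far, n_coarse)
    occupied = [t for t in coarse if density(t) > threshold]
    if not occupied:
        return []  # the ray hits nothing: skip fine sampling entirely
    step = (far - near) / (n_coarse - 1)
    lo = max(near, min(occupied) - step)  # pad by one coarse step on each side
    hi = min(far, max(occupied) + step)
    return linspace(lo, hi, n_focus)


# Hypothetical scene: a single object occupying depths 2.0 to 3.0 along the ray.
def toy_density(t):
    return 1.0 if 2.0 <= t <= 3.0 else 0.0


samples = coarse_then_focus(toy_density, near=0.0, far=7.0)
print(samples[0], samples[-1], len(samples))
```

With these settings the 16 fine samples cover depths 1.0 to 4.0 rather than the full 0.0 to 7.0 range, and an empty ray costs only the 8 coarse probes.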

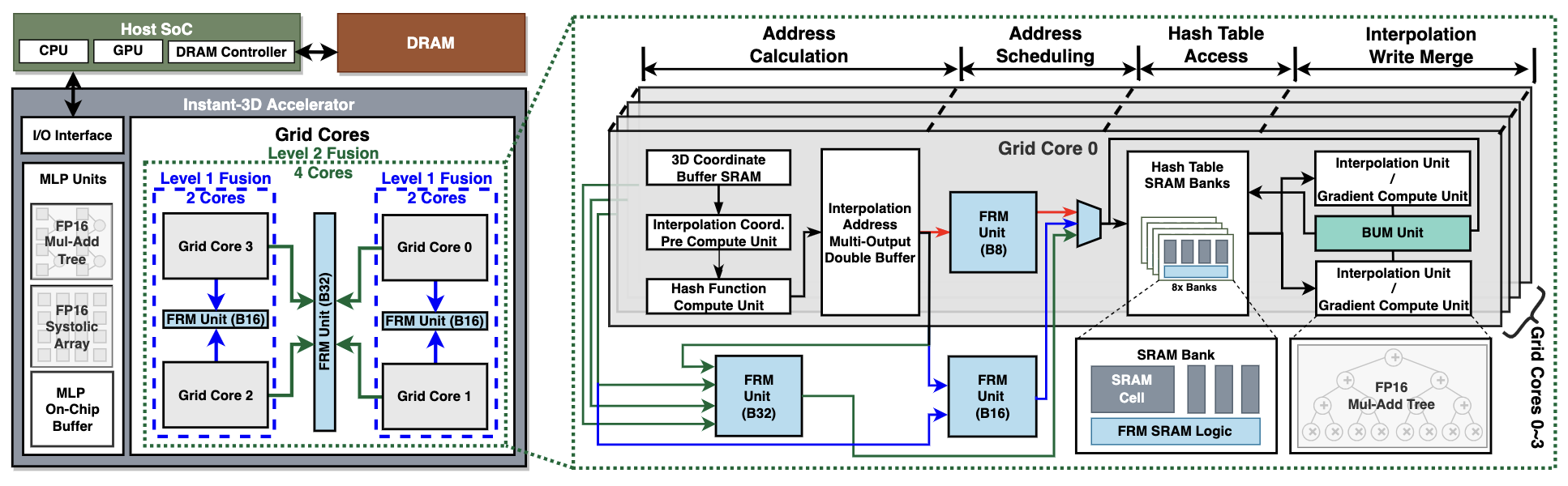

[ISCA 2023] Instant-3D: Instant Neural Radiance Fields Training Towards Real-Time AR/VR 3D Reconstruction

Sixu Li, Chaojian Li, Wenbo Zhu, Boyang (Tony) Yu, Yang Zhao, Cheng Wan, Haoran You, Huihong Shi, and Yingyan (Celine) Lin

[Abstract] [Paper] [Video]

Neural Radiance Field (NeRF) based 3D reconstruction is highly desirable for immersive Augmented and Virtual Reality (AR/VR) applications, but instant (i.e., < 5 seconds) on-device NeRF training remains a challenge. In this work, we first identify the inefficiency bottleneck: the need to interpolate NeRF embeddings up to 200,000 times from a 3D embedding grid during each training iteration. To alleviate this, we propose Instant-3D, an algorithm-hardware co-design acceleration framework that achieves instant on-device NeRF training. Our algorithm decomposes the embedding grid representation in terms of color and density, enabling computational redundancy to be squeezed out by adopting different (1) grid sizes and (2) update frequencies for the color and density branches. Our hardware accelerator further reduces the dominant memory accesses for embedding grid interpolation by (1) mapping multiple nearby points’ memory read requests into one during the feed-forward process, (2) merging embedding grid updates from the same sliding time window during back-propagation, and (3) fusing different computation cores to support different grid sizes needed by the color and density branches of the Instant-3D algorithm. Extensive experiments validate the effectiveness of our proposed Instant-3D, achieving a large training runtime reduction of 41× - 248× while maintaining the same reconstruction quality. Excitingly, Instant-3D has fulfilled the goal of instant 3D reconstruction for AR/VR, requiring only 1.6 seconds per scene and meeting the AR/VR power consumption constraint of 1.9 W.
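To see why embedding-grid interpolation dominates memory traffic, it helps to look at a single lookup. The sketch below assumes trilinear interpolation over a dense grid with scalar embeddings; the abstract does not specify the interpolation scheme or grid layout, so treat both as assumptions. Each query reads the eight corners of its enclosing cell, which is the access pattern that nearby sample points could share.

```python
def trilinear(grid, x, y, z):
    """Interpolate grid[i][j][k] at a continuous point (x, y, z).

    Assumes 0 <= x, y, z and that the point lies strictly inside the grid,
    so all eight surrounding corners exist.
    """
    x0, y0, z0 = int(x), int(y), int(z)
    fx, fy, fz = x - x0, y - y0, z - z0
    value = 0.0
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                weight = ((fx if dx else 1 - fx)
                          * (fy if dy else 1 - fy)
                          * (fz if dz else 1 - fz))
                value += weight * grid[x0 + dx][y0 + dy][z0 + dz]  # one memory read
    return value


# 2x2x2 toy grid holding the linear function i + 2j + 4k at each corner.
grid = [[[i + 2 * j + 4 * k for k in range(2)] for j in range(2)] for i in range(2)]
center = trilinear(grid, 0.5, 0.5, 0.5)
print(center)  # 3.5
```

Two sample points that fall in the same cell read the same eight corners, which is why merging nearby read requests can cut memory accesses.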

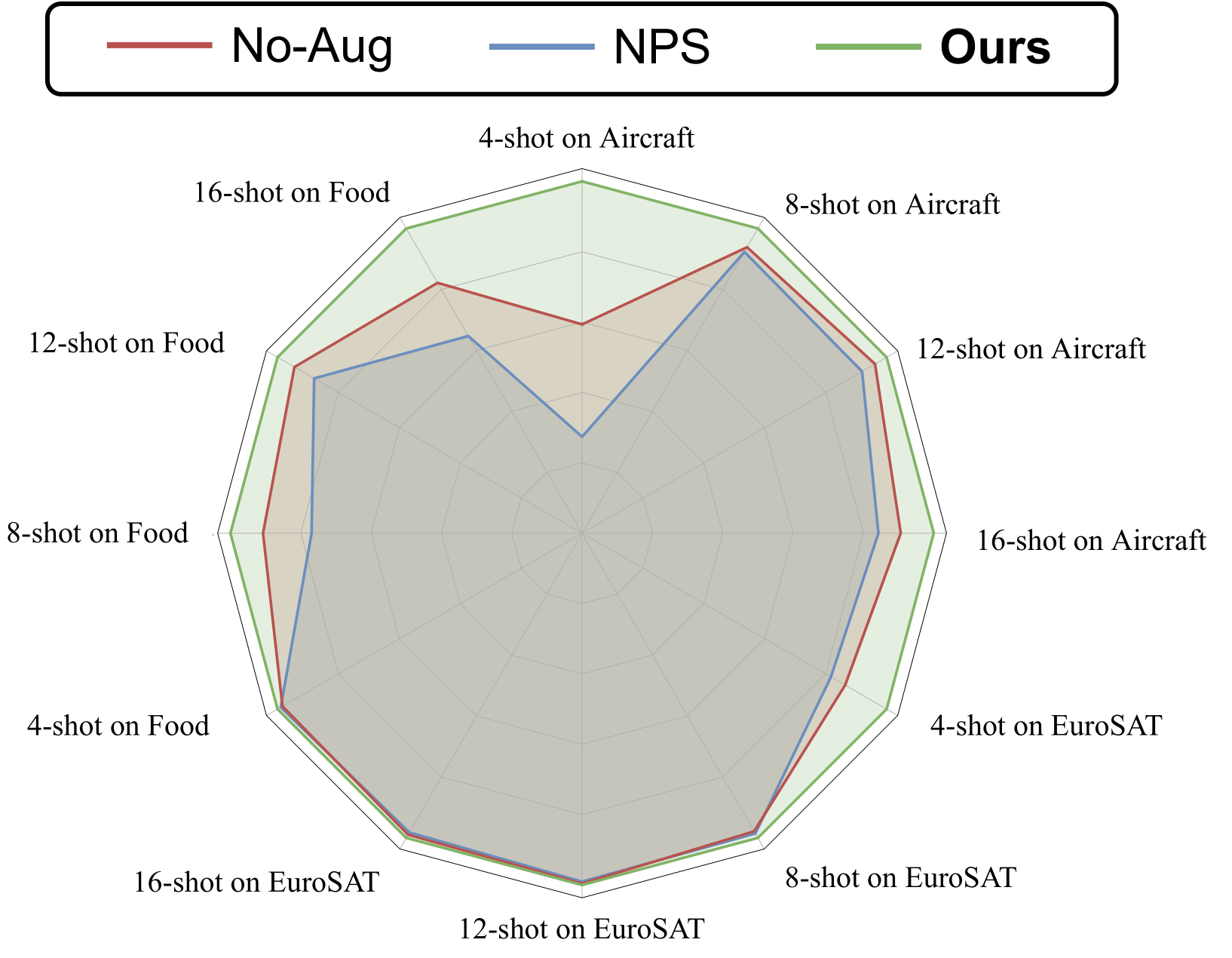

[CVPR 2023] Hint-Aug: Drawing Hints from Vision Foundation Models towards Boosted Few-shot Parameter-Efficient ViT Tuning

Zhongzhi Yu, Shang Wu, Yonggan Fu, Cheng Wan, Shunyao Zhang, Chaojian Li, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Project Page]

Despite the promise of finetuning foundation vision transformers (ViTs) on downstream tasks, existing techniques are still not satisfactory under few-shot scenarios due to the data-hungry nature of ViTs. To tackle this, previous data augmentation techniques fall short because of the limited features contained in few-shot training data. We first identify an opportunity for few-shot tuning foundation ViT models: pretrained ViTs themselves have already learned highly representative features which are not updated during finetuning. We thus hypothesize the possibility of leveraging those learned features to augment the few-shot training data for boosting the effectiveness of finetuning pretrained foundation ViTs. To this end, we propose a framework called Hint-based Data Augmentation (Hint-Aug), which is dedicated to boosting the effectiveness of few-shot tuning foundation ViT models. Hint-Aug is achieved by augmenting the over-fitted parts of training samples with the learned features of pretrained ViT models. Specifically, Hint-Aug integrates two key enablers: (1) an Attentive Over-fitting Detector (AOD) to detect over-confident patches of foundation ViTs for potentially alleviating their over-fitting of the few-shot training set and (2) a Confusion-based Feature Infusion (CFI) module to infuse easy-to-confuse features from the given pretrained foundation ViT with the aforementioned detected over-confident patches for enhancing the feature diversity during finetuning. Extensive experiments and ablation studies on seven datasets and three parameter-efficient tuning techniques consistently validate Hint-Aug's effectiveness: 0.04% to 6.89% higher accuracy over the state-of-the-art (SOTA) data augmentation methods. Specifically, on the Pet dataset, Hint-Aug achieves a 2.22% higher accuracy with 50% less training data over SOTA data augmentation methods.

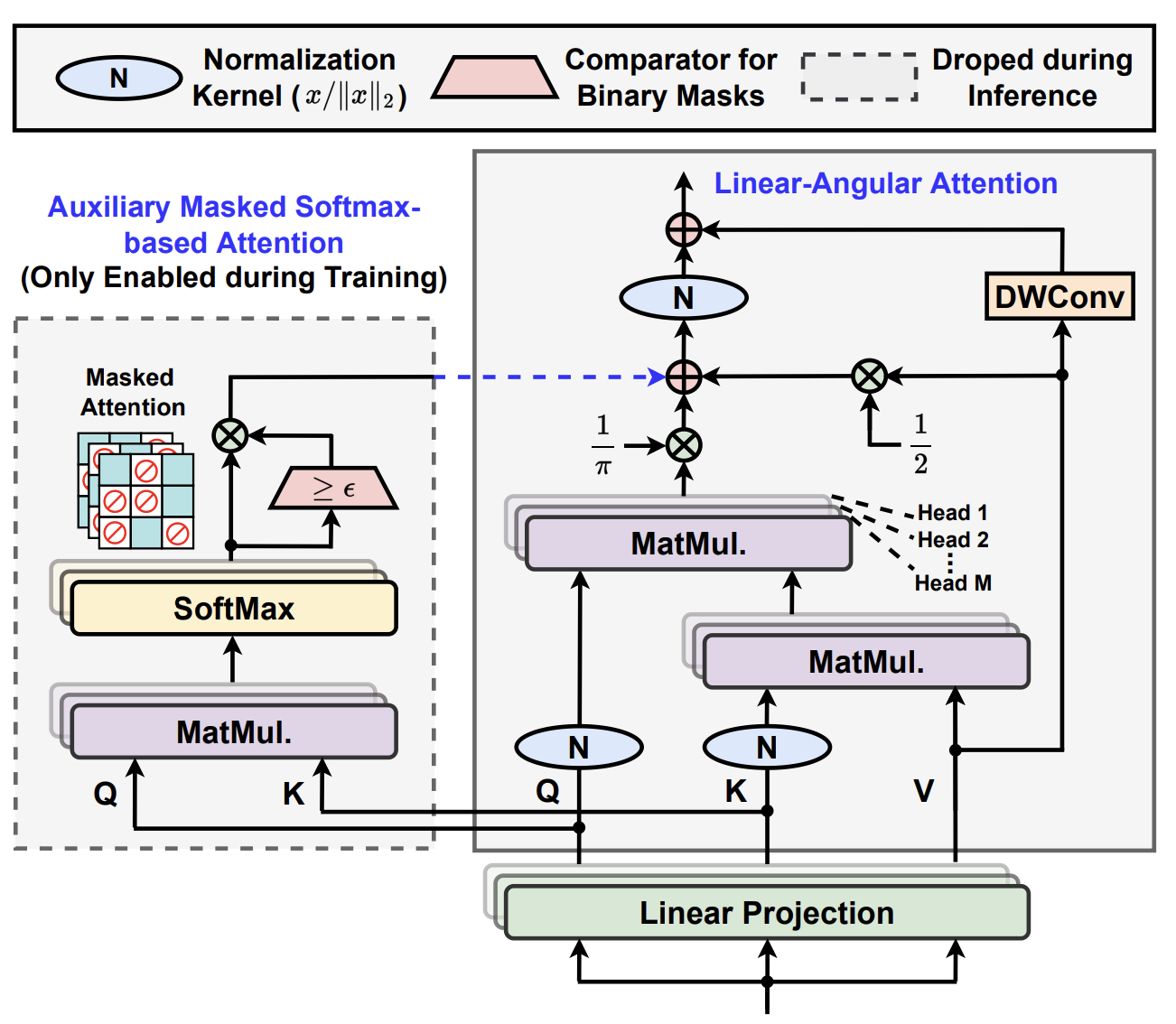

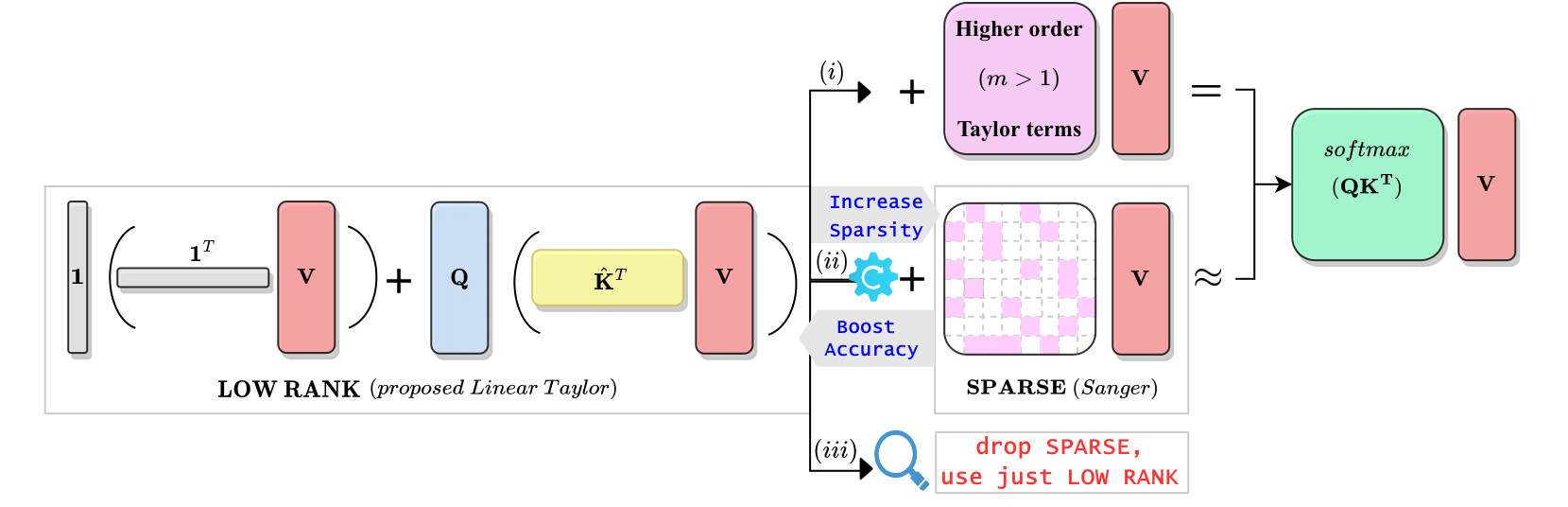

[CVPR 2023] Castling-ViT: Compressing Self-Attention via Switching Towards Linear-Angular Attention During Vision Transformer Inference

Haoran You, Yunyang Xiong, Xiaoliang Dai, Bichen Wu, Peizhao Zhang, Haoqi Fan, Peter Vajda, and Yingyan (Celine) Lin

[Abstract] [Paper] [Code] [Video] [Project Page] [Slides]

Vision Transformers (ViTs) have shown impressive performance but still require a high computation cost as compared to convolutional neural networks (CNNs), due to the global similarity measurements and thus a quadratic complexity with the input tokens. Existing efficient ViTs adopt local attention (e.g., Swin) or linear attention (e.g., Performer), which sacrifice ViTs' capabilities of capturing either global or local context. In this work, we ask an important research question: Can ViTs learn both global and local context while being more efficient during inference? To this end, we propose a framework called Castling-ViT, which trains ViTs using both linear-angular attention and masked softmax-based quadratic attention, but then switches to having only linear-angular attention during ViT inference. Our Castling-ViT leverages angular kernels to measure the similarities between queries and keys via spectral angles. We further simplify it with two techniques: (1) a novel linear-angular attention mechanism: we decompose the angular kernels into linear terms and high-order residuals, and only keep the linear terms; and (2) we adopt two parameterized modules to approximate high-order residuals: a depthwise convolution and an auxiliary masked softmax attention to help learn both global and local information, where the masks for softmax attention are regularized to gradually become zeros and thus incur no overhead during ViT inference. Extensive experiments and ablation studies on three tasks consistently validate the effectiveness of the proposed Castling-ViT, e.g., achieving up to a 1.8% higher accuracy or 40% MACs reduction on ImageNet classification and 1.2 higher mAP on COCO detection under comparable FLOPs, as compared to ViTs with vanilla softmax-based attentions.
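
As a rough illustration of why keeping only the linear terms removes the quadratic cost, the sketch below assumes the angular kernel has the form 1 - θ/π for unit-normalized queries and keys, whose first-order expansion in q·k is 1/2 + (q·k)/π. That form, the omission of any normalization step, and the function names are assumptions made for illustration, not the paper's implementation. The depthwise convolution and auxiliary masked softmax attention mentioned in the abstract are left out.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def linear_angular_attention(Q, K, V):
    """Apply the similarity 1/2 + (q.k)/pi without forming the N x N matrix."""
    Q = [normalize(q) for q in Q]
    K = [normalize(k) for k in K]
    d, dv = len(K[0]), len(V[0])
    # Both quantities are computed once and shared by every query:
    # the sum of all values, and the d x dv matrix K^T V.
    v_sum = [sum(v[c] for v in V) for c in range(dv)]
    ktv = [[sum(k[a] * v[c] for k, v in zip(K, V)) for c in range(dv)]
           for a in range(d)]
    return [
        [0.5 * v_sum[c] + sum(q[a] * ktv[a][c] for a in range(d)) / math.pi
         for c in range(dv)]
        for q in Q
    ]


def quadratic_reference(Q, K, V):
    """Same similarity computed pair by pair, for checking the result."""
    Q = [normalize(q) for q in Q]
    K = [normalize(k) for k in K]
    dv = len(V[0])
    out = []
    for q in Q:
        sims = [0.5 + sum(a * b for a, b in zip(q, k)) / math.pi for k in K]
        out.append([sum(s * v[c] for s, v in zip(sims, V)) for c in range(dv)])
    return out
```

Because the similarity is linear in q·k, the sum over keys can be reordered so that cost grows linearly with the number of tokens; the two functions return the same values up to floating-point rounding.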

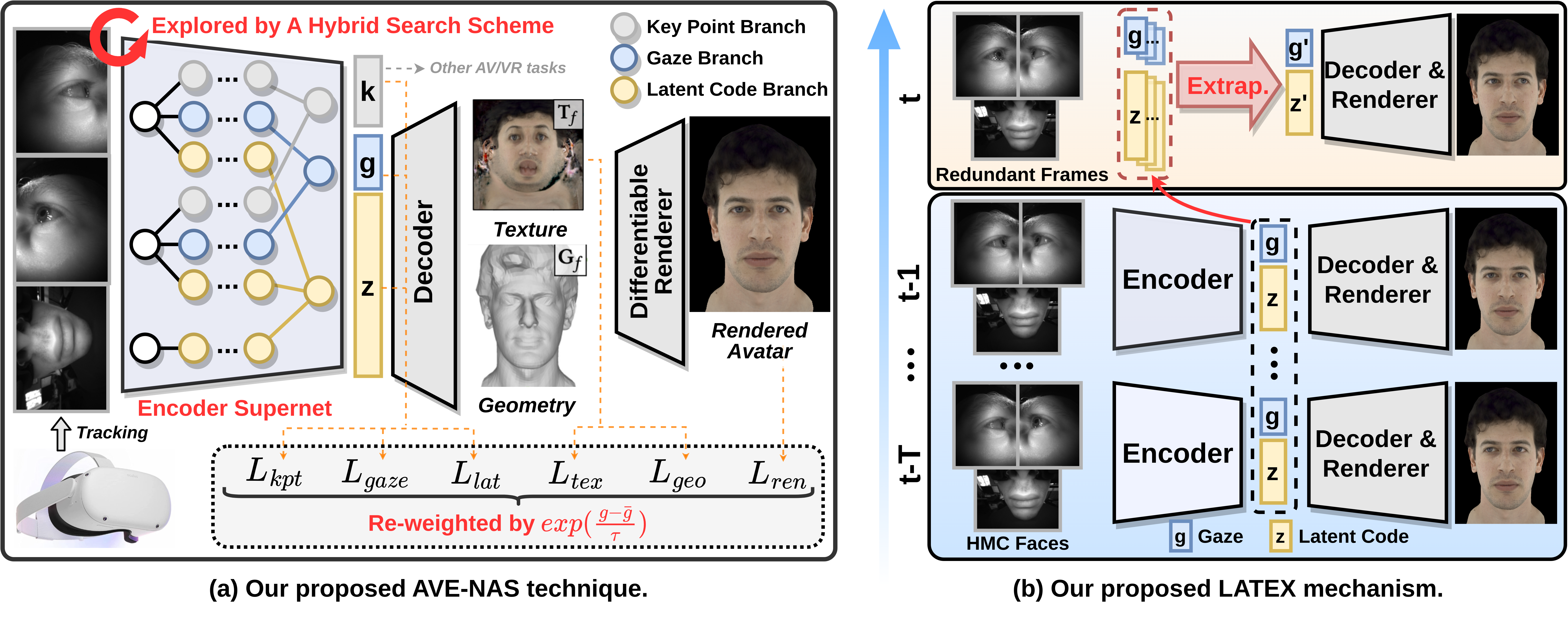

[CVPR 2023] Auto-CARD: Efficient and Robust Codec Avatar Driving for Real-time Mobile Telepresence

Yonggan Fu, Yuecheng Li, Chenghui Li, Jason Saragih, Peizhao Zhang, Xiaoliang Dai, and Yingyan (Celine) Lin

[Abstract] [Paper] [Video]

Real-time and robust photorealistic avatars for telepresence in AR/VR have been highly desired for enabling immersive photorealistic telepresence. However, there still exists one key bottleneck: the considerable computational expense needed to accurately infer facial expressions captured from headset-mounted cameras with a quality level that can match the realism of the avatar's human appearance. To this end, we propose a framework called Auto-CARD, which for the first time enables real-time and robust driving of Codec Avatars using only on-device computing resources. This is achieved by minimizing two sources of redundancy. First, we develop a dedicated neural architecture search technique called AVE-NAS for avatar encoding in AR/VR, which explicitly boosts both the searched architectures' robustness in the presence of extreme facial expressions and hardware friendliness on fast evolving AR/VR headsets. Second, we leverage the temporal redundancy in consecutively captured images during continuous rendering and develop a mechanism dubbed LATEX to skip the computation of redundant frames. Specifically, we first identify an opportunity from the linearity of the latent space derived by the avatar decoder and then propose to perform adaptive latent extrapolation for redundant frames. For evaluation, we demonstrate the efficacy of our Auto-CARD framework in real-time Codec Avatar driving settings, where we achieve a 5.05× speed-up on Meta Quest 2 while maintaining a comparable or even better animation quality than state-of-the-art avatar encoder designs.
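
The idea of skipping redundant frames by extrapolating in a roughly linear latent space can be sketched as follows. This is only an illustration under stated assumptions: the fixed `keyframe_interval`, the two-point linear rule, and the names `extrapolate_latent`, `drive_latents`, and `encode` are hypothetical, and the abstract does not describe how LATEX adaptively decides which frames to skip.

```python
def extrapolate_latent(z_prev, z_curr, steps=1):
    """Linearly extrapolate a latent code `steps` frames past z_curr."""
    return [c + steps * (c - p) for p, c in zip(z_prev, z_curr)]


def drive_latents(frames, encode, keyframe_interval=2):
    """Run the (expensive) encoder on key frames only.

    Assumption: a fixed interval stands in for the adaptive skipping
    criterion, which the abstract does not specify.
    """
    latents = []
    for t, frame in enumerate(frames):
        if t < 2 or t % keyframe_interval == 0:
            latents.append(encode(frame))      # full encoder pass
        else:
            # Skipped frame: reuse the two most recent latent codes.
            latents.append(extrapolate_latent(latents[-2], latents[-1]))
    return latents
```

When the latent trajectory really is linear over a short window, the extrapolated codes match what the encoder would have produced, so the saved encoder passes cost no accuracy.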

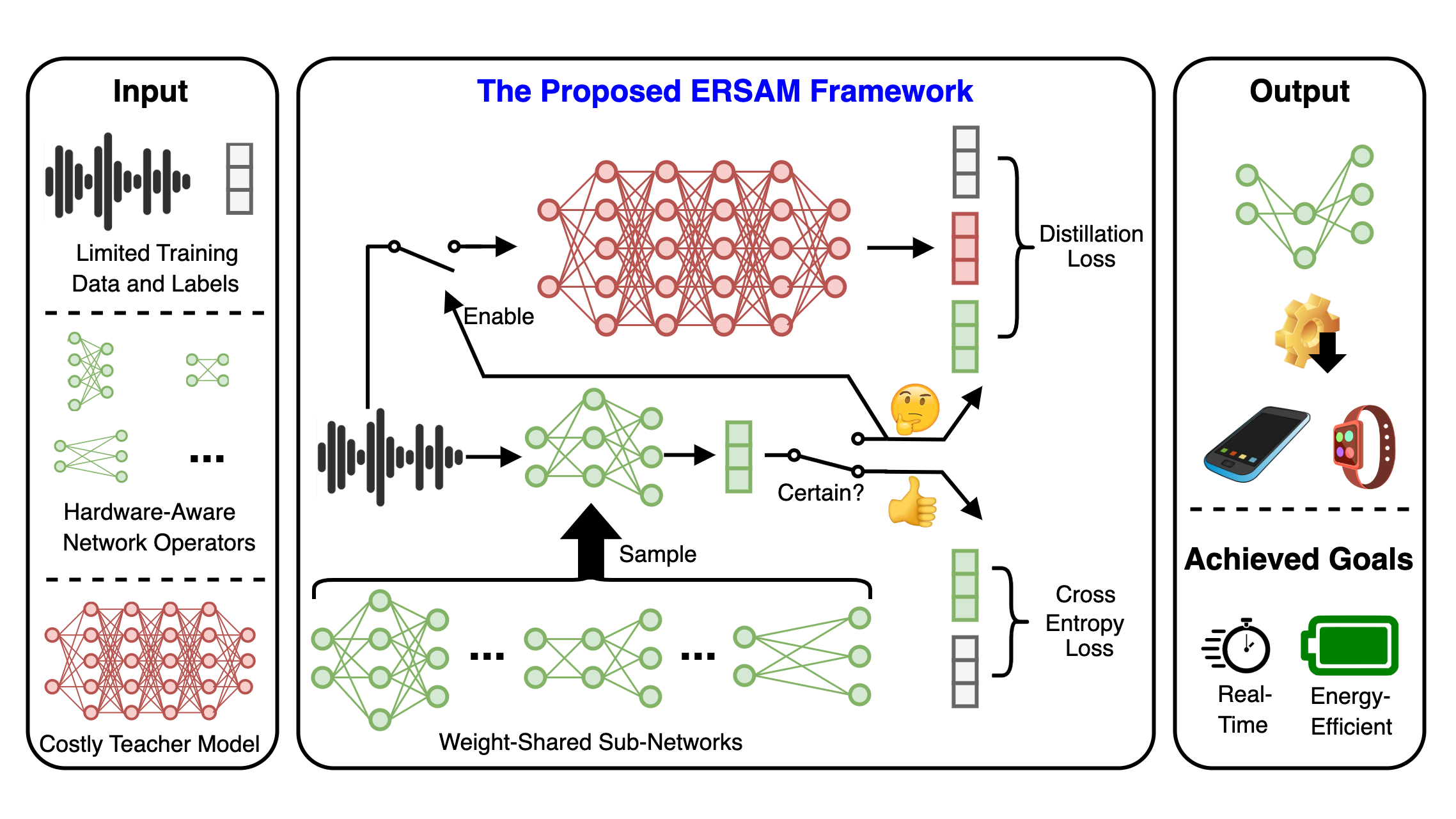

[ICASSP 2023] ERSAM: Neural Architecture Search For Energy-Efficient and Real-Time Social Ambiance Measurement

Chaojian Li, Wenwan Chen, Jiayi Yuan, Yingyan (Celine) Lin, and Ashutosh Sabharwal

[Abstract] [Paper]

Social ambiance describes the context in which social interactions happen, and can be measured using speech audio by counting the number of concurrent speakers. This measurement has enabled various mental health tracking and human-centric IoT applications. While on-device Social Ambiance Measure (SAM) is highly desirable to ensure user privacy and thus facilitate wide adoption of the aforementioned applications, the required computational complexity of state-of-the-art deep neural networks (DNNs) powered SAM solutions stands at odds with the often constrained resources on mobile devices. Furthermore, only limited labeled data is available or practical when it comes to SAM under clinical settings due to various privacy constraints and the required human effort, further challenging the achievable accuracy of on-device SAM solutions. To this end, we propose a dedicated neural architecture search framework for Energy-efficient and Real-time SAM (ERSAM). Specifically, our ERSAM framework can automatically search for DNNs that push forward the achievable accuracy vs. hardware efficiency frontier of mobile SAM solutions. For example, ERSAM-delivered DNNs only consume 40 mW · 12 h energy and 0.05 seconds processing latency for a 5-second audio segment on a Pixel 3 phone, while achieving an error rate of only 14.3% on a social ambiance dataset generated by LibriSpeech. We can expect that our ERSAM framework can pave the way for ubiquitous on-device SAM solutions which are in growing demand.
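
The efficiency figures in the abstract can be put in more familiar units with a little arithmetic. The sketch below assumes "40 mW · 12 h" means an average draw of 40 mW sustained for 12 hours; that reading and the helper names are assumptions, not statements from the paper.

```python
# Figures quoted in the abstract, kept as integers to avoid rounding noise.
POWER_MW = 40        # assumed average power draw, in milliwatts
DURATION_H = 12      # operating time, in hours
LATENCY_MS = 50      # 0.05 s of processing ...
SEGMENT_MS = 5000    # ... per 5 s audio segment


def energy_mwh(power_mw, hours):
    """Energy in milliwatt-hours."""
    return power_mw * hours


def energy_joules(power_mw, hours):
    """Energy in joules: mW * h * 3600 s/h, divided by 1000 mW/W."""
    return power_mw * hours * 3600 / 1000


def real_time_factor(segment_ms, latency_ms):
    """How many seconds of audio are processed per second of compute."""
    return segment_ms / latency_ms


print(energy_mwh(POWER_MW, DURATION_H))          # 480
print(energy_joules(POWER_MW, DURATION_H))       # 1728.0
print(real_time_factor(SEGMENT_MS, LATENCY_MS))  # 100.0
```

Under that reading, a 12-hour session costs about 0.48 Wh, and each audio segment is processed roughly 100 times faster than real time.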

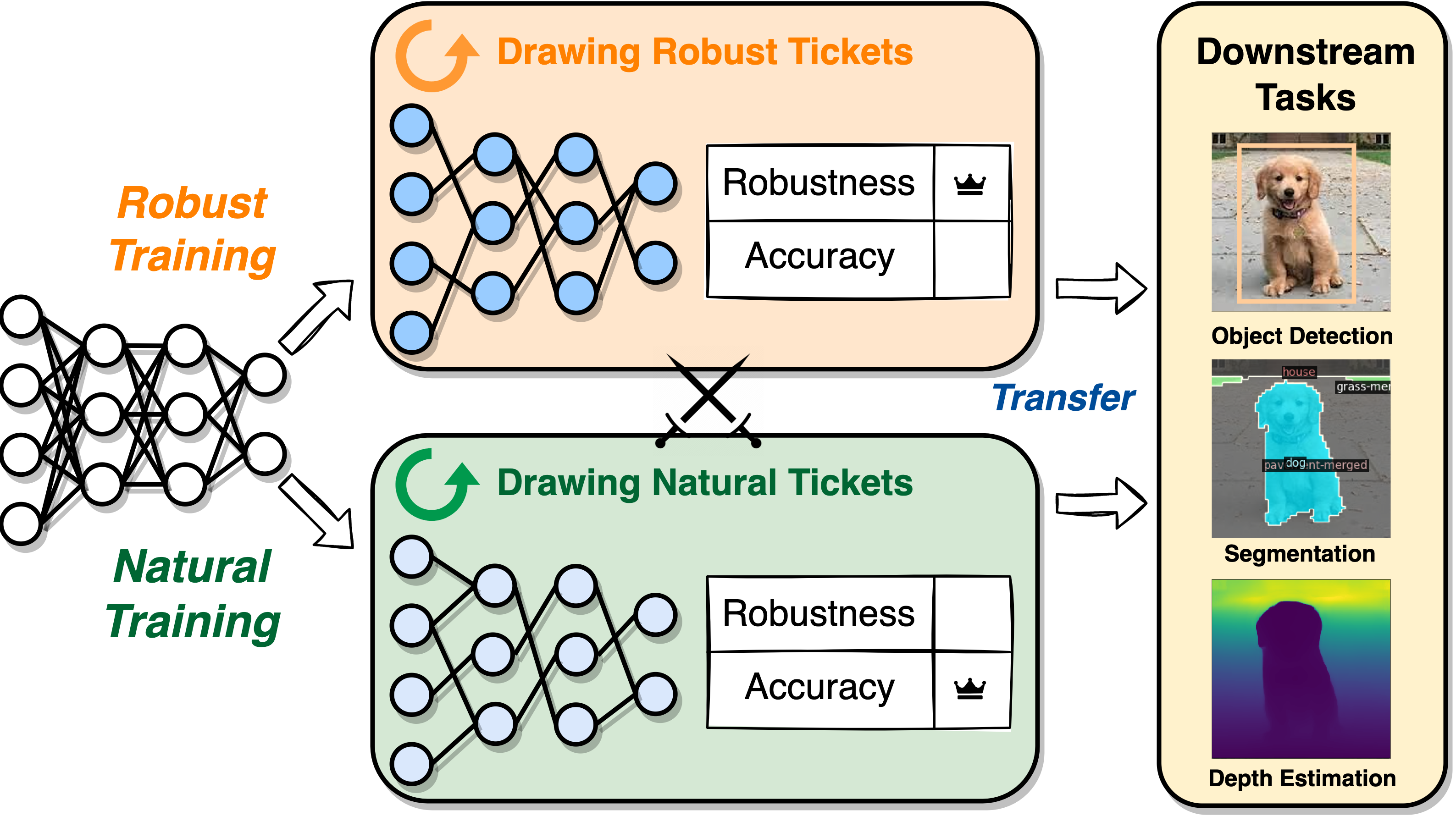

[DAC 2023] Robust Tickets Can Transfer Better: Drawing More Transferable Subnetworks in Transfer Learning

Yonggan Fu, Ye Yuan, Shang Wu, Jiayi Yuan, and Yingyan (Celine) Lin

[Abstract] [Paper]

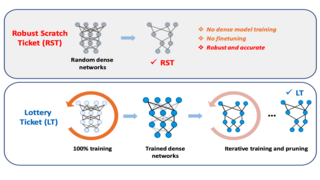

Transfer learning leverages feature representations of deep neural networks (DNNs) pretrained on rich source tasks to empower the finetuning on downstream tasks, whereas pretrained models are often prohibitively large for delivering generalizable representations, which limits their deployment on edge devices. To close this gap, driven by the lottery ticket hypothesis, we interestingly find that robust tickets can transfer better, i.e., subnetworks drawn with properly induced adversarial robustness can win better transferability over vanilla lottery tickets. Our proposed transfer learning pipelines can achieve enhanced accuracy-sparsity trade-offs across diverse tasks and sparsity patterns on downstream tasks and enrich the lottery ticket hypothesis.

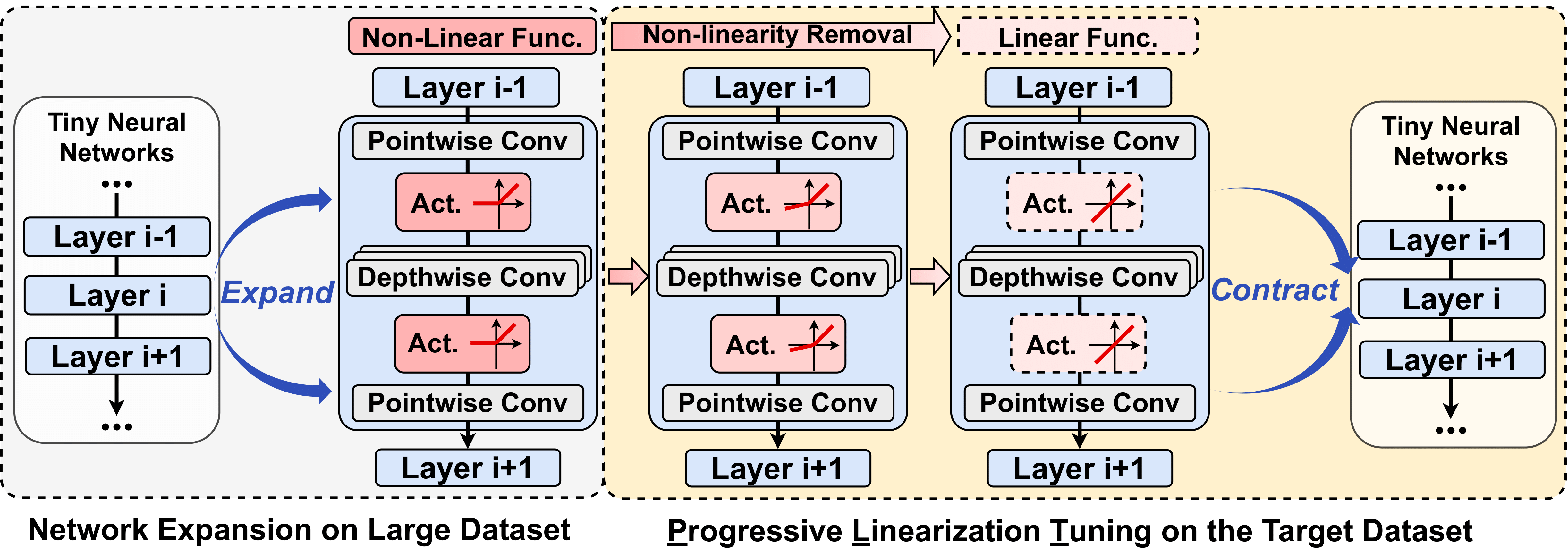

[DAC 2023] NetBooster: Empowering Tiny Deep Learning By Standing on the Shoulders of Deep Giants

Zhongzhi Yu, Yonggan Fu, Jiayi Yuan, Haoran You, and Yingyan (Celine) Lin

[Abstract] [Paper]

Tiny deep learning has attracted increasing attention driven by the substantial demand for deploying deep learning on numerous intelligent Internet-of-Things devices. However, it is still challenging to unleash tiny deep learning's full potential on both large-scale datasets and downstream tasks due to the under-fitting issues caused by the limited model capacity of tiny neural networks (TNNs). To this end, we propose a framework called NetBooster to empower tiny deep learning by augmenting the architectures of TNNs via an expansion-then-contraction strategy. Extensive experiments show that NetBooster consistently outperforms state-of-the-art tiny deep learning solutions.

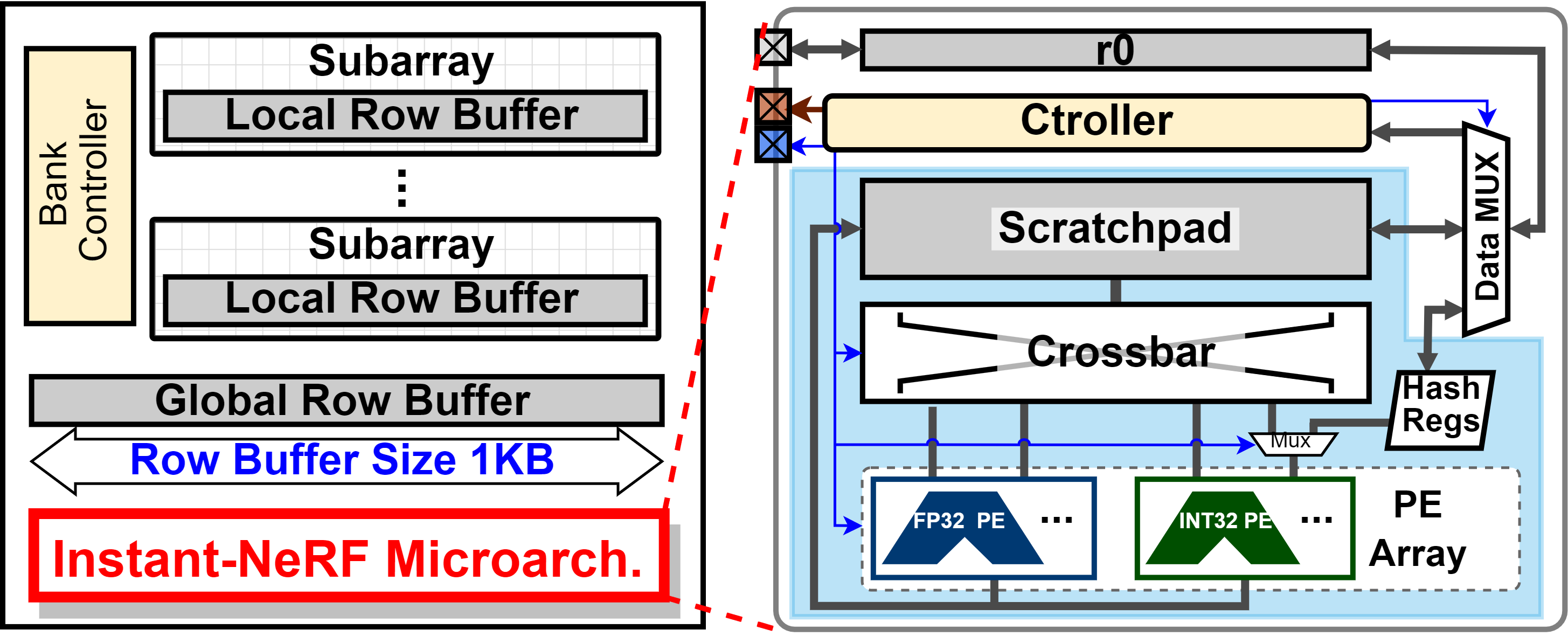

[DAC 2023] Instant-NeRF: Instant On-Device Neural Radiance Field Training via Algorithm-Accelerator Co-Designed Near-Memory Processing

Yang Zhao, Shang Wu, Jingqun Zhang, Sixu Li, Chaojian Li, and Yingyan (Celine) Lin

[Abstract] [Paper]

Instant on-device Neural Radiance Fields (NeRFs) are in growing demand to unleash the promise of immersive AR/VR experiences, but still limited by their prohibitive training time. Our profiling analysis unveils a memory-bound bottleneck in NeRF training. To tackle this bottleneck, near-memory processing (NMP) promises to be an effective solution, but also faces various challenges due to the unique workloads of NeRFs, including random hash table lookup, random point processing sequence, and heterogeneous bottleneck steps. Therefore, we propose the first NMP framework, Instant-NeRF, dedicated to enabling instant on-device NeRF training. Experiments on eight datasets consistently validate the effectiveness of Instant-NeRF. Index Terms: Neural Radiance Field, Algorithm-Accelerator Co-Design, Near-Memory Processing, On-Device Training.

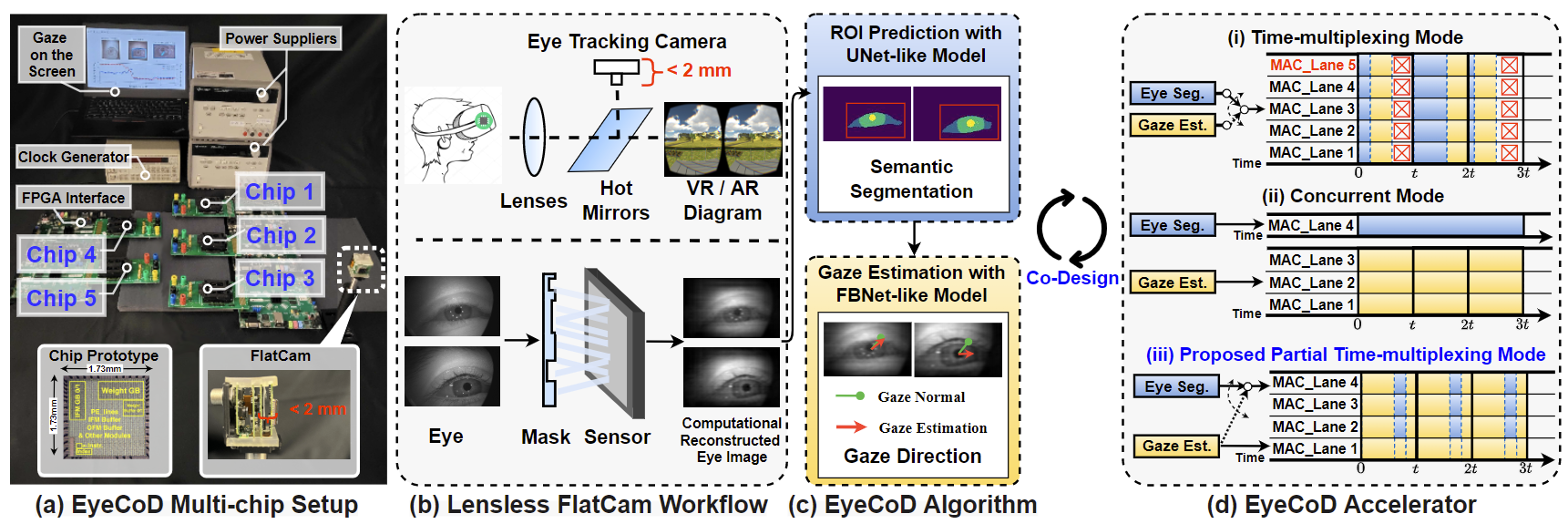

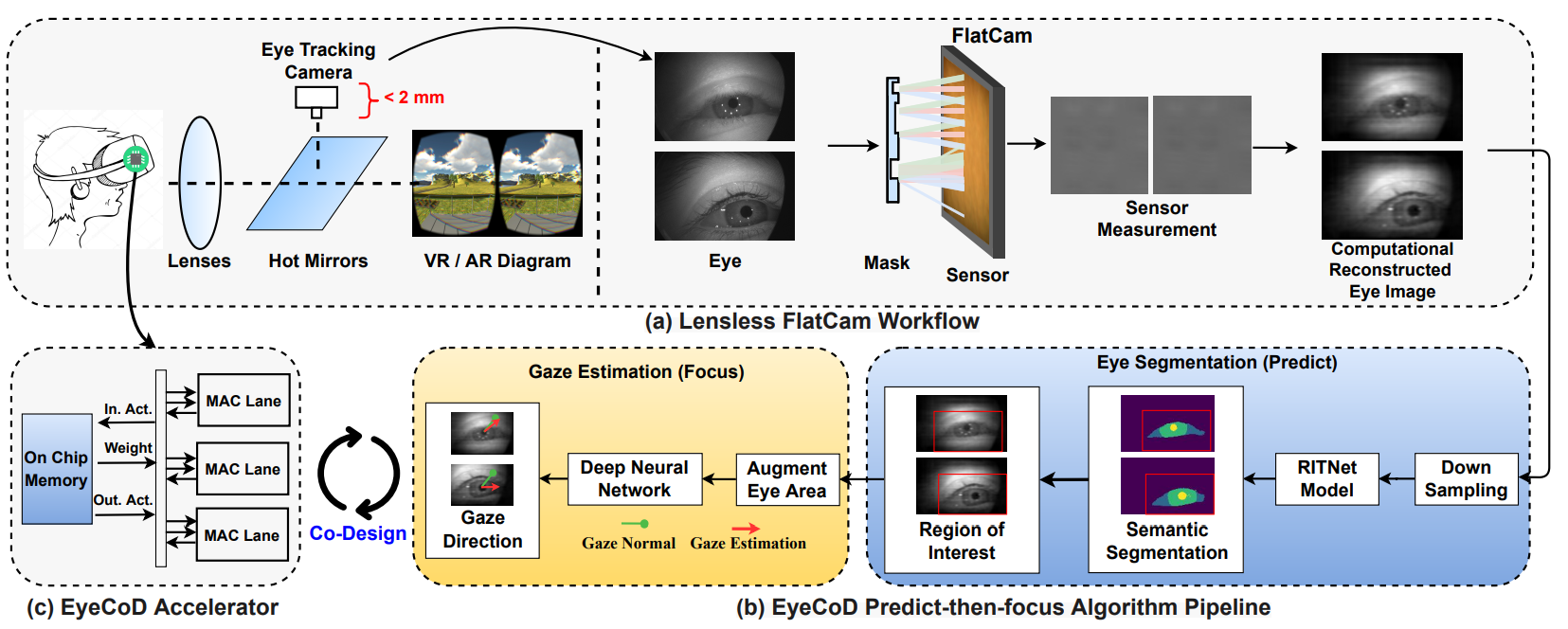

[MICRO TopPick 2023] EyeCoD: Eye Tracking System Acceleration via FlatCam-based Algorithm/Hardware Co-Design

Haoran You*, Cheng Wan*, Yang Zhao*, Zhongzhi Yu*, Yonggan Fu, Jiayi Yuan, Shang Wu, Shunyao Zhang, Yongan Zhang, Chaojian Li, Vivek Boominathan, Ashok Veeraraghavan, Ziyun Li, and Yingyan (Celine) Lin

[Abstract] [Paper] [Project Page]

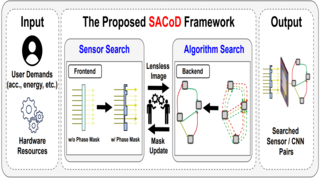

Eye tracking has enabled many virtual and augmented reality (VR/AR) applications and demands high throughput (e.g., 240 FPS), small form-factor, and enhanced visual privacy. Existing eye tracking systems adopt bulky lens-based cameras, suffering from large form-factor and high communication cost between cameras and backend processors. Instead, we devise a lensless FlatCam-based eye tracking algorithm and hardware accelerator co-design framework dubbed EyeCoD, which is the first system to meet the high throughput requirement with smaller form-factor. On the algorithm level, EyeCoD integrates a predict-then-focus pipeline that predicts the region-of-interest (ROI) before gaze estimation. On the hardware level, EyeCoD further attaches a dedicated chip to the camera for accelerating the two machine learning models used in the algorithm. Overall, the proposed EyeCoD system wins high throughput, small form-factor, and visual privacy altogether.

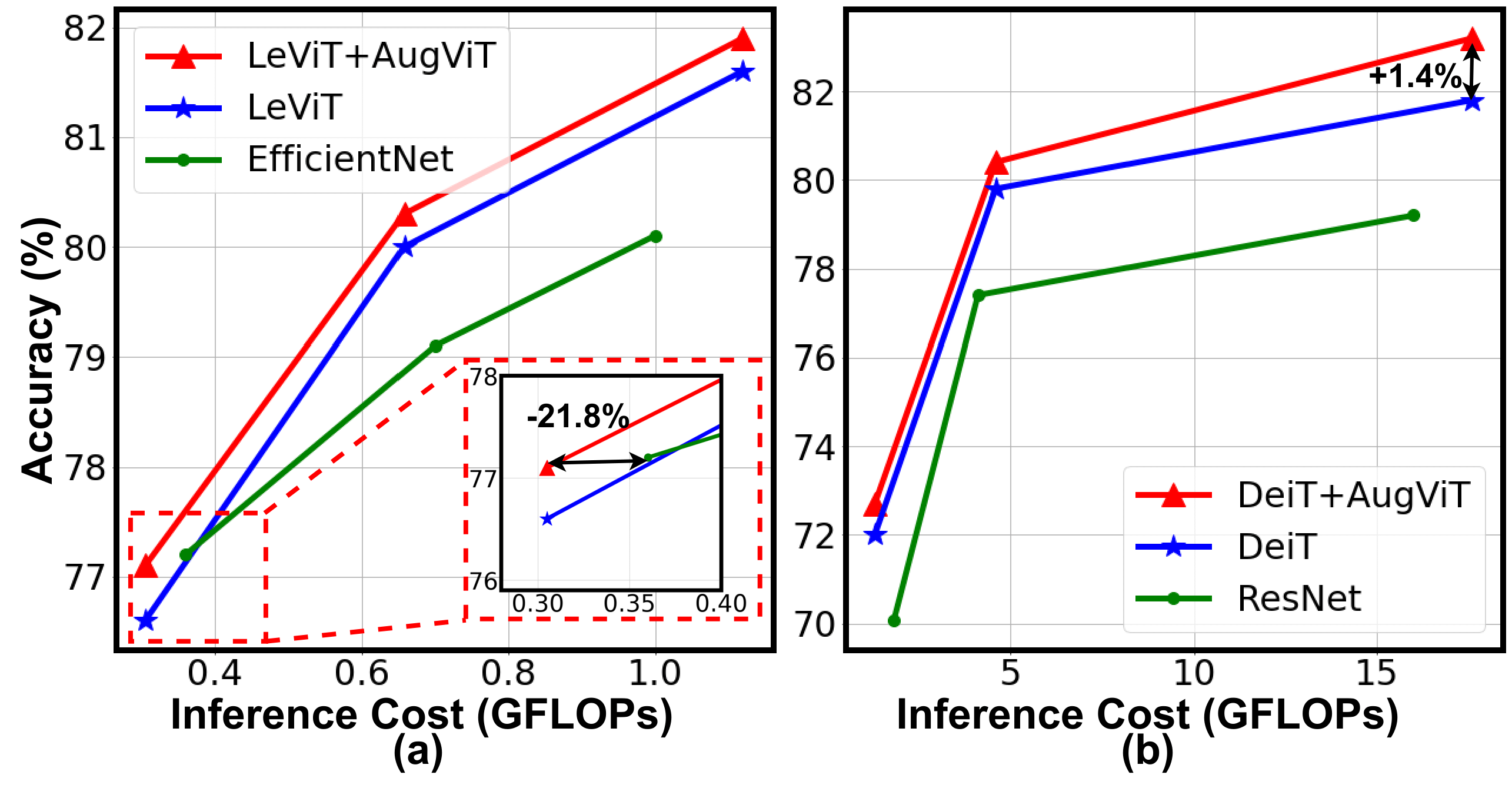

[TinyML 2023] AugViT: Improving Vision Transformer Training by Marrying Attention and Data Augmentation

Zhongzhi Yu, Yonggan Fu, Chaojian Li, and Yingyan (Celine) Lin

[Abstract]
